Hi all,

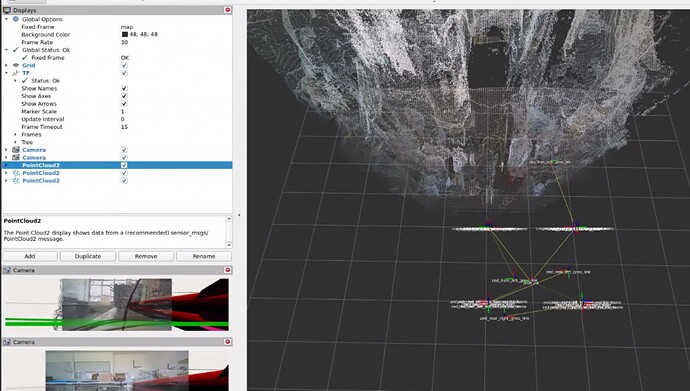

After setting up the URDF (which describes the position and orientation of the cameras) and pose tracking with it, I was wondering whether the topic mapping/fused_cloud should already be aligned according to the defined camera extrinsics (each camera's pose w.r.t. the “master-camera” or base link). When I display the fused_cloud in RViz, its orientation does not look correct.

Do I have to do the alignment myself, e.g. in a custom ROS2 node, in order to get a 360-degree mapping?

And what is the difference between the topics mapping/fused_cloud and /point_cloud/cloud_registered?