Hello Stereolabs team,

I have started working with your SDK, and it's pretty awesome what we can do with it. The amount of functionality and control we have in most modules is super handy.

I’m setting up a multi-camera rig with two ZED2i cameras facing each other, angled downwards and approximately 3.6 m apart, in pretty good lighting. Their fields of view also overlap well (> 40%).

Both cameras are connected locally to a Jetson Orin NX, and their purpose is to inspect static objects and create accurate point clouds.

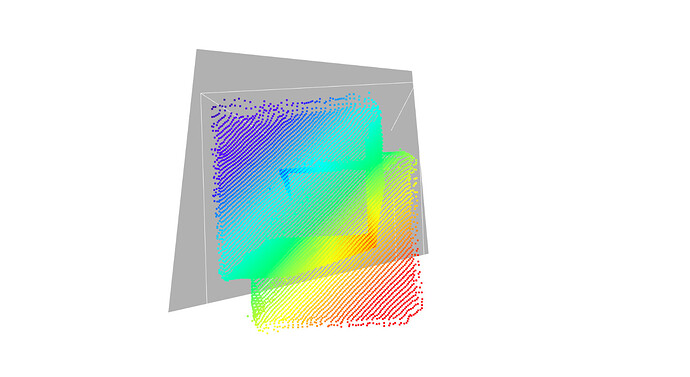

My issue is that I have spent the past few days trying to get a good extrinsic calibration with ZED360, and even under these fairly good conditions I get a mismatch of around ±15 cm, mainly in the XY plane. Unfortunately, this is not acceptable for my application, so I was wondering if you have any suggestions for improving the accuracy. I have adjusted the room settings and tried both the NEURAL and ULTRA depth modes, moving very slowly throughout the scene for more than a minute. No matter which settings I use, I get a very similar calibration output, which is not correct.
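To make the mismatch figure concrete, here is a minimal sketch of one way a per-axis offset like this can be measured. It assumes matched landmarks (for example, the same object corners) are available from both cameras, already expressed in the shared world frame. The function name and the sample coordinates are hypothetical and only illustrate the calculation. It is plain Python, does not use the ZED SDK, and is not necessarily how the ±15 cm figure above was obtained.

```python
from statistics import mean


def per_axis_offset(points_a, points_b):
    """Mean signed offset (b - a) per axis between matched 3D points, in metres."""
    if len(points_a) != len(points_b) or not points_a:
        raise ValueError("need two non-empty point lists of equal length")
    return tuple(
        mean(b[i] - a[i] for a, b in zip(points_a, points_b))
        for i in range(3)
    )


# Hypothetical data: the same three landmarks as reconstructed by each camera,
# both sets already transformed into the common world frame.
cam1 = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)]
cam2 = [(0.15, -0.10, 1.0), (1.15, -0.10, 1.0), (0.15, 0.90, 1.0)]

dx, dy, dz = per_axis_offset(cam1, cam2)
print(f"offset: dx={dx:.3f} m, dy={dy:.3f} m, dz={dz:.3f} m")
```

A constant offset across all landmarks, as in this made-up data, would point to a translation error in the extrinsics. An offset that grows with distance from the cameras would suggest a rotation error instead.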

Any ideas on what I can do to improve the ZED360 calibration? Is there any way to increase the camera resolution from the default HD720? I would expect the calibration error to be below the centimeter level under these conditions.

Sample output from the Fusion API:

I'm using the stereolabs/zed:5.0-tools-devel-l4t-r36.4 Docker image to run the ZED360 tool.

Thanks a lot!