HI,

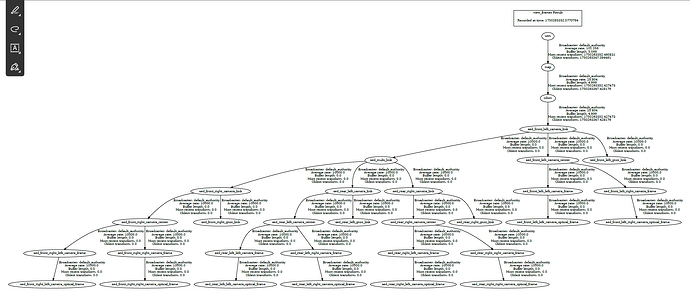

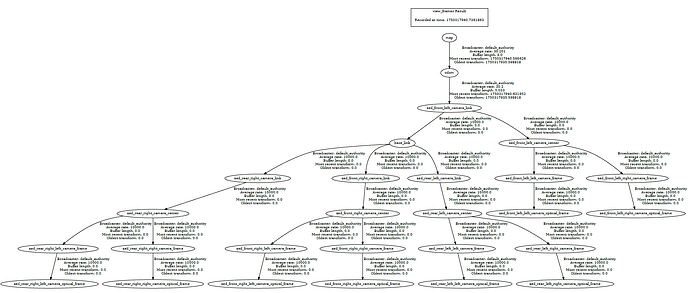

I am a little unsure about the correct configuration of the TF-tree (as shown following) for pose tracking in a outdoor application, therefore I set utm → map (hope this is correct)

We have four ZED X cameras and a dual-antenna GPS module. I would now like to determine the pose in relation to dbas base-link [0.0, 0.0, 0.0]. I only do the locaisation with the ZED cameras and the GPS+RTK module. Shouldn’t a base_link appear in the TF-tree? Unfortunately, the documentation did not help me to solve my problem.

My config file and urdf files look like this:

stereo_common.yaml

# config/common_stereo.yaml

# Common parameters to Stereolabs ZED Stereo cameras

---

/**:

ros__parameters:

use_sim_time: false # Set to `true` only if there is a publisher for the simulated clock to the `/clock` topic. Normally used in simulation mode.

simulation:

sim_enabled: false # Set to `true` to enable the simulation mode and connect to a simulation server

sim_address: '127.0.0.1' # The connection address of the simulation server. See the documentation of the supported simulation plugins for more information.

sim_port: 30000 # The connection port of the simulation server. See the documentation of the supported simulation plugins for more information.

svo:

use_svo_timestamps: true # Use the SVO timestamps to publish data. If false, data will be published at the system time.

publish_svo_clock: false # [overwritten by launch file options] When use_svo_timestamps is true allows to publish the SVO clock to the `/clock` topic. This is useful for synchronous rosbag playback.

svo_loop: false # Enable loop mode when using an SVO as input source. NOTE: ignored if SVO timestamping is used

svo_realtime: false # if true the SVO will be played trying to respect the original framerate eventually skipping frames, otherwise every frame will be processed respecting the `pub_frame_rate` setting

play_from_frame: 0 # Start playing the SVO from a specific frame

replay_rate: 1.0 # Replay rate for the SVO when not used in realtime mode (between [0.10-5.0])

general:

camera_timeout_sec: 5

camera_max_reconnect: 5

camera_flip: false

self_calib: true # Enable the self-calibration process at camera opening. See https://www.stereolabs.com/docs/api/structsl_1_1InitParameters.html#affeaa06cfc1d849e311e484ceb8edcc5

serial_number: 0 # overwritten by launch file

pub_resolution: 'CUSTOM' # The resolution used for image and depth map publishing. 'NATIVE' to use the same `general.grab_resolution` - `CUSTOM` to apply the `general.pub_downscale_factor` downscale factory to reduce bandwidth in transmission

pub_downscale_factor: 2.0 # rescale factor used to rescale image before publishing when 'pub_resolution' is 'CUSTOM'

pub_frame_rate: 15.0 # [DYNAMIC] Frequency of publishing of visual images and depth data (not the Point Cloud, see 'depth.point_cloud_freq'). This value must be equal or less than the camera framerate.

enable_image_validity_check: 0 # [SDK5 required] Sets the image validity check. If set to 1, the SDK will check if the frames are valid before processing.

gpu_id: 0

optional_opencv_calibration_file: '' # Optional path where the ZED SDK can find a file containing the calibration information of the camera computed by OpenCV. Read the ZED SDK documentation for more information: https://www.stereolabs.com/docs/api/structsl_1_1InitParameters.html#a9eab2753374ef3baec1d31960859ba19

async_image_retrieval: false # If set to true will camera image retrieve at a framerate different from \ref grab() application framerate. This is useful for recording SVO or sending camera stream at different rate than application.

# Other parameters are defined, according to the camera model, in the 'zed.yaml', 'zedm.yaml', 'zed2.yaml', 'zed2i.yaml'

# 'zedx.yaml', 'zedxmini.yaml', 'virtual.yaml' files

video:

saturation: 4 # [DYNAMIC]

sharpness: 4 # [DYNAMIC]

gamma: 8 # [DYNAMIC]

auto_exposure_gain: true # [DYNAMIC]

exposure: 80 # [DYNAMIC]

gain: 80 # [DYNAMIC]

auto_whitebalance: true # [DYNAMIC]

whitebalance_temperature: 42 # [DYNAMIC] - [28,65] x100 - works only if `auto_whitebalance` is false

# Other parameters are defined, according to the camera model, in the 'zed.yaml', 'zedm.yaml', 'zed2.yaml', 'zed2i.yaml'

# 'zedx.yaml', 'zedxmini.yaml', 'virtual.yaml' files

sensors:

publish_imu_tf: true # [overwritten by launch file options] enable/disable the IMU TF broadcasting

sensors_image_sync: true # Synchronize Sensors messages with latest published video/depth message

sensors_pub_rate: 100. # frequency of publishing of sensors data. MAX: 400. - MIN: grab rate

region_of_interest:

automatic_roi: false # Enable the automatic ROI generation to automatically detect part of the robot in the FoV and remove them from the processing. Note: if enabled the value of `manual_polygon` is ignored

depth_far_threshold_meters: 2.5 # Filtering how far object in the ROI should be considered, this is useful for a vehicle for instance

image_height_ratio_cutoff: 0.5 # By default consider only the lower half of the image, can be useful to filter out the sky

#manual_polygon: '[]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#manual_polygon: '[[0.25,0.33],[0.75,0.33],[0.75,0.5],[0.5,0.75],[0.25,0.5]]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#manual_polygon: '[[0.25,0.25],[0.75,0.25],[0.75,0.75],[0.25,0.75]]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#manual_polygon: '[[0.5,0.25],[0.75,0.5],[0.5,0.75],[0.25,0.5]]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

apply_to_depth: true # Apply ROI to depth processing

apply_to_positional_tracking: true # Apply ROI to positional tracking processing

apply_to_object_detection: true # Apply ROI to object detection processing

apply_to_body_tracking: true # Apply ROI to body tracking processing

apply_to_spatial_mapping: true # Apply ROI to spatial mapping processing

depth:

depth_mode: 'NEURAL_LIGHT' # Matches the ZED SDK setting: 'NONE', 'PERFORMANCE', 'QUALITY', 'ULTRA', 'NEURAL', 'NEURAL_PLUS' - Note: if 'NONE' all the modules that requires depth extraction are disabled by default (Pos. Tracking, Obj. Detection, Mapping, ...)

depth_stabilization: 10 # Forces positional tracking to start if major than 0 - Range: [0,100]

openni_depth_mode: false # 'false': 32bit float [meters], 'true': 16bit unsigned int [millimeters]

point_cloud_freq: 5.0 # [DYNAMIC] Frequency of the pointcloud publishing. This value must be equal or less than the camera framerate.

point_cloud_res: 'COMPACT' # The resolution used for point cloud publishing - 'COMPACT'-Standard resolution. Optimizes processing and bandwidth, 'REDUCED'-Half resolution. Low processing and bandwidth requirements

depth_confidence: 95 # [DYNAMIC]

depth_texture_conf: 100 # [DYNAMIC]

remove_saturated_areas: true # [DYNAMIC]

# Other parameters are defined, according to the camera model, in the 'zed.yaml', 'zedm.yaml', 'zed2.yaml', 'zed2i.yaml'

# 'zedx.yaml', 'zedxmini.yaml', 'virtual.yaml' files

pos_tracking:

pos_tracking_enabled: true # True to enable positional tracking from start

pos_tracking_mode: 'GEN_3' # Matches the ZED SDK setting: 'GEN_1', 'GEN_2', 'GEN_3'

imu_fusion: true # enable/disable IMU fusion. When set to false, only the optical odometry will be used.

publish_tf: true # [overwritten by launch file options] publish `odom -> camera_link` TF

publish_map_tf: true # [overwritten by launch file options] publish `map -> odom` TF

map_frame: 'map'

odometry_frame: 'odom'

area_memory_db_path: ''

area_memory: true # Enable to detect loop closure

reset_odom_with_loop_closure: true # Re-initialize odometry to the last valid pose when loop closure happens (reset camera odometry drift)

depth_min_range: 0.0 # Set this value for removing fixed zones of the robot in the FoV of the camerafrom the visual odometry evaluation

set_as_static: false # If 'true' the camera will be static and not move in the environment

set_gravity_as_origin: true # If 'true' align the positional tracking world to imu gravity measurement. Keep the yaw from the user initial pose.

floor_alignment: false # Enable to automatically calculate camera/floor offset

initial_base_pose: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0] # Initial position of the `camera_link` frame in the map -> [X, Y, Z, R, P, Y]

path_pub_rate: 5.0 # [DYNAMIC] - Camera trajectory publishing frequency

path_max_count: -1 # use '-1' for unlimited path size

two_d_mode: false # Force navigation on a plane. If true the Z value will be fixed to 'fixed_z_value', roll and pitch to zero

fixed_z_value: 0.0 # Value to be used for Z coordinate if `two_d_mode` is true

transform_time_offset: 0.0 # The value added to the timestamp of `map->odom` and `odom->camera_link` transform being generated

reset_pose_with_svo_loop: true # Reset the camera pose the `initial_base_pose` when the SVO loop is enabled and the SVO playback reaches the end of the file.

gnss_fusion:

gnss_fusion_enabled: true # fuse 'sensor_msg/NavSatFix' message information into pose data

gnss_fix_topic: '/gps/ZED_tracking' # Name of the GNSS topic of type NavSatFix to subscribe [Default: '/gps/fix']

gnss_zero_altitude: false # Set to `true` to ignore GNSS altitude information

h_covariance_mul: 1.0 # Multiplier factor to be applied to horizontal covariance of the received fix (plane X/Y)

v_covariance_mul: 1.0 # Multiplier factor to be applied to vertical covariance of the received fix (Z axis)

publish_utm_tf: true # Publish `utm` -> `map` TF

broadcast_utm_transform_as_parent_frame: true # if 'true' publish `utm` -> `map` TF, otherwise `map` -> `utm`

enable_reinitialization: false # determines whether reinitialization should be performed between GNSS and VIO fusion when a significant disparity is detected between GNSS data and the current fusion data. It becomes particularly crucial during prolonged GNSS signal loss scenarios.

enable_rolling_calibration: true # If this parameter is set to true, the fusion algorithm will used a rough VIO / GNSS calibration at first and then refine it. This allow you to quickly get a fused position.

enable_translation_uncertainty_target: false # When this parameter is enabled (set to true), the calibration process between GNSS and VIO accounts for the uncertainty in the determined translation, thereby facilitating the calibration termination. The maximum allowable uncertainty is controlled by the 'target_translation_uncertainty' parameter.

gnss_vio_reinit_threshold: 5.0 # determines the threshold for GNSS/VIO reinitialization. If the fused position deviates beyond out of the region defined by the product of the GNSS covariance and the gnss_vio_reinit_threshold, a reinitialization will be triggered.

target_translation_uncertainty: 0.1 # defines the target translation uncertainty at which the calibration process between GNSS and VIO concludes. By default, the threshold is set at 10 centimeters.

target_yaw_uncertainty: 0.1 # defines the target yaw uncertainty at which the calibration process between GNSS and VIO concludes. The unit of this parameter is in radian. By default, the threshold is set at 0.1 radians.

mapping:

mapping_enabled: true # True to enable mapping and fused point cloud pubblication

resolution: 0.05 # maps resolution in meters [min: 0.01f - max: 0.2f]

max_mapping_range: 10.0 # maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0]

fused_pointcloud_freq: 5.0 # frequency of the publishing of the fused colored point cloud

clicked_point_topic: '/clicked_point' # Topic published by Rviz when a point of the cloud is clicked. Used for plane detection

pd_max_distance_threshold: 0.15 # Plane detection: controls the spread of plane by checking the position difference.

pd_normal_similarity_threshold: 15.0 # Plane detection: controls the spread of plane by checking the angle difference.

object_detection:

od_enabled: false # True to enable Object Detection

enable_tracking: true # Whether the object detection system includes object tracking capabilities across a sequence of images.

detection_model: 'MULTI_CLASS_BOX_FAST' # 'MULTI_CLASS_BOX_FAST', 'MULTI_CLASS_BOX_MEDIUM', 'MULTI_CLASS_BOX_ACCURATE', 'PERSON_HEAD_BOX_FAST', 'PERSON_HEAD_BOX_ACCURATE', 'CUSTOM_YOLOLIKE_BOX_OBJECTS'

max_range: 20.0 # [m] Upper depth range for detections.The value cannot be greater than 'depth.max_depth'

filtering_mode: 'NMS3D' # Filtering mode that should be applied to raw detections: 'NONE', 'NMS3D', 'NMS3D_PER_CLASS'

prediction_timeout: 2.0 # During this time [sec], the object will have OK state even if it is not detected. Set this parameter to 0 to disable SDK predictions

allow_reduced_precision_inference: false # Allow inference to run at a lower precision to improve runtime and memory usage

# Other parameters are defined in the 'object_detection.yaml' and 'custom_object_detection.yaml' files

body_tracking:

bt_enabled: false # True to enable Body Tracking

model: 'HUMAN_BODY_MEDIUM' # 'HUMAN_BODY_FAST', 'HUMAN_BODY_MEDIUM', 'HUMAN_BODY_ACCURATE'

body_format: 'BODY_38' # 'BODY_18','BODY_34','BODY_38'

allow_reduced_precision_inference: false # Allow inference to run at a lower precision to improve runtime and memory usage

max_range: 15.0 # [m] Defines a upper depth range for detections

body_kp_selection: 'FULL' # 'FULL', 'UPPER_BODY'

enable_body_fitting: false # Defines if the body fitting will be applied

enable_tracking: true # Defines if the object detection will track objects across images flow

prediction_timeout_s: 0.5 # During this time [sec], the skeleton will have OK state even if it is not detected. Set this parameter to 0 to disable SDK predictions

confidence_threshold: 50.0 # [DYNAMIC] - Minimum value of the detection confidence of skeleton key points [0,99]

minimum_keypoints_threshold: 5 # [DYNAMIC] - Minimum number of skeleton key points to be detected for a valid skeleton

stream_server:

stream_enabled: false # enable the streaming server when the camera is open

codec: 'H264' # different encoding types for image streaming: 'H264', 'H265'

port: 30000 # Port used for streaming. Port must be an even number. Any odd number will be rejected.

bitrate: 12500 # [1000 - 60000] Streaming bitrate (in Kbits/s) used for streaming. See https://www.stereolabs.com/docs/api/structsl_1_1StreamingParameters.html#a873ba9440e3e9786eb1476a3bfa536d0

gop_size: -1 # [max 256] The GOP size determines the maximum distance between IDR/I-frames. Very high GOP size will result in slightly more efficient compression, especially on static scenes. But latency will increase.

adaptative_bitrate: false # Bitrate will be adjusted depending the number of packet dropped during streaming. If activated, the bitrate can vary between [bitrate/4, bitrate].

chunk_size: 16084 # [1024 - 65000] Stream buffers are divided into X number of chunks where each chunk is chunk_size bytes long. You can lower chunk_size value if network generates a lot of packet lost: this will generates more chunk for a single image, but each chunk sent will be lighter to avoid inside-chunk corruption. Increasing this value can decrease latency.

target_framerate: 0 # Framerate for the streaming output. This framerate must be below or equal to the camera framerate. Allowed framerates are 15, 30, 60 or 100 if possible. Any other values will be discarded and camera FPS will be taken.

advanced: # WARNING: do not modify unless you are confident of what you are doing

# Reference documentation: https://man7.org/linux/man-pages/man7/sched.7.html

thread_sched_policy: 'SCHED_BATCH' # 'SCHED_OTHER', 'SCHED_BATCH', 'SCHED_FIFO', 'SCHED_RR' - NOTE: 'SCHED_FIFO' and 'SCHED_RR' require 'sudo'

thread_grab_priority: 50 # ONLY with 'SCHED_FIFO' and 'SCHED_RR' - [1 (LOW) z-> 99 (HIGH)] - NOTE: 'sudo' required

thread_sensor_priority: 70 # ONLY with 'SCHED_FIFO' and 'SCHED_RR' - [1 (LOW) z-> 99 (HIGH)] - NOTE: 'sudo' required

thread_pointcloud_priority: 60 # ONLY with 'SCHED_FIFO' and 'SCHED_RR' - [1 (LOW) z-> 99 (HIGH)] - NOTE: 'sudo' required

debug:

sdk_verbose: 1 # Set the verbose level of the ZED SDK

sdk_verbose_log_file: '' # Path to the file where the ZED SDK will log its messages. If empty, no file will be created. The log level can be set using the `sdk_verbose` parameter.

debug_common: false

debug_sim: false

debug_video_depth: false

debug_camera_controls: false

debug_point_cloud: false

debug_positional_tracking: false

debug_gnss: false

debug_sensors: false

debug_mapping: false

debug_terrain_mapping: false

debug_object_detection: false

debug_body_tracking: false

debug_roi: false

debug_streaming: false

debug_advanced: false

urdf file in ZED-Examples node for multi camera:

<?xml version="1.0"?>

<!--

// Copyright 2024 Stereolabs

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

-->

<robot name="zed_multi_camera" xmlns:xacro="http://ros.org/wiki/xacro">

<!-- <xacro:property name="M_PI" value="3.1415926535897931" /> -->

<!-- Define the required parameters -->

<xacro:arg name="multi_link" default="zed_multi_link" />

<xacro:arg name="camera_name_0" default="zed_front_left" />

<xacro:arg name="camera_name_1" default="zed_front_right" />

<xacro:arg name="camera_name_2" default="zed_rear_left" />

<xacro:arg name="camera_name_3" default="zed_rear_right" />

<!-- Define the reference links -->

<link name="$(arg multi_link)" />

<link name="$(arg camera_name_0)_camera_link" />

<link name="$(arg camera_name_1)_camera_link" />

<link name="$(arg camera_name_2)_camera_link" />

<link name="$(arg camera_name_3)_camera_link" />

<!-- Note the URDF of the cameras are loaded by the respective launch files.

We must only provide the position of each of them with respect to the reference link -->

<!-- Define the position of the front_left camera with respect to the reference link -->

<joint name="$(arg camera_name_0)_camera_joint" type="fixed">

<parent link="$(arg camera_name_0)_camera_link"/>

<child link="$(arg multi_link)"/>

<origin xyz="1.02523 1.14077 1.32922" rpy="-0.349066 0.349066 2.35619" />

</joint>

<!-- Define the position of the front_right camera with respect to the reference link -->

<joint name="$(arg camera_name_1)_camera_joint" type="fixed">

<parent link="$(arg multi_link)"/>

<child link="$(arg camera_name_1)_camera_link"/>

<origin xyz="0.89978 -0.90007 0.72141" rpy="0.349066 0.349066 0.785398" />

</joint>

<!-- Define the position of the rear_left camera with respect to the reference link -->

<joint name="$(arg camera_name_2)_camera_joint" type="fixed">

<parent link="$(arg multi_link)"/>

<child link="$(arg camera_name_2)_camera_link"/>

<origin xyz="-0.99078 1.02823 0.97763" rpy="-0.349066 -0.349066 -2.35619" />

</joint>

<!-- Define the position of the rear_right camera with respect to the reference link -->

<joint name="$(arg camera_name_3)_camera_joint" type="fixed">

<parent link="$(arg multi_link)"/>

<child link="$(arg camera_name_3)_camera_link"/>

<origin xyz="-0.99981 -0.9978 0.97763" rpy="0.349066 -0.349066 -0.785398" />

</joint>

</robot>

zed_descr urdf in zed_wrapper - here is defined the offset between the front left camera and the GPS antenna. Is this correct, or do I have to set the offset w.r.t the base_link, if I want to track this position?

<?xml version="1.0"?>

<!--

// Copyright 2024 Stereolabs

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

-->

<robot name="stereolabs_camera" xmlns:xacro="http://ros.org/wiki/xacro">

<xacro:arg name="camera_name" default="zed" />

<xacro:arg name="camera_model" default="zed" />

<xacro:arg name="custom_baseline" default="0.0" />

<!-- If GNSS fusion is enabled the position of the antenna with respect to the camera mount point is required -->

<xacro:arg name="enable_gnss" default="false" />

<xacro:arg name="gnss_x" default="-1.66657" />

<xacro:arg name="gnss_y" default="-0.54662" />

<xacro:arg name="gnss_z" default="0.57043" />

<xacro:include filename="$(find zed_wrapper)/urdf/zed_macro.urdf.xacro" />

<xacro:zed_camera name="$(arg camera_name)" model="$(arg camera_model)" custom_baseline="$(arg custom_baseline)" enable_gnss="$(arg enable_gnss)">

<origin xyz="$(arg gnss_x) $(arg gnss_y) $(arg gnss_z)" rpy="0 0 0"/>

</xacro:zed_camera>

</robot>