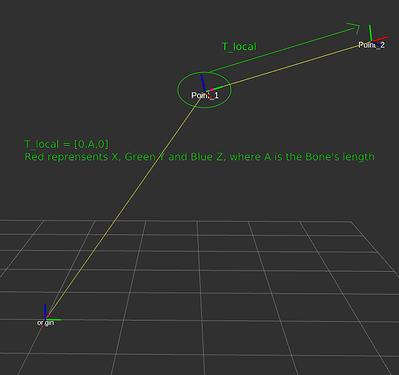

The ZED Livelink plugin for Unity (GitHub: stereolabs/zed-unity-livelink) uses a standard humanoid avatar (see Unity Manual: Humanoid Avatars) to animate the skeleton data coming from the SDK. The SDK's Body38 format closely resembles the skeleton of a humanoid avatar, but when using Body34, how are the joints mapped onto the humanoid avatar? For example, the pelvis bone of a Body34 skeleton sits lower than that of the Body38 (and humanoid avatar) skeleton. Is there a formula for calculating the position and rotation of the humanoid avatar's pelvis bone when using Body34?

Also, how are the body parts scaled to the appropriate length? When running Livelink in real time to animate people of different heights, is the avatar's height scaled accordingly, or is the skeleton data fitted to an avatar of a fixed height, for example 1.80 meters?