Hi all,

We need to achieve the lowest possible latency for our application. The first goal is to send the ZED X streams through a local network and display them on a monitor.

The setup I’m currently using:

- ZED X camera

- ZED Box Orin™ NX 8GB

- Asus ZenScreen (used as ZED Box monitor)

- PoE+ switch

- Personal computer, GTX 1660Ti

For the first two tests, I kept the pipeline as simple as possible by displaying the left stream directly on the screen connected to the ZED Box.

Test 1

nvargus nv3dsink

pipeline : gst-launch-1.0 nvarguscamerasrc sensor-id=0 sensor-mode=0 ! "video/x-raw(memory:NVMM),width=1920,height=1200,framerate=60/1,format=NV12" ! nv3dsink

latency 5 : 84ms

latency 4 : 84ms

latency 3 : 84ms

latency 2 : 83ms

latency 1 : 84ms

latency 0 : 84ms

mean : 84ms

Test 2

zedsrc nv3dsink

pipeline : gst-launch-1.0 zedsrc camera-fps=60 stream-type=0 camera-resolution=2 ! nv3dsink

latency 5 : 100ms

latency 4 : 100ms

latency 3 : 116ms

latency 2 : 116ms

latency 1 : 100ms

latency 0 : 100ms

mean : 105.3ms

For the next two tests, I sent the stream as H.264 over the local network.

Test 3

nvargus udp autovideosink

pipeline jetson : gst-launch-1.0 nvarguscamerasrc sensor-id=0 sensor-mode=0 ! "video/x-raw(memory:NVMM),width=1920,height=1200,framerate=60/1,format=NV12" ! nvv4l2h264enc maxperf-enable=true insert-sps-pps=true bitrate=8000000 ! rtph264pay ! udpsink host=10.1.99.255 port=20000 sync=false async=false max-lateness=1000

pipeline pc : gst-launch-1.0 udpsrc port=20000 ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! h264parse ! avdec_h264 ! autovideosink

latency 4 : 105ms

latency 3 : 107ms

latency 2 : 110ms

latency 1 : 107ms

latency 0 : 106ms

mean : 107ms

Test 4

zedsrc udp autovideosink

pipeline jetson : gst-launch-1.0 zedsrc camera-fps=60 stream-type=0 camera-resolution=2 ! videoconvert ! "video/x-raw,format=NV12" ! nvvidconv ! nvv4l2h264enc maxperf-enable=true insert-sps-pps=true bitrate=8000000 ! rtph264pay ! udpsink host=10.1.99.255 port=20000 sync=false async=false max-lateness=1000

pipeline pc : gst-launch-1.0 udpsrc port=20000 ! application/x-rtp,encoding-name=H264,payload=96 ! rtph264depay ! h264parse ! avdec_h264 ! autovideosink

latency 6 : 156ms

latency 5 : 173ms

latency 4 : 173ms

latency 3 : 158ms

latency 2 : 154ms

latency 1 : 143ms

latency 0 : 170ms

mean : 161ms

The last test streams directly with the ZED SDK (camera streaming sample apps). It is probably less relevant, as both streams are sent, unlike in the previous tests.

Test 5

zed stream

jetson : camera sender sample app

pc : camera receiver sample app

latency 4 : 233ms

latency 3 : 236ms

latency 2 : 234ms

latency 1 : 233ms

latency 0 : 202ms

mean : 227.6ms
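As a cross-check, the means above can be recomputed from the individual readings. The sketch below is only a convenience script, not part of the test setup: the dictionary labels and the `summarize` helper are names made up for illustration. It also expresses each mean in 60 Hz frame periods, since all the screens run at 60 Hz. One frame period is about 16.7 ms, which is likely why the Test 2 readings jump between 100 ms and 116 ms.

```python
from statistics import mean

# Latency readings in milliseconds, copied from the five tests above.
# The labels are illustrative names, not identifiers from any tool.
readings_ms = {
    "Test 1: nvargus -> nv3dsink": [84, 84, 84, 83, 84, 84],
    "Test 2: zedsrc -> nv3dsink": [100, 100, 116, 116, 100, 100],
    "Test 3: nvargus -> udp": [105, 107, 110, 107, 106],
    "Test 4: zedsrc -> udp": [156, 173, 173, 158, 154, 143, 170],
    "Test 5: ZED SDK streaming": [233, 236, 234, 233, 202],
}

FRAME_MS = 1000 / 60  # one refresh period of a 60 Hz screen, about 16.7 ms


def summarize(samples):
    """Return (mean in ms, mean expressed in 60 Hz frame periods)."""
    avg = mean(samples)
    return avg, avg / FRAME_MS


for name, samples in readings_ms.items():
    avg, frames = summarize(samples)
    print(f"{name}: mean {avg:.1f} ms, about {frames:.1f} frames at 60 Hz")
```

Expressing the results in frames makes it easier to see that differences of a few milliseconds between readings are below what filming a 60 Hz screen can reliably resolve.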

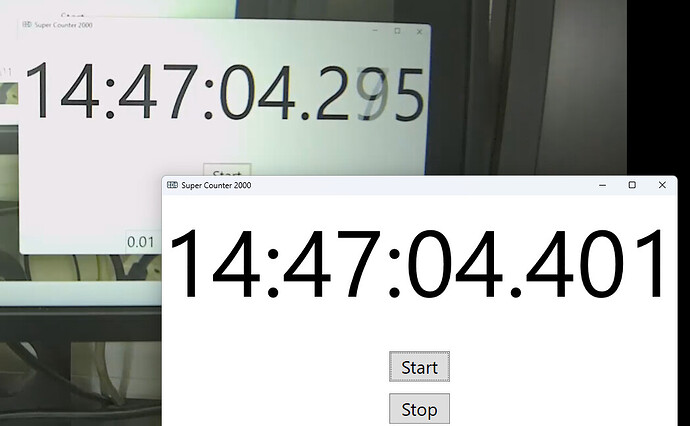

To measure the latency, I simply filmed the different screens (all 60Hz) this way :

For our application, latency above 150 ms becomes really annoying, since the goal is to drive a machine in an FPV-like way.
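To make the comparison against that 150 ms limit explicit, here is a small sketch that checks each measured mean against the budget and computes how much latency zedsrc adds over nvarguscamerasrc in the local and UDP cases. The dictionary keys and the helpers `within_budget` and `extra_ms` are hypothetical names used only for this illustration; the numbers are the means reported in the tests.

```python
# Mean latencies in milliseconds, as reported for each test in this post.
# The keys are illustrative labels only.
mean_ms = {
    "nvargus_local": 84.0,    # Test 1
    "zedsrc_local": 105.3,    # Test 2
    "nvargus_udp": 107.0,     # Test 3
    "zedsrc_udp": 161.0,      # Test 4
    "zed_sdk_stream": 227.6,  # Test 5
}

BUDGET_MS = 150.0  # above this, the delay becomes annoying for FPV-style driving


def within_budget(latency_ms, budget_ms=BUDGET_MS):
    """True when a measured latency does not exceed the budget."""
    return latency_ms <= budget_ms


def extra_ms(slower, faster):
    """Difference between two mean latencies, rounded to 0.1 ms."""
    return round(mean_ms[slower] - mean_ms[faster], 1)


for name, latency in mean_ms.items():
    verdict = "within" if within_budget(latency) else "over"
    print(f"{name}: {latency:.1f} ms ({verdict} the {BUDGET_MS:.0f} ms budget)")

print("zedsrc vs nvargus, local display:", extra_ms("zedsrc_local", "nvargus_local"), "ms")
print("zedsrc vs nvargus, over UDP:", extra_ms("zedsrc_udp", "nvargus_udp"), "ms")
```

With these figures, only the two zedsrc/ZED SDK network setups (Tests 4 and 5) exceed the budget, and the gap between zedsrc and nvarguscamerasrc is larger over UDP than on the local display.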

I found a related topic here: “zedsrc low framerate with left+depth & object detection GST pipeline on Jetson Nano”, Issue #16 in stereolabs/zed-gstreamer on GitHub.

From my understanding, the ZED SDK has to convert the video to BGRA format and hold it in RAM in order to “perform all the elaborations”, whereas nvarguscamerasrc can work directly in NVMM memory in NV12 format, which speeds up the pipeline considerably.

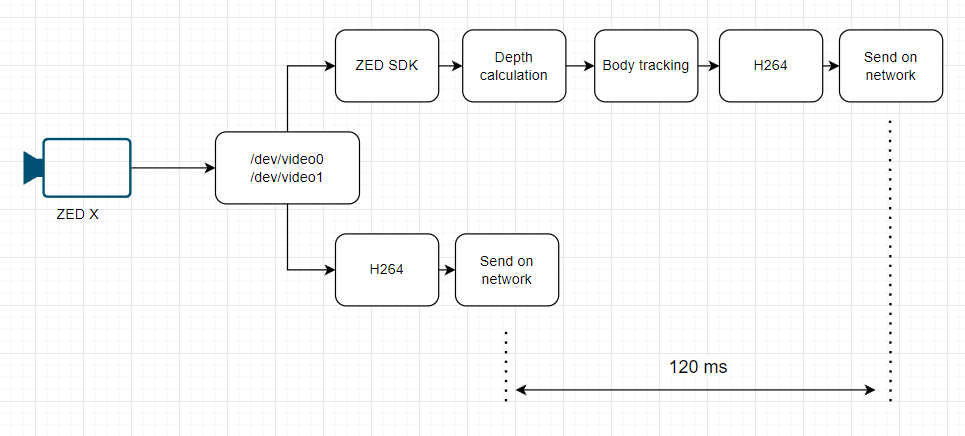

At first glance, we could use the nvargus plugin. However, in the future we would like to use all the features this wonderful SDK offers, in order to assist the operator during driving sessions.

Is there a way to get the best of both worlds? For example, a way to retrieve the raw streams before the ZED SDK performs all the elaborations?

I hope the following basic diagram summarizes what I mean:

Getting depth and other information with 200-300 ms latency wouldn’t be an issue; the problem is only the operator’s real-time perception.

Thanks a lot for your time and your work.