We are using the Global localization example scripts from https://github.com/stereolabs/zed-sdk/tree/master/global%20localization. We have tested both the c++ and python versions recording with the camera mounted on the hood of a car at low speed. The fact is that it never seems to calibrate correctly and when processing the files later on the computer the results are far from being correct.

What are the best practices to correctly make a recording so that Global localization is processed with satisfactory results?

Hi @a.martin

Welcome to the Stereolabs community.

Please provide more information concerning the configuration you are using:

- Camera model

- ZED SDK version

- GNSS Sensor

- Software configuration

- any other useful information

- Camera model : ZED-X , running on a ZED Box Orin™ NX 16GB

- ZED SDK version : 4.1.2

- GNSS Sensor : the one included with the bundle of the ZED Box Orin (GPS RTK U-blox ZED-F9P)

- Software configuration : Ubuntu 20.04.5 LTS in the ZED Box for recording, Windows 11 for postprocesing

- any other useful information : I have tryed to attach the zed diagnosis tool log but as a new user I still cannot attach files. Everything seems correct but if there is anything in particular you need to know I can copy/paste

Hi @a.martin

thank you for the information.

This can seem like a question with an obvious reply, but I must be sure that everything is correctly configured:

did you connect the GNSS antenna to the ZED Box port?

Of course. The antenna is connected and we start the GPSD service, we check that xgps shows the satellite constellation and we are able to extract the GPS track from the svo2 file.

Hi @a.martin

thank you for all the information.

My colleague @TanguyHardelin will provide you with the required support.

Hi @a.martin,

To assist you better, could you please provide the recorded SVO2 file that includes GNSS data? Additionally, it would be helpful if you could specify any errors or warnings that are displayed when you replay the recorded data.

Thank you,

Tanguy

Of course. How can I send you the file? Because it is just over 500MB

Using the example recording scripts from both c++ and python we never managed to calibrate the fusion correctly. In c++ we always have the “GNSS mode” as “FIX 3D” in green and “GNSS Status” in “RTK_FIX” in green, but “GNSS Fusion Status” in “Calibration in progress” in red

We have tested the “playback” python script offline and it always returns “Positional Tracking Status: Visual Inertial” in red

Hi @a.martin,

Thank you for your response. If possible, could you please provide a Google Drive link or any other downloadable link to access the SVO2 file with GNSS data?

Regarding the calibration process, you can enable the calibration STD information in the C++ playback sample by modifying the code as follows:

if (1) {

// GNSS coordinate system to ZED coordinate system is not initialized yet

// The initialization involves optimizing the fit between the ZED computed path and the GNSS computed path.

// To perform this calibration, you need to move your system by the distance specified in positional_tracking_fusion_parameters.gnss_initialization_distance.

float yaw_std;

sl::float3 position_std;

fusion.getCurrentGNSSCalibrationSTD(yaw_std, position_std);

if (yaw_std != -1.f)

std::cout << "GNSS State: " << current_geopose_status << ": calibration uncertainty yaw_std " << yaw_std << " rad position_std " << position_std[0] << " m, " << position_std[1] << " m, " << position_std[2] << " m\t\t\t\r";

}

This modification will allow you to obtain information about the calibration uncertainty, including yaw_std (yaw standard deviation) and position_std (position standard deviation) in meters.

Regards,

Tanguy

Thank you very much.

Is there a way to send you the link privately?

We will try to see how to adjust those values.

On the other hand, and although it never seems to calibrate, repeating the process, sometimes when exporting it seems to have done the complete fusion (Most of the time only a small piece). When doing spatial mapping and exporting the results, the camera pose is exported following a path matching the fused_position.kml file, but the geometry obj does not match, it uses the camera pose without fusion to generate the geometry.

Is there a way to send you the link privately?

By private message it will be good enough.

repeating the process, sometimes when exporting it seems to have done the complete fusion

The GNSS / VIO calibration involves moving the camera and GNSS over a certain distance. While in motion, a calibration uncertainty is computed based on the alignment between VIO and GNSS data. This uncertainty reflects how well the calibration values are constrained by both VIO and GNSS. The calibration process continues until the uncertainty reaches a sufficiently low level. The if(1) that I mentionned above plot the current uncertainty reached by the calibration.

The threshold for the “low level” is determined by the target_yaw_uncertainty parameter for yaw calibration and the target_translation_uncertainty parameter for translation calibration. The yaw calibration uncertainty criteria is mandatory, while the translation criteria is optional and can be enabled by using the enable_translation_uncertainty_target parameter.

This may explain why only a part of your trajectory is exported by the fusion module, as it uses the initial position for the GNSS/VIO calibration. To reduce the distance required for calibration, you can increase the target_yaw_uncertainty parameter. If the initial calibration is not perfect, it will be refined during subsequent fusion. However, be cautious not to set a too lenient value for target_yaw_uncertainty. If the calibration results are too poor, the system won’t be able to perform any fusion, and it will reset to the calibration stage.

I hope these explanations clarify the process for you. If you would like further analysis, I am waiting your SVO file.

Regards,

Tanguy

Thank you very much for taking the time to test the file.

I will continue here, avoiding location references, as it may be beneficial for the rest of the community.

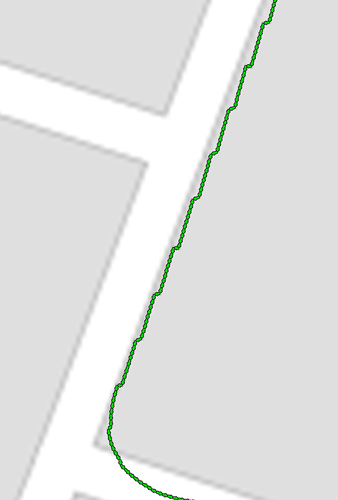

In the tests we have, the resulting GPS has continuous position jumps throughout the entire route. We have tried changing some settings but without achieving a smooth result.

It becomes more evident when we import the camera pose into Maya, since obvious jumps in height can be seen.

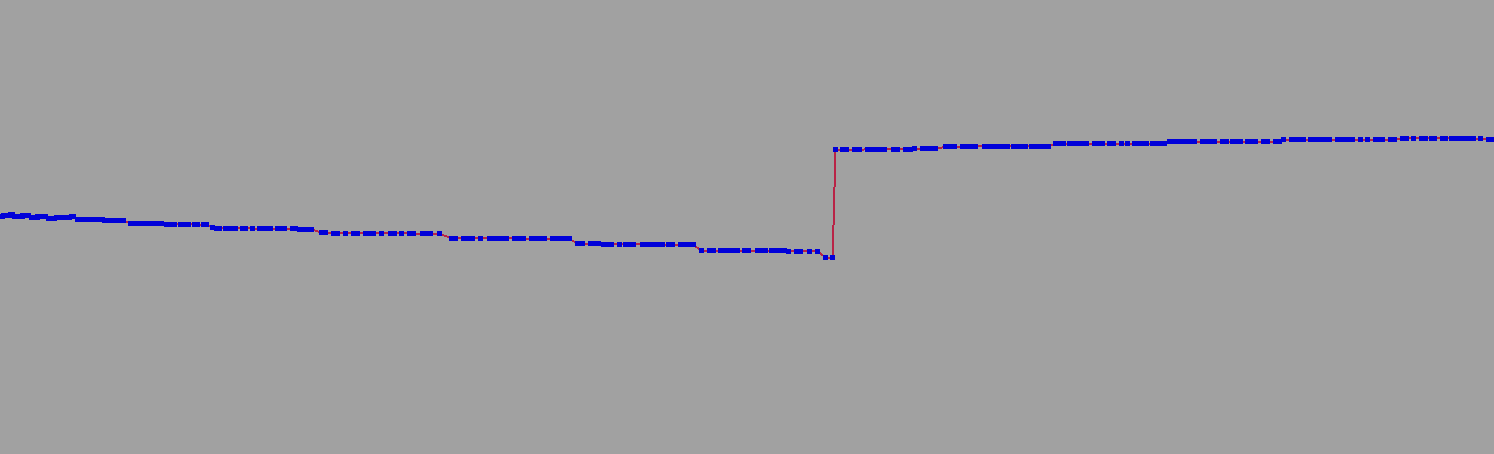

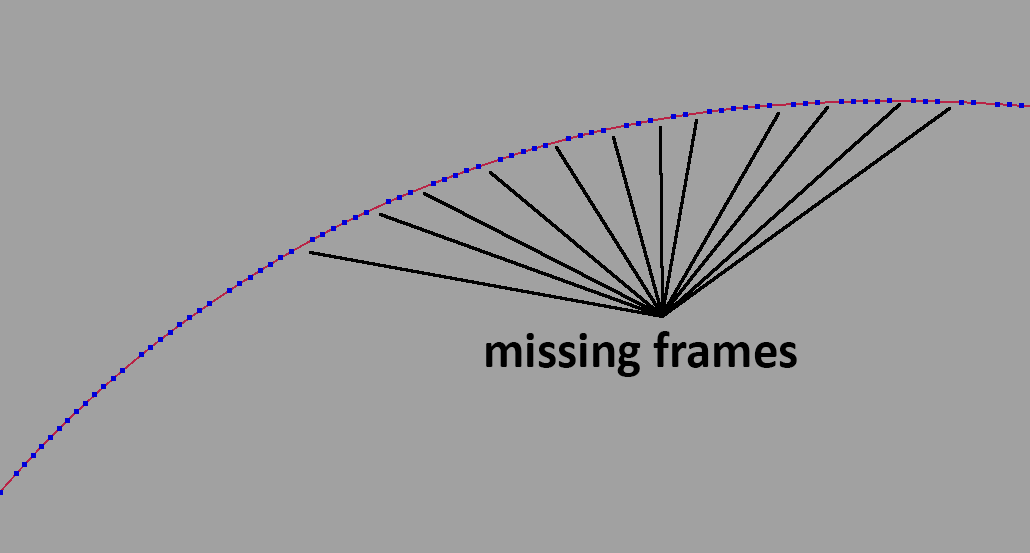

You can also see that despite the svo being recorded at 30fps in h265 lossy there are continuous frame drops throughout the journey. They can also be seen when playing the file in ZED Explorer

Just in case, we have also exported the image sequence from both cameras and processed it in Reality Capture, obtaining the same results with missing frames.

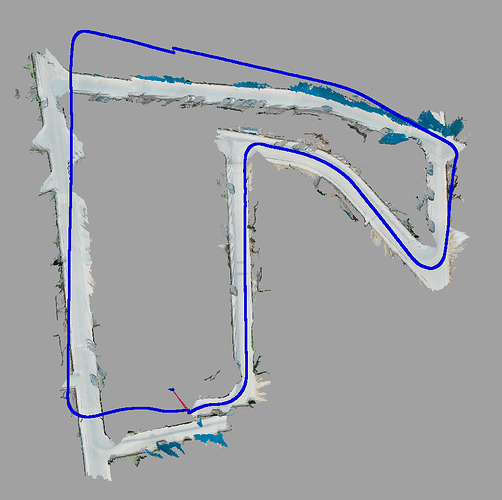

Returning to the topic of Spatial Mapping, we have not been able to get the geometry to match the calculated trajectory. You can see that at first it coincides, but as the recording progresses it becomes separated. The script we use is a mixture of several examples from the documentation.

from display.generic_display import GenericDisplay

from gnss_replay import GNSSReplay

import sys

import pyzed.sl as sl

from tkinter import filedialog

import os

import exporter.KMLExporter as export

import argparse

import cv2

"""

OPTIONS

"""

smooth_track = False

depth_mode = "NEURAL_PLUS" # It's necesary for spatial mapping NEURAL_PLUS / NEURAL / ULTRA / QUALITY / PERFORMANCE

depth_min_distance = 1.5

depth_max_distance = 20

depth_stabilization = 4 #0-100. 0 Disabled. Default 1

tracking_mode = "GEN_2" #0 for STANDAR, 1 for QUALITY

runtime_enable_fill_mode = False

runtime_confidence_threshold = 95

runtime_texture_confidence_threshold = 100

mapping_max_memory_usage = 8000

mapping_range_meter = 100 # Set maximum depth mapping range

mapping_resolution_meter = 1.00

#opt_imu_only = False #Not implemented / No se va a implentar en princpio porque solo saca la información de

#los sensores en bruto, no la pose de camara

"""

Init Variables

"""

text_translation = ""

text_rotation = ""

"""

Input/Output

"""

#Ask for the svo file

input_file = filedialog.askopenfilename(

title="Seleccionar archivo svo",

filetypes=(("Archivo SVO",["*.svo","*.svo2"]),("Todos","*.*"))

)

#Sets de addition to the name

name_options = ""

if smooth_track:

name_options = name_options + "_SM"

name_options = name_options + "_DM_" + str(depth_mode) + "_MinDis_" + str(depth_min_distance) + "_MaxDis_" + str(depth_max_distance) + "_Stb_" + str(depth_stabilization)

name_options = name_options + "_TM_" + str(tracking_mode) + "_Fill_" + str(runtime_enable_fill_mode) + "_Thr_" + str(runtime_confidence_threshold)

name_options = name_options + "_TxThr_" + str(runtime_texture_confidence_threshold) + "_rg_" + str(mapping_range_meter) + "_res_" + str(mapping_resolution_meter)

print(name_options)

#Divides into folder an file with extension

input_folder, input_file_name_w_ext = os.path.split(input_file)

#Divides the file into name and extesion, only the file name is needed

input_file_name, input_file_ext = os.path.splitext(input_file_name_w_ext)

#Generates the csv

output_file_csv_name = (input_folder+'/'+input_file_name+name_options+'.csv')

output_file_csv = open(output_file_csv_name, 'w')

#Names the obj

output_file_obj = (input_folder+'/'+input_file_name+name_options+'.obj')

#Names de area file

output_file_area = (input_folder+'/'+input_file_name+name_options+'.area')

"""

INIT Camera

"""

# Create a Camera object

zed = sl.Camera()

# Create a InitParameters object and set configuration parameters

py_translation = sl.Translation()

text_translation = ""

text_rotation = ""

init_params = sl.InitParameters()

init_params.sdk_verbose = 1

log_path = "open_log.txt"

init_params.sdk_verbose_log_file = log_path

init_params.camera_resolution = sl.RESOLUTION.AUTO # Use HD720 or HD1200 video mode (default fps: 60)

init_params.coordinate_system = sl.COORDINATE_SYSTEM.RIGHT_HANDED_Y_UP # Use a right-handed Y-up coordinate system

init_params.coordinate_units = sl.UNIT.METER # Set units in meters

init_params.set_from_svo_file(input_file) # set the camera to use an SVO file

#Depth mode

if depth_mode == "NEURAL": # It's necesary for spatial mapping NEURAL / ULTRA / QUALITY / PERFORMANCE

init_params.depth_mode = sl.DEPTH_MODE.NEURAL

print("Test_NEURAL")

elif depth_mode == "NEURAL_PLUS":

init_params.depth_mode = sl.DEPTH_MODE.NEURAL_PLUS

print("Test_NEURAL_PLUS")

elif depth_mode == "ULTRA":

init_params.depth_mode = sl.DEPTH_MODE.ULTRA

print("Test_ULTRA")

elif depth_mode == "QUALITY":

init_params.depth_mode = sl.DEPTH_MODE.QUALITY

print("Test_QUALITY")

elif depth_mode == "PERFORMANCE":

init_params.depth_mode = sl.DEPTH_MODE.PERFORMANCE

print("Test_PERFORMANCE")

else:

init_params.depth_mode = sl.DEPTH_MODE.ULTRA

print("Test_DEFAULT_ULTRA")

init_params.depth_maximum_distance = depth_max_distance

init_params.depth_minimum_distance = depth_min_distance

init_params.depth_stabilization = depth_stabilization

# Open the camera

err = zed.open(init_params)

if err != sl.ERROR_CODE.SUCCESS:

print("Camera Open : " + repr(err) + ". Exit program.")

exit()

# Init Runtime parameters

runtime_parameters = sl.RuntimeParameters()

runtime_parameters.enable_fill_mode = runtime_enable_fill_mode

runtime_parameters.confidence_threshold = runtime_confidence_threshold

runtime_parameters.texture_confidence_threshold = runtime_texture_confidence_threshold

# Enable positional tracking with default parameters

py_transform = sl.Transform() # First create a Transform object for TrackingParameters object

tracking_parameters = sl.PositionalTrackingParameters(_init_pos=py_transform)

if tracking_mode == "GEN_2":

mode = sl.POSITIONAL_TRACKING_MODE.GEN_2

print("Tracking GEN2")

elif tracking_mode == "GEN_1":

mode = sl.POSITIONAL_TRACKING_MODE.GEN_1

print("Tracking GEN1")

else:

print ("Traking erroneo.GEN_1 por defecto")

tracking_parameters.enable_imu_fusion = True

tracking_parameters.enable_area_memory = True

if smooth_track:

tracking_parameters.enable_pose_smoothing = True

else:

tracking_parameters.enable_pose_smoothing = False

err = zed.enable_positional_tracking(tracking_parameters)

if err != sl.ERROR_CODE.SUCCESS:

print("Enable positional tracking : " + repr(err) + ". Exit program.")

zed.close()

exit()

# Display

display_resolution = sl.Resolution(1280, 720)

left_img = sl.Mat()

# Create Fusion object:

fusion = sl.Fusion()

init_fusion_param = sl.InitFusionParameters()

init_fusion_param.coordinate_units = sl.UNIT.METER

init_fusion_param.coordinate_system = sl.COORDINATE_SYSTEM.RIGHT_HANDED_Y_UP

init_fusion_param.verbose = True

fusion_init_code = fusion.init(init_fusion_param)

if fusion_init_code != sl.FUSION_ERROR_CODE.SUCCESS:

print("[ZED][ERROR] Failed to initialize fusion :" + repr(fusion_init_code) + ". Exit program")

exit()

# Enable odometry publishing:

configuration = sl.CommunicationParameters()

zed.start_publishing(configuration)

uuid = sl.CameraIdentifier(zed.get_camera_information().serial_number)

fusion.subscribe(uuid, configuration, sl.Transform(0, 0, 0))

# Enable positional tracking for Fusion object

positional_tracking_fusion_parameters = sl.PositionalTrackingFusionParameters()

positional_tracking_fusion_parameters.enable_GNSS_fusion = True

gnss_calibration_parameters = sl.GNSSCalibrationParameters()

gnss_calibration_parameters.target_yaw_uncertainty = 2

gnss_calibration_parameters.enable_translation_uncertainty_target = True

gnss_calibration_parameters.target_translation_uncertainty = 10

gnss_calibration_parameters.enable_reinitialization = True

gnss_calibration_parameters.gnss_vio_reinit_threshold = 20

positional_tracking_fusion_parameters.gnss_calibration_parameters = gnss_calibration_parameters

fusion.enable_positionnal_tracking(positional_tracking_fusion_parameters)

# Setup viewer:

py_translation = sl.Translation()

# Setup viewer:

viewer = GenericDisplay()

viewer.init(zed.get_camera_information().camera_model)

print("Start grabbing data ...")

gnss_replay = GNSSReplay(None, zed)

input_gnss_sync = sl.GNSSData()

"""

Functions

"""

def progress_bar(percent_done, bar_length=50):

#Display progress bar

done_length = int(bar_length * percent_done / 100)

bar = '=' * done_length + '-' * (bar_length - done_length)

sys.stdout.write('\r[%s] %i%s' % (bar, percent_done, '%'))

sys.stdout.flush()

def main():

time_offset = None

# Enable spatial mapping

mapping_parameters = sl.SpatialMappingParameters(map_type=sl.SPATIAL_MAP_TYPE.MESH)

mapping_parameters.max_memory_usage = mapping_max_memory_usage

mapping_parameters.range_meter = 100 # Set maximum depth mapping range

mapping_parameters.resolution_meter = 1.00 # Set resolution

err = zed.enable_spatial_mapping(mapping_parameters)

if err != sl.ERROR_CODE.SUCCESS:

print("Enable spatial mapping : " + repr(err) + ". Exit program.")

zed.close()

exit(1)

mesh = sl.Mesh() # Create a Mesh object

# INITIALIZE CSV OUTPUT FILE

output_file_csv.write('tx, ty, tz, rx, ry, rz \n')

# Track the camera position during the longitude of the video

i = 0

zed_sensors = sl.SensorsData()

while viewer.isAvailable():

# get the odometry information

if zed.grab() == sl.ERROR_CODE.SUCCESS:

zed_pose = sl.Pose()

zed.get_position(zed_pose, sl.REFERENCE_FRAME.WORLD)

zed.retrieve_image(left_img, sl.VIEW.LEFT, sl.MEM.CPU, display_resolution)

cv2.imshow("left", left_img.numpy())

cv2.waitKey(10)

elif zed.grab() == sl.ERROR_CODE.END_OF_SVOFILE_REACHED:

print("\nExtracting Mesh...\n")

err = zed.extract_whole_spatial_map(mesh)

print(repr(err))

print("Filtering Mesh...\n")

mesh.filter(sl.MeshFilterParameters()) # Filter the mesh (remove unnecessary vertices and faces)

print("Saving Mesh...\n")

mesh.save(output_file_obj)

# Disable tracking and mapping and close the camera

zed.disable_spatial_mapping()

fusion.close()

zed.close()

break

# print("End of SVO file.")

# fusion.close()

# zed.close()

# exit()

status, input_gnss = gnss_replay.grab(zed_pose.timestamp.get_nanoseconds())

if status == sl.FUSION_ERROR_CODE.SUCCESS:

ingest_error = fusion.ingest_gnss_data(input_gnss)

latitude, longitude, altitude = input_gnss.get_coordinates(False)

coordinates = {

"latitude": latitude,

"longitude": longitude,

"altitude": altitude,

}

export.saveKMLData("raw_gnss.kml", coordinates)

# Fusion is asynchronous and needs synchronization. Sometime GNSSData comes before camera data raising "NO_NEW_DATA_AVAILABLE" error

# This does not necessary means that fusion doesn't work but that no camera data were presents for the gnss timestamp when you ingested the data.

if ingest_error != sl.FUSION_ERROR_CODE.SUCCESS and ingest_error != sl.FUSION_ERROR_CODE.NO_NEW_DATA_AVAILABLE:

print("Ingest error occurred when ingesting GNSSData: ", ingest_error)

# get the fused position

if fusion.process() == sl.FUSION_ERROR_CODE.SUCCESS:

fused_position = sl.Pose()

# Get position into the ZED CAMERA coordinate system:

current_state = fusion.get_position(fused_position)

if current_state == sl.POSITIONAL_TRACKING_STATE.OK:

current_state = fusion.get_fused_positional_tracking_status().tracking_fusion_status

rotation = fused_position.get_rotation_vector()

translation = fused_position.get_translation(py_translation)

text_rotation = str((round(rotation[0], 2), round(rotation[1], 2), round(rotation[2], 2)))

text_translation = str(

(round(translation.get()[0], 2), round(translation.get()[1], 2), round(translation.get()[2], 2)))

viewer.updatePoseData(fused_position.pose_data(), text_translation, text_rotation, current_state)

fusion.get_current_gnss_data(input_gnss_sync)

# Display it on the Live Server

viewer.updateRawGeoPoseData(input_gnss_sync)

# Get position into the GNSS coordinate system - this needs a initialization between CAMERA

# and GNSS. When the initialization is finish the getGeoPose will return sl::POSITIONAL_TRACKING_STATE::OK

current_geopose = sl.GeoPose()

current_geopose_satus = fusion.get_geo_pose(current_geopose)

if current_geopose_satus == sl.GNSS_FUSION_STATUS.OK:

# Display it on the Live Server

viewer.updateGeoPoseData(current_geopose, zed.get_timestamp(sl.TIME_REFERENCE.CURRENT))

_, yaw_std, position_std = fusion.get_current_gnss_calibration_std()

if yaw_std != -1:

print("GNSS State : ", current_geopose_satus, " : calibration uncertainty yaw_std ", yaw_std,

" rd position_std", position_std[0], " m,", position_std[1], " m,", position_std[2],

end=' m\r')

# # Get rotation and translation and displays it

# rotation = zed_pose.get_rotation_vector()

# translation = zed_pose.get_translation()

# rotation_euler = zed_pose.get_euler_angles()

# Get rotation and translation and displays it

rotation = fused_position.get_rotation_vector()

translation = fused_position.get_translation()

rotation_euler = fused_position.get_euler_angles()

if time_offset == None: # Get the first timestamp as an offset to start in 0

time_offset = zed.get_timestamp(sl.TIME_REFERENCE.IMAGE).get_milliseconds()

timestamp = zed.get_timestamp(sl.TIME_REFERENCE.IMAGE).get_milliseconds()

timestamp = timestamp - time_offset

output_file_csv.write(str(translation.get()[0]) + ", " + str(translation.get()[1]) + ", " + str(

translation.get()[2]) + ", " + str(rotation_euler[0]) + ", " + str(

rotation_euler[1]) + ", " + str(rotation_euler[2]) + ", " + str(timestamp) + "\n")

mapping_state = zed.get_spatial_mapping_state()

"""

else :

GNSS coordinate system to ZED coordinate system is not initialized yet

The initialization between the coordinates system is basically an optimization problem that

Try to fit the ZED computed path with the GNSS computed path. In order to do it just move

your system and wait that uncertainty come bellow uncertainty threshold you set up in your

initialization parameters.

"""

# Extract, filter and save the mesh in an obj file

print("\nExtracting Mesh...\n")

err = zed.extract_whole_spatial_map(mesh)

print(repr(err))

print("Filtering Mesh...\n")

mesh.filter(sl.MeshFilterParameters()) # Filter the mesh (remove unnecessary vertices and faces)

print("Saving Mesh...\n")

mesh.save(output_file_obj)

# Disable tracking and mapping and close the camera

zed.disable_spatial_mapping()

fusion.close()

zed.close()

"""

EXEC_MAIN

"""

main()

Regarding the hardware we use, we only use the ZED Box Orin to record and repair the svo2 files, the rest of the process is carried out in Windows

Hello,

I apologize for the delay in my response. It appears that your multiple issues are not related, so I will address each of them separately.

In the tests we have, the resulting GPS has continuous position jumps throughout the entire route. We have tried changing some settings but without achieving a smooth result.

Indeed, small jumps in position can occur when the VIO position is being corrected by GNSS data. This situation is more likely to happen when either the VIO or GNSS system provides poor results. Several factors can contribute to these situations, including:

For VIO:

- Presence of high dynamic objects in the scene.

- Lack of defined regions of interest, especially when some part of image do not move.

- Mounting the camera on a highly vibrating platform.

- Poor calibration of the VIO system (Not supposed to happen).

For GNSS:

- Presence of multi-path effects, such as in urban environments with tall buildings.

- Low number of tracked satellites.

Efforts are underway to address these issues, but specific dates for their resolution cannot be provided at this time.

You can also see that despite the svo being recorded at 30fps in h265 lossy there are continuous frame drops throughout the journey. They can also be seen when playing the file in ZED Explorer

This issue is indeed known. Occasionally, when the system is overloaded, a few frames may be dropped. This typically occurs when the hardware is unable to complete all the operations requested by the .grab() function within the timeframe of a single frame, resulting in frame drops.

To mitigate this, a feature is planned for the next release that will decouple the recording frames per second (FPS) from the FPS of the .grab() function. In the meantime, have you tried setting the depth mode to NONE and disabling both positional tracking and fusion? Doing so can alleviate system overload and should allow you to record all frames without drops.

Additionally, have you set your ZEDBox to its maximum performance mode? This can help optimize the system’s performance and reduce the likelihood of frame drops caused by overload.

Returning to the topic of Spatial Mapping, we have not been able to get the geometry to match the calculated trajectory.

I have a question regarding your setup. Are you displaying the mesh computed by the sl::Camera object along with the position computed by the sl::Fusion object? If you are, please note that these two are not correlated. In order to project your mesh into a geo-referenced coordinate system, you can obtain the transformation between the ZED coordinate system and the Geo coordinate system using the get_geo_tracking_calibration function. Additionally, have you enabled GNSS rolling calibration in your tests?

I hope this answers your questions.

Regards,

Tanguy

Thank you very much for the answer,

As for the drop frames, it was something that we noticed after the update that added the svo2 format. We have tried recording with Zed Explorer in both 4.0.8 and 4.1.2 and the performance is very different:

4.0.8 - We can record up to 60fps with the lossless codec, except with h264 where the performance is somewhat worse.

4.1.2 - In lossless we achieve less than 20 fps, and with lossy it is close to 30 but with occasional drop frames.

Really our intention is only to use the Zed Box to record the svos and do all the offline processing on another more capable computer.

I’m afraid I don’t know the exact answer nor how to implement “get_geo_tracking_calibration”. I would be very grateful if you had an example that could guide me a little on how to generate the mesh with the camera pose resulting from the fusion.

Hello,

As for the drop frames, it was something that we noticed after the update that added the svo2 format. We have tried recording with Zed Explorer in both 4.0.8 and 4.1.2 and the performance is very different:

4.0.8 - We can record up to 60fps with the lossless codec, except with h264 where the performance is somewhat worse.

Thank you for reporting this. Indeed the 4.1 release version introduce the new SVO2 file format. We will investigate this further. In the mean-time, you could return to the old SVO version by setting this following environment variable:

export ZED_SDK_SVO_VERSION=1

The primary distinction between SVO1 and SVO2 is the inclusion of high-frequency IMU data storage capability, along with the ability to store custom data. However, even with SVO1, you can still utilize geo-tracking fusion by storing your GNSS data in JSON format, as demonstrated in the 4.0.8 recording sample. Please be aware that if you are using Tracking Gen2 with SVO1, you will need to disable the IMU fusion since Tracking Gen2 needs high-frequency IMU data.

I’m afraid I don’t know the exact answer nor how to implement “get_geo_tracking_calibration”.

The get_geo_tracking_calibration function in the Fusion class of the SDK allows you to obtain the calibration matrix between the sl::Camera reference frame and the geo-world sl::Fusion reference frame. This calibration is useful if you want to convert the trajectory from the camera’s reference frame to the Fusion reference frame.

To perform the conversion of the camera’s pose to the Fusion’s GeoPose (latitude/longitude), you can also use the camera_to_geo function, which is also available in the Fusion class. This function converts the sl::Pose from the camera’s reference frame to the sl::GeoPose in the Fusion’s coordinate system.

Once you have the GeoPose object, you can further convert it to the ECEF (Earth-Centered, Earth-Fixed) or ENU (East-North-Up) coordinate system, depending on your specific requirements. For the conversion from latitude/longitude to ECEF, you can utilize the functions provided by the GeoConverter class in the SDK.

However, if you need to convert latitude/longitude to ENU coordinates, the GeoConverter class does not provide a direct function for that. In such cases, you can consider using external libraries like pymap3d for Python or rtklib for C++, which offer functionality for converting between latitude/longitude and ENU coordinates.

Regards,

Tanguy

Yes, we know, but since we did not notice any notable difference in performance between version 4.1, 4.1.1 and 4.1.2 we only did the tests comparing 4.0.8 and 4.1.2

We are trying to achieve the most precise camera pose possible and we assume that the high frequency IMU could help us. In any case, while the problems with svo2 are solved (corrupt files that almost always have to be repaired, frame drops…) we will have to test which version works best for us.

Spatial Mapping

Possibly I’m getting it wrong.

What I don’t really understand how to do is that when executing:

mapping_state = zed.get_spatial_mapping_state()

How to tell “zed.get_spatial_mapping_state()” to use the camera pose obtained from the fusion.Because from what I understand from the screenshot is that it is using the pose calculated in VIO

It doesn’t seem like it’s a coordinate reference system problem.

I’ve been looking at SpatialMappingParameters and several other functions that seemed related, but I haven’t been able to find how to define which camera pose it uses.

Something similar to how when I take the camera values to save them in a .csv

fused_position = sl.Pose()

......

......

# Get rotation and translation and displays it

rotation = fused_position.get_rotation_vector()

translation = fused_position.get_translation()

rotation_euler = fused_position.get_euler_angles()

Yes, we know, but since we did not notice any notable difference in performance between version 4.1, 4.1.1 and 4.1.2 we only did the tests comparing 4.0.8 and 4.1.2

Yes indeed the 4.1.2 is the latest release, it solve a lot of GNSS and VIO issues, you should use this version.

How to tell “zed.get_spatial_mapping_state()” to use the camera pose obtained from the fusion.

Unfortunately you are right the spacial mapping is currently using the pose computed by sl::Camera. There currently no way to use this with fusion computed pose. What I suggested is to project your spatial map into fusion geo-reference thanks to computed calibration but indeed this do not feet your problems since you need accurate position.

Spatial mapping thanks to fusion position is on our development road-map. However, I am unable to provide a specific release version for this feature at the moment.

Regards,

Tanguy

Thank you very much for the response and clarifying our problems.

We will be awaiting new updates that allow us to continue investigating the use of the ZED