The goal:

I would like to use the ZED Unity plugin’s spatial mapping, inside-out positional tracking, and real-time plane detection alongside the Quest Pro’s features (eye tracking, hand tracking, body tracking, color passthrough, etc.). I do not need to render any camera images from the ZED camera (ZED 2 / ZED Mini). I only need it to re-create the real world as a mesh and to help with tracking. I would also like this to work with other VR headsets through OpenXR, with the app running on the computer rather than on the headset. I understand OpenXR is not currently supported, but given the details below, I’m wondering whether there is a workaround, since I only use specific features.

The problem:

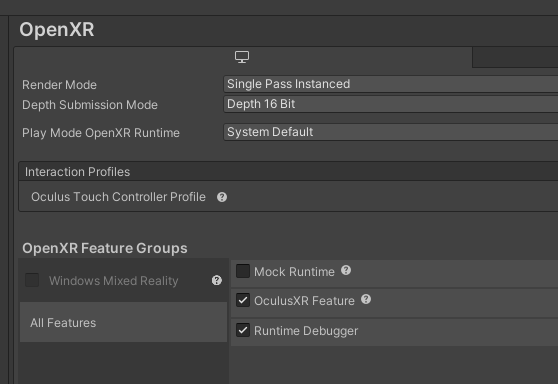

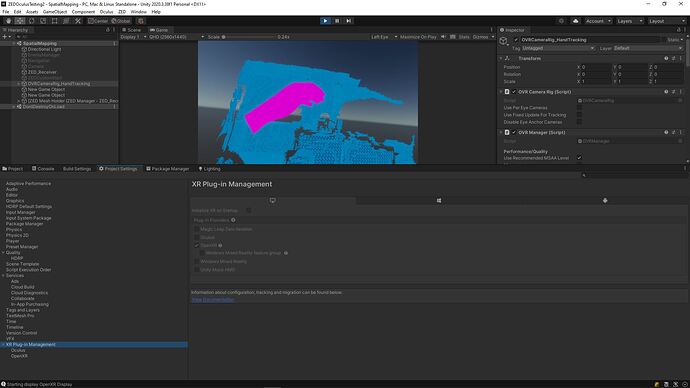

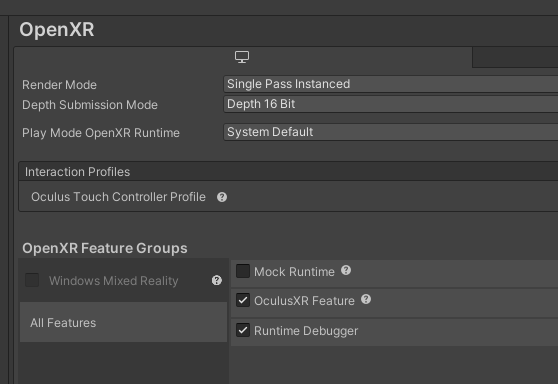

I have a Unity 2022.1.17f1 project with the ZED Unity plugin (3.8.0), Oculus Integration (46.0) (Interaction, Platform, SampleFramework, VR), and XR Plug-in Management installed. The scene contains a Directional Light, a standard Camera, the ZED_Rig_Mono prefab (with its camera disabled), and a GameObject holding a script that automatically initializes spatial mapping once the ZED camera is ready.

I am able to get generated meshes via spatial mapping when the “OculusXR Feature” is disabled.

When I enable the “OculusXR Feature,” the spatial mapping no longer creates meshes.

The question:

Is what I am trying to do achievable using OpenXR? Are there logging or troubleshooting steps I can use to pin down the exact issue so I can build my own workaround (for example, using a separate scene, a separate thread, or implementing spatial mapping as an OpenXR feature)?

Hi,

I’m sorry for the late reply.

Could you send a small sample that reproduces the issue to support@stereolabs.com?

I’ll test it on my side and see what is possible. As you said, the short answer is that the plugin is not compatible with OpenXR. However, I may be able to find a workaround.

Best,

Benjamin Vallon

Stereolabs Support

Sample emailed. Thank you!

FYI, I noticed that if I comment out the `OVRPlugin.UnityOpenXR.HookGetInstanceProcAddr(func)` statement in the `OculusXRFeature` class and simply return `new IntPtr()`, spatial mapping keeps working with the OpenXR plugin and the OculusXR Feature enabled. However, the game then does not run in the headset.

I am still trying to understand what the OVRPlugin.UnityOpenXR.HookGetInstanceProcAddr does and why this could be causing an issue.

I made some initial progress. The issue appears to be timing-related. If I initialize XR manually AFTER ZED spatial mapping has started (I initialize XR in the `OnMeshStarted` event handler), I’m able to get OpenXR and ZED spatial mapping working at the same time.
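The ordering described above (start ZED spatial mapping first, then initialize XR) can be sketched with Unity's XR Plug-in Management API for manually controlling XR loading. This is a minimal sketch, not the poster's actual code: the class name `DeferredXRStarter` is hypothetical, it assumes "Initialize XR on Startup" is unchecked under Project Settings > XR Plug-in Management, and it deliberately leaves out how `StartXR()` is wired to the ZED plugin's `OnMeshStarted` event, because that event's exact signature in plugin 3.8.0 is not shown in this thread.

```csharp
using System.Collections;
using UnityEngine;
using UnityEngine.XR.Management;

// Hypothetical helper: initializes the configured XR loader (e.g. OpenXR with the
// OculusXR Feature) only when StartXR() is called, instead of at application startup.
// Assumes "Initialize XR on Startup" is disabled in XR Plug-in Management.
public class DeferredXRStarter : MonoBehaviour
{
    private bool xrStarting;
    private bool xrRunning;

    // Call this from your existing OnMeshStarted handler (wiring not shown here).
    public void StartXR()
    {
        if (xrStarting || xrRunning) return;
        xrStarting = true;
        StartCoroutine(StartXRRoutine());
    }

    private IEnumerator StartXRRoutine()
    {
        if (XRGeneralSettings.Instance == null)
        {
            Debug.LogError("XR General Settings not found; is XR Plug-in Management configured?");
            xrStarting = false;
            yield break;
        }

        XRManagerSettings manager = XRGeneralSettings.Instance.Manager;

        // Tries each loader in the configured list until one initializes.
        yield return manager.InitializeLoader();
        xrStarting = false;

        if (manager.activeLoader == null)
        {
            Debug.LogError("XR loader failed to initialize; check the OpenXR runtime and feature settings.");
            yield break;
        }

        // Starts display, input, and other subsystems provided by the active loader.
        manager.StartSubsystems();
        xrRunning = true;
        Debug.Log("XR started after ZED spatial mapping.");
    }

    private void OnDestroy()
    {
        if (!xrRunning) return;

        // Shut XR down in the reverse order of startup.
        XRManagerSettings manager = XRGeneralSettings.Instance.Manager;
        manager.StopSubsystems();
        manager.DeinitializeLoader();
        xrRunning = false;
    }
}
```

Keeping XR start-up behind a single public method means the trigger can be swapped (the mesh-started event, another ZED readiness event, or a UI button) without touching the loader logic, which makes it easier to test whether the conflict is purely about initialization order.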

I almost reached the point of trying to turn spatial mapping into a subsystem that can be enabled under XR Plug-in Management > OpenXR. I still may.

I currently have this working via streaming: the sender is implemented as a console application and the receiver as a Unity script. I also bypassed `ZEDManager` and use the `ZEDCamera` and `ZEDSpatialMappingHelper` classes directly. I’ve tried so many different things that I’m not sure what the simple fix is. It could be as simple as using one of the Mono/Stereo rigs and initializing XR manually. I may try this myself and report back if anyone is curious.