This seems similar to an existing issue, but I'd appreciate recommendations on how best to implement what I'm trying to achieve, and help clearing up a few points I'm confused about.

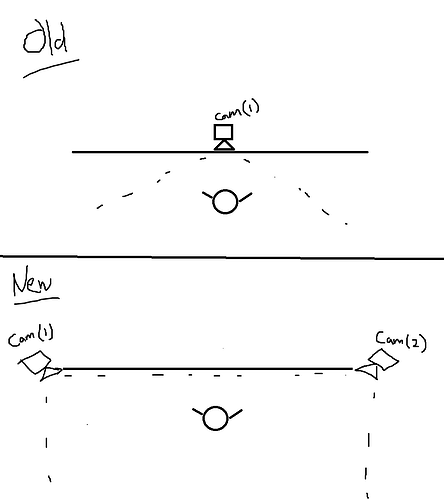

I'm projecting a mirrored avatar of each user onto the wall in front of them. I originally built the project around a single camera at the center of the projection wall, facing the users. The setup has since changed to two cameras, one at each corner of the projection wall (about 30 ft apart, off to the sides of the users). I've been trying to re-orient the Virtual_Camera and ZEDPawn in the scene to reflect this, but moving and rotating them doesn't change the camera's perspective relative to the users: it still behaves as if the camera were at the center of the projection wall. Even editing the offsets in the calibration file by hand doesn't seem to have any effect.

Is there something I'm missing? It feels like I'd have to rebuild the whole scene around the new camera position at the level origin, when I should just be able to move an actor to apply the transformation. Thanks!

(Pardon the crude paint drawing - this is the old and new setup as described above.)

Hi,

By default, the ZED Pawn's camera is used as the main camera, so you see the scene from its point of view (that of the ZED camera).

To change the PoV of the scene, you indeed need to add a “BP_VirtualCamera” actor. You can then move it anywhere in the scene.

If I understand correctly what you are trying to achieve, you should just need to place this BP_VirtualCamera actor at the position of the “old” camera in your drawing.

Is that what you tried?

This is indeed one of the setups I’ve tried so far.

No matter where I place the ZEDPawn and the BP_VirtualCamera actor in the scene, the incoming data still behaves as if Camera 1 were at the old position in the Unreal scene (in other words, the position of my virtual camera doesn't seem to affect anything).

For context, I'm using the BP_ZEDLiveLink_Manager for camera data. Could it be that this doesn't initialize the scene the way the BP_ZED_Initializer would? I removed the Initializer because the project doesn't need it to detect cameras: the user data is provided by the Fusion application, and including the Initializer throws camera detection errors.

Since you are using our Fusion module, you don't need our zed-ue5 plugin at all; only the LiveLink plugin (GitHub - stereolabs/zed-livelink: ZED LiveLink Plugin for Unreal) is necessary.

Indeed, in a Fusion configuration, the skeleton data is provided relative to the origin of the system, which is usually on the ground directly below one of the cameras. This is described in the calibration file you generated with ZED360: the position of one camera should be x = 0, y = -height, z = 0.
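As a worked example for the two-corner setup above, here is where the old central viewpoint lands in that frame. This assumes the origin is on the floor below camera 1, the x axis runs along the projection wall from camera 1 toward camera 2, the axes of the two frames are aligned (translation only), and units are metres; check these directions against your own calibration file before using the numbers.

$$
d = 30\,\text{ft} \times 0.3048\,\tfrac{\text{m}}{\text{ft}} = 9.144\,\text{m},
\qquad
\mathbf{c} = \left(\tfrac{d}{2},\; 0,\; 0\right) = (4.572,\; 0,\; 0)\,\text{m}
$$

$$
\mathbf{p}_{\text{wall}} = \mathbf{p}_{\text{fusion}} - \mathbf{c}
$$

Here $\mathbf{c}$ is the floor point below the middle of the wall (where the old camera stood), $\mathbf{p}_{\text{fusion}}$ is a skeleton keypoint as delivered by Fusion, and $\mathbf{p}_{\text{wall}}$ is the same keypoint expressed relative to the wall center.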

You can artificially offset the origin of the system by applying the same translation to the positions of all the cameras.
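If you would rather bake that offset into the calibration file than correct it on the Unreal side, the edit can be scripted. Assuming each camera's pose maps its local points into the shared frame as $R_i\,\mathbf{p} + \mathbf{t}_i$, adding the same $\Delta$ to every $\mathbf{t}_i$ (rotations unchanged) moves every fused keypoint by exactly $\Delta$, so $\Delta = -\mathbf{c}$ recenters the origin. The sketch below is not an official tool: it assumes the ZED360 file is JSON keyed by camera serial, with each pose stored under `world.translation` as `[x, y, z]` in metres. That layout, the function names, and the serial numbers are assumptions for illustration, so compare against your own file first.

```python
import copy
import json
from typing import Any, Dict, Sequence


def shift_all_cameras(calib: Dict[str, Any], delta: Sequence[float]) -> Dict[str, Any]:
    """Return a copy of `calib` with every camera translation shifted by `delta`.

    ASSUMED layout: {serial: {"world": {"rotation": [...], "translation": [x, y, z]}}}.
    Rotations are left untouched, so every fused point moves by exactly `delta`.
    """
    if len(delta) != 3:
        raise ValueError("delta must be an (x, y, z) triple")
    shifted = copy.deepcopy(calib)  # never mutate the caller's data
    for cam in shifted.values():
        t = cam["world"]["translation"]
        cam["world"]["translation"] = [ti + di for ti, di in zip(t, delta)]
    return shifted


def shift_calibration_file(src_path: str, dst_path: str, delta: Sequence[float]) -> None:
    """Read a calibration JSON, shift every camera, and write the result to a new file."""
    with open(src_path, "r", encoding="utf-8") as f:
        calib = json.load(f)
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(shift_all_cameras(calib, delta), f, indent=4)


if __name__ == "__main__":
    # Illustrative values only: hypothetical serials, 1.5 m camera height,
    # cameras 30 ft (9.144 m) apart along x with camera 1 at the origin.
    half_baseline = 30 * 0.3048 / 2  # about 4.572 m from either corner to the wall center
    demo = {
        "11111111": {"world": {"rotation": [0.0, 0.0, 0.0], "translation": [0.0, -1.5, 0.0]}},
        "22222222": {"world": {"rotation": [0.0, 0.0, 0.0], "translation": [9.144, -1.5, 0.0]}},
    }
    for serial, cam in shift_all_cameras(demo, (-half_baseline, 0.0, 0.0)).items():
        print(serial, cam["world"]["translation"])
```

Writing to a separate output file keeps the original ZED360 calibration intact as a fallback if the shifted origin turns out to point the wrong way along x.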