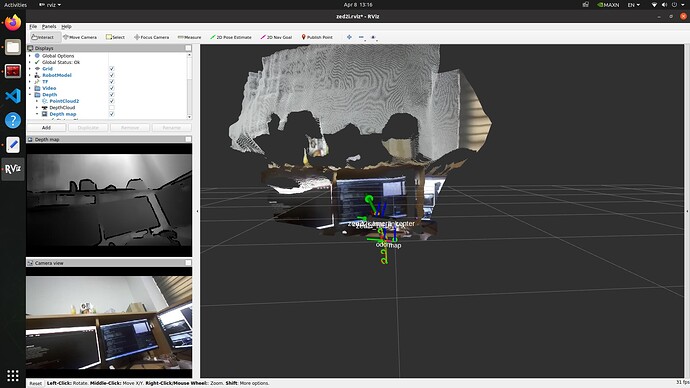

I’m using the ZED wrapper with NEURAL depth mode and no confidence filtering, but there are still NaN values in my depth map. When I use NEURAL mode directly in the SDK, it works well.

I’m using the ZED 2i camera model.

Here is my ‘common.yaml’:

# params/common.yaml

# Common parameters to Stereolabs ZED and ZED mini cameras

---

# Dynamic parameters cannot have a namespace

brightness: 4 # Dynamic

contrast: 4 # Dynamic

hue: 0 # Dynamic

saturation: 4 # Dynamic

sharpness: 4 # Dynamic

gamma: 8 # Dynamic - Requires SDK >=v3.1

auto_exposure_gain: false # Dynamic

gain: 100 # Dynamic - works only if `auto_exposure_gain` is false

exposure: 40 # Dynamic - works only if `auto_exposure_gain` is false

auto_whitebalance: true # Dynamic

whitebalance_temperature: 42 # Dynamic - works only if `auto_whitebalance` is false

depth_confidence: 100 # Dynamic

depth_texture_conf: 100 # Dynamic

point_cloud_freq: 15.0 # Dynamic - frequency of the pointcloud publishing (equal or less to `grab_frame_rate` value)

general:

camera_name: zed # A name for the camera (can be different from camera model and node name and can be overwritten by the launch file)

zed_id: 0

serial_number: 0

gpu_id: -1

base_frame: 'base_link' # must be equal to the frame_id used in the URDF file

sdk_verbose: 1 # Set verbose level of the ZED SDK

svo_compression: 2 # `0`: LOSSLESS, `1`: AVCHD, `2`: HEVC

self_calib: true # enable/disable self calibration at starting

camera_flip: 'AUTO' # camera flip mode: 'OFF', 'ON', 'AUTO'

pub_resolution: 'CUSTOM' # The resolution used for output. 'NATIVE' to use the same `general.grab_resolution` - `CUSTOM` to apply the `general.pub_downscale_factor` downscale factor to reduce bandwidth in transmission

pub_downscale_factor: 2.0 # rescale factor used to rescale image before publishing when 'pub_resolution' is 'CUSTOM'

pub_frame_rate: 15.0 # frequency of publishing of video and depth data (see SDK API "InitParameters::grab_compute_capping_fps")

svo_realtime: true # if true the input SVO will be played trying to respect the original framerate eventually skipping frames, otherwise every frame will be processed respecting the `pub_frame_rate` setting

region_of_interest: '[]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#region_of_interest: '[[0.25,0.33],[0.75,0.33],[0.75,0.5],[0.5,0.75],[0.25,0.5]]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#region_of_interest: '[[0.25,0.25],[0.75,0.25],[0.75,0.75],[0.25,0.75]]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#region_of_interest: '[[0.5,0.25],[0.75,0.5],[0.5,0.75],[0.25,0.5]]' # A polygon defining the ROI where the ZED SDK perform the processing ignoring the rest. Coordinates must be normalized to '1.0' to be resolution independent.

#video:

depth:

depth_mode: 'NEURAL' # 'NONE', 'PERFORMANCE', 'QUALITY', 'ULTRA', 'NEURAL'

depth_stabilization: 1 # [0-100] - 0: Disabled

openni_depth_mode: false # 'false': 32bit float meter units, 'true': 16bit uchar millimeter units

pos_tracking:

pos_tracking_enabled: true # True to enable positional tracking from start

pos_tracking_mode: 'STANDARD' # Matches the ZED SDK setting: 'QUALITY', 'STANDARD' -> Use 'QUALITY' with 'ULTRA' depth mode for improved outdoors performance

set_gravity_as_origin: true # If 'true' align the positional tracking world to imu gravity measurement. Keep the yaw from the user initial pose.

imu_fusion: true # enable/disable IMU fusion. When set to false, only the optical odometry will be used.

publish_tf: true # publish `odom -> base_link` TF

publish_map_tf: true # publish `map -> odom` TF

map_frame: 'map' # main frame

odometry_frame: 'odom' # odometry frame

area_memory_db_path: '' # file loaded when the node starts to restore the "known visual features" map.

save_area_memory_db_on_exit: false # save the "known visual features" map when the node is correctly closed to the path indicated by `area_memory_db_path`

area_memory: true # Enable to detect loop closure

floor_alignment: false # Enable to automatically calculate camera/floor offset

initial_base_pose: [0.0,0.0,0.0, 0.0,0.0,0.0] # Initial position of the `base_frame` -> [X, Y, Z, R, P, Y]

init_odom_with_first_valid_pose: true # Enable to initialize the odometry with the first valid pose

path_pub_rate: 2.0 # Camera trajectory publishing frequency

path_max_count: -1 # use '-1' for unlimited path size

two_d_mode: false # Force navigation on a plane. If true the Z value will be fixed to "fixed_z_value", roll and pitch to zero

fixed_z_value: 0.00 # Value to be used for Z coordinate if `two_d_mode` is true

depth_min_range: 0.0 # Set this value for removing fixed zones of the robot in the FoV of the camera from the visual odometry evaluation

set_as_static: false # If 'true' the camera will be static and not move in the environment

mapping:

mapping_enabled: false # True to enable mapping and fused point cloud publication

resolution: 0.05 # maps resolution in meters [0.01f, 0.2f]

max_mapping_range: -1 # maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0]

fused_pointcloud_freq: 1.0 # frequency of the publishing of the fused colored point cloud

clicked_point_topic: '/clicked_point' # Topic published by Rviz when a point of the cloud is clicked. Used for plane detection

sensors:

sensors_timestamp_sync: false # Synchronize Sensors messages timestamp with latest received frame

max_pub_rate: 400. # max frequency of publishing of sensors data. MAX: 400. - MIN: grab rate

publish_imu_tf: true # publish `IMU -> <cam_name>_left_camera_frame` TF

object_detection:

od_enabled: false # True to enable Object Detection [not available for ZED]

model: 'MULTI_CLASS_BOX_ACCURATE' # 'MULTI_CLASS_BOX_FAST', 'MULTI_CLASS_BOX_MEDIUM', 'MULTI_CLASS_BOX_ACCURATE', 'PERSON_HEAD_BOX_FAST', 'PERSON_HEAD_BOX_ACCURATE'

max_range: 15. # Maximum detection range

allow_reduced_precision_inference: true # Allow inference to run at a lower precision to improve runtime and memory usage

prediction_timeout: 0.5 # During this time [sec], the object will have OK state even if it is not detected. Set this parameter to 0 to disable SDK predictions

object_tracking_enabled: true # Enable/disable the tracking of the detected objects

mc_people: true # Enable/disable the detection of persons for 'MULTI_CLASS_BOX_X' models

mc_vehicle: true # Enable/disable the detection of vehicles for 'MULTI_CLASS_BOX_X' models

mc_bag: true # Enable/disable the detection of bags for 'MULTI_CLASS_BOX_X' models

mc_animal: true # Enable/disable the detection of animals for 'MULTI_CLASS_BOX_X' models

mc_electronics: true # Enable/disable the detection of electronic devices for 'MULTI_CLASS_BOX_X' models

mc_fruit_vegetable: true # Enable/disable the detection of fruits and vegetables for 'MULTI_CLASS_BOX_X' models

mc_sport: true # Enable/disable the detection of sport-related objects for 'MULTI_CLASS_BOX_X' models
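To quantify the problem, here is a minimal sketch that counts how many depth pixels are invalid. It assumes the depth values are available as a flat sequence of 32-bit float meters (which is what `openni_depth_mode: false` should give) and, as far as I understand the ZED SDK documentation, that NaN marks pixels with no depth estimate while +inf and -inf mark pixels that are too far or too close. The function name `invalid_depth_stats` and the sample values are made up for illustration.

```python
import math

def invalid_depth_stats(depth_values):
    """Return the fraction of NaN, too-far (+inf), too-close (-inf) and valid pixels."""
    counts = {"nan": 0, "too_far": 0, "too_close": 0, "valid": 0}
    for d in depth_values:
        if math.isnan(d):
            counts["nan"] += 1
        elif math.isinf(d):
            # Assumption: +inf means beyond max range, -inf means closer than min range
            counts["too_far" if d > 0 else "too_close"] += 1
        else:
            counts["valid"] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty depth map")
    return {name: count / total for name, count in counts.items()}

# Synthetic values standing in for one row of a real depth frame
sample = [1.0, float("nan"), 2.5, float("inf"),
          float("nan"), 0.8, float("-inf"), 3.2]
stats = invalid_depth_stats(sample)
print(stats)
```

With a real frame stored as a NumPy array, passing `depth.ravel()` should work the same way, although a vectorized `numpy.isnan` would be much faster. Comparing these fractions between the wrapper output and the SDK output would show whether the wrapper really produces more NaN pixels or whether the viewer just displays them differently.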