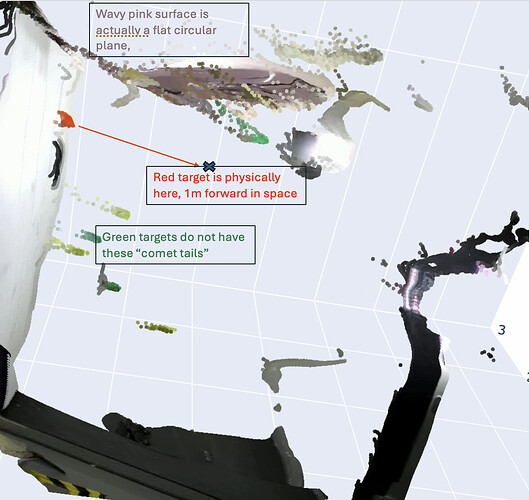

The attached video clip shows several issues that seem to stem from the NEURAL depth algorithm:

- Planar targets appear very non-planar

- Small targets get extruded to much greater depth at their edges, producing a “comet tail” effect

- Red targets appear at a significant depth offset, e.g. a target 1.2 m from the camera is reported at 2.2 m

I’ve tried to capture some of these in the screenshot, but they are more apparent in the video linked above.

Is there any way to mitigate these effects? In my use case, I don’t need extreme speed, but I do need reliable depth accuracy. I fabricated a 2m-tall calibration target to spatially match the ZED 2i’s output to another sensor, and with such significant distortion, I cannot register the sensors together.
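To put a number on the non-planarity, one option is to fit a plane to the points that fall on the calibration target and report the RMS residual. The following is only a minimal sketch, not part of the ZED SDK: `fit_plane_rms` is a hypothetical helper, and it assumes the target's points have already been cropped out of the point cloud as `(x, y, z)` tuples in metres, with the target roughly facing the camera so that depth `z` can be modelled as a linear function of `x` and `y`.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def fit_plane_rms(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points.

    Returns (a, b, c, rms), where rms is the root-mean-square residual
    measured along z (not perpendicular to the plane), in the input units.
    Hypothetical helper for illustration only.
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least 3 points to fit a plane")

    # Accumulate the sums that make up the normal equations.
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)

    # Normal equations: m * [a, b, c]^T = v
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(n)]]
    v = [sxz, syz, sz]

    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("points are degenerate (e.g. collinear in x, y)")

    # Solve the 3x3 system with Cramer's rule.
    coeffs = []
    for col in range(3):
        mi = [row[:] for row in m]
        for r in range(3):
            mi[r][col] = v[r]
        coeffs.append(_det3(mi) / d)
    a, b, c = coeffs

    rms = math.sqrt(
        sum((z - (a * x + b * y + c)) ** 2 for x, y, z in points) / n
    )
    return a, b, c, rms
```

An RMS residual close to the sensor's expected depth noise would suggest the target is being reconstructed as flat. A much larger value gives a concrete figure to compare across depth modes or settings.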

Please let me know if you need more information. Thanks!