Do you have any recommendations or code projects showing how to post-process skeleton data so that it looks smoother and the characters stay on the floor (rather than passing beneath the ground)? Take the following video as an example, which I created by modifying the ZED Unity livelink sample: the avatars constantly make small, jerky movements and sink underground. I am also wondering whether this can be done from within Unity or Unreal Engine.

Hi,

Indeed, the skeletons are very jittery.

You are using pre-recorded data. How are you controlling the frame rate of the animation? Are you updating the avatars in an Update loop at the frame rate of the scene?

Can you try updating the animation at a fixed fps that matches the frame rate of the recorded data?
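One way to decouple playback from the render loop is to pick the recorded frame from the elapsed playback time instead of advancing one frame per Update call. The sketch below is in Python for brevity and is not taken from the ZED samples; `frame_index_at` and its arguments are hypothetical names, and it assumes each recorded frame carries a timestamp in milliseconds.

```python
from bisect import bisect_right


def frame_index_at(timestamps_ms, elapsed_ms):
    """Return the index of the latest recorded frame at or before elapsed_ms.

    timestamps_ms: sorted capture timestamps of the recording (milliseconds).
    elapsed_ms: playback time since the start of the recording (milliseconds).
    """
    if not timestamps_ms:
        raise ValueError("the recording contains no frames")
    start = timestamps_ms[0]
    # Express every timestamp relative to the first frame.
    offsets = [t - start for t in timestamps_ms]
    # bisect_right counts the frames whose offset is <= elapsed_ms.
    index = bisect_right(offsets, elapsed_ms) - 1
    # Clamp so times before the start or after the end stay in range.
    return max(0, min(index, len(offsets) - 1))
```

Called once per rendered frame with the playback clock, this shows the same recorded frame until the next timestamp is reached, so the animation speed follows the recording rather than the scene's frame rate.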

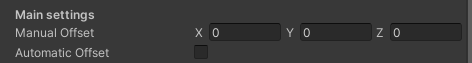

To stick the avatars to the floor, you can try either the "manual" or the "automatic" offset option available in the plugin. You can find it on the Prefab of the avatar.

The automatic offset tries to keep the feet of the avatar on the ground without preventing jumps.

Stereolabs Support

Hi Ben, is solving this issue a priority for you?

Hello @raychen,

We’re indeed always working on improving the skeletons.

Nevertheless, such jitter in live usage (I think @haakonflaar is replaying data in their example) should be fixed by either redoing the calibration or tweaking the parameters of the SDK, mainly body format and body model. It also depends on the number of cameras connected to the same computer in a USB workflow, and on the hardware of that computer (running one instance of the SDK per camera puts quite a heavy load on the GPU and can decrease the fps).

Jean-Loup

As far as I know, there is no way to set a fixed FPS when running skeleton tracking with fused cameras. Let me know if that functionality is or will be available. The data I have has an average interval between timesteps of approximately 52 ms, i.e. 1000 / 52 ≈ 19.2 FPS. Currently I have only added a 52 ms sleep after each timestep in the Update loop.

What would be a better solution? I guess interpolating the data so that it has a fixed timestep (FPS). Do you have instructions or code for doing so?

Setting automatic offset did not help. I can always tweak the manual offset, but the correct offset varies over time for the same person so I don’t believe that is an ideal solution either. Using relative rotations and global position, is there a way to process the data so that the person will always have his feet on the floor?

Is it possible to put a collider around the avatars to prevent them from colliding with the ground, themselves, other avatars and other objects in the scene?

> As far as I know, there is no way to set a fixed FPS when running skeleton tracking with fused cameras. Let me know if that functionality is or will be available. The data I have has an average interval between timesteps of approximately 52 ms, i.e. 1000 / 52 ≈ 19.2 FPS. Currently I have only added a 52 ms sleep after each timestep in the Update loop.

At this point, I think the way to get a fixed fps is to lower the fps on the senders.

The Fusion module has no fps of its own; it uses the fps of the senders.

I imagine you’re recording at 30 fps on each camera. If you get an unstable average of 19 fps, I think setting initParameters.camera_fps to 15 instead would give a stable fusion at 15 fps. (You can also try lowering the resolution, if possible, and staying at 30 fps.)

Can I ask what hardware you’re running this sample on (CPU/GPU)?

> What would be a better solution? I guess interpolating the data so that it has a fixed timestep (FPS). Do you have instructions or code for doing so?

Interpolating the data could indeed be a solution. I would do so by creating a Dictionary of HumanBodyBones to Quaternion, let’s call it rigBoneRotationLastFrame, to store the local rotations (like rigBoneTarget in SkeletonHandler). Initialize it at the same time as rigBoneTarget, in the Create() function of SkeletonHandler.cs.

Then, in the LateUpdate of the ZEDSkeletonAnimator (so after the whole animation pipeline is done), loop over it and replace each animator.GetBoneTransform(bone).localRotation with a Quaternion.Slerp() from the rigBoneRotationLastFrame rotation to the current animator.GetBoneTransform(bone).localRotation.

Then update rigBoneRotationLastFrame with animator.GetBoneTransform(bone).localRotation.

In the next updates of the sample, we’ll add this interpolation option.
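To make the idea concrete, here is a minimal, engine-independent sketch of that per-bone smoothing, written in Python rather than Unity C#. It is not the plugin's code: `slerp`, `BoneSmoother` and the `factor` parameter are hypothetical names, quaternions are assumed to be unit-length (x, y, z, w) tuples, and `self.last` plays the role of rigBoneRotationLastFrame. In Unity, the `slerp` helper would simply be Quaternion.Slerp().

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between two unit quaternions (x, y, z, w)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q encode the same rotation; flip one to take the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: a plain lerp avoids dividing by ~0.
        out = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)


class BoneSmoother:
    """Blend each bone's new target rotation with the rotation shown last frame."""

    def __init__(self, factor=0.5):
        self.factor = factor  # 1.0 = no smoothing, smaller values = smoother but laggier
        self.last = {}        # bone name -> rotation applied on the previous frame

    def update(self, targets):
        smoothed = {}
        for bone, target in targets.items():
            # On the first frame there is no history, so use the target as-is.
            previous = self.last.get(bone, target)
            smoothed[bone] = slerp(previous, target, self.factor)
        self.last = smoothed
        return smoothed
```

A smaller `factor` removes more jitter at the cost of extra latency, so the value is a trade-off to tune against the recording.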

> Setting automatic offset did not help. I can always tweak the manual offset, but the correct offset varies over time for the same person so I don’t believe that is an ideal solution either. Using relative rotations and global position, is there a way to process the data so that the person will always have his feet on the floor?

Unfortunately, that would mean tweaking the automatic offset function. It will also be made more reliable in upcoming updates.
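As a starting point for such a tweak on pre-recorded data, the sketch below computes a per-frame vertical offset from the height of the lowest foot joint. It is written in Python and is not the plugin's automatic offset algorithm: `floor_offsets`, `floor_y` and `smoothing` are hypothetical names, and it assumes you can extract the lowest foot height of each frame from the global joint positions. The offset is raised immediately whenever a foot would sink below the floor, and relaxed only slowly when the feet are above it, so short jumps are not cancelled out.

```python
def floor_offsets(lowest_foot_y, floor_y=0.0, smoothing=0.1):
    """Return one vertical offset per frame to add to the avatar's root height.

    lowest_foot_y: per-frame height of the lowest foot joint, before correction.
    floor_y: height of the floor in the same coordinate system.
    smoothing: fraction of the remaining gap closed per frame while airborne.
    """
    offsets = []
    offset = None
    for y in lowest_foot_y:
        needed = floor_y - y  # offset that would put the foot exactly on the floor
        if offset is None:
            offset = needed   # first frame: start grounded
        elif needed > offset:
            offset = needed   # foot would go underground: correct at once
        else:
            # Foot is above the floor (step or jump): drift down slowly.
            offset += smoothing * (needed - offset)
        offsets.append(offset)
    return offsets
```

Because the offset only drifts while the feet are in the air, a person whose tracked height slowly changes over time is still pulled back to the floor within a few frames of landing.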

> Is it possible to put a collider around the avatars to prevent them from colliding with the ground, themselves, other avatars and other objects in the scene?

That’s the approach we used in our Unreal plugin, but for now we have not explored this in Unity.

The Foot IK / animation workflow will be updated in the coming weeks for the next Unity/Live Link sample update, and should bring noticeably better results.

Best,

Jean-Loup

@JPlou It is still glitchy after interpolating the data to 30 FPS.

Would you recommend using Unreal instead? Do you believe the glitchy effects will be less there? Also, do you have a modified livelink sample in Unreal where I can use pre-recorded data?

@haakonflaar sorry to hear that.

Indeed, Unreal/Live Link has more smoothing options that could reduce the jitters.

We don’t have a modified sample that runs with pre-recorded data. However, it can run with SVOs and the calibration file, like the Unity Live Link and other Fusion samples.

You can modify it to fit your needs and use the recorded data (it should not be very different algorithmically from what’s required on Unity). Just be aware that you’ll need the UE5 source code to build the sample. You can find more information on this page of the documentation: Building ZED Live Link | Stereolabs

The main difference with Unity is that the data is formatted to Live Link format in the sender before being sent.

@JPlou I have gotten the Unreal livelink sample to run using data from a live camera. I have no prior experience with Unreal - could you point me in the direction to where I should modify the code to read and animate from a .JSON file instead of listening to UDP messages?

@haakonflaar To fully benefit from the Live Link smoothing features, your pipeline should probably be similar to what we do: transform your data into Live Link ready data and read them in UE5 through Live Link. You should have very little to do in the Unreal Engine editor itself.

Most of the work is done in this C++ file: zed-livelink/main.cpp at main · stereolabs/zed-livelink · GitHub

The core of the conversion is in this function: zed-livelink/main.cpp at 1ea1e95f0e734674ea95cc5c4284f4c6e6ff1e39 · stereolabs/zed-livelink · GitHub

Then, in the Live Link settings, you can set the smoothing parameter.

One more thought, though: I suppose your data is the result of the Fusion? Is it possible for you to re-record the SVOs at 15 fps (by setting the fps on each camera, as I mentioned in an earlier message on this topic) so that the fps is stable? This way, the Fusion should give cleaner results, and I would expect your interpolation to be cleaner too.

@JPlou How does using .SVO files work when performing fusion? Can I enable .SVO recording on all cameras and load all SVO files and run fusion on the SVO files? Do you have a sample project performing fusion on multiple SVO files?

How do I rebuild the zed-livelink-fusion project if I make any changes to it? I can’t see a CMakeLists.txt there.

> How does using .SVO files work when performing fusion? Can I enable .SVO recording on all cameras and load all SVO files and run fusion on the SVO files? Do you have a sample project performing fusion on multiple SVO files?

The process for using SVOs is as you said, with one extra step: ZED360 calibration.

So:

- Configure your cameras with ZED360 : ZED360 - Stereolabs

- Save the file

- Record the SVOs by any means. We have a multi-camera recording sample available, for instance: zed-sdk/recording/recording/multi camera/cpp at master · stereolabs/zed-sdk · GitHub

- Modify your calibration file to indicate that the Fusion should use SVOs. I went into more depth in this post: Fusion Sample Code with SVO Files - #2 by JPlou

- Run a Fusion sample, passing the edited configuration file (it should work as is): zed-sdk/body tracking/multi-camera at master · stereolabs/zed-sdk · GitHub
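The calibration file edit in the fourth step can be scripted. The sketch below is in Python and rests on an assumption about the file layout that should be checked against the linked post and your own ZED360 output: that the file is a JSON object keyed by camera serial number, where each camera has an "input" > "zed" section with a "type" and a "configuration" field, and that the SVO variant uses the type "SVO_FILE" with the SVO path as configuration. `use_svo_inputs` is a hypothetical helper name.

```python
import copy


def use_svo_inputs(calibration, svo_paths):
    """Return a copy of a calibration dict whose cameras read from SVO files.

    calibration: parsed ZED360 calibration JSON (assumed layout, see above).
    svo_paths: mapping of camera serial number (as a string) to SVO file path.
    """
    updated = copy.deepcopy(calibration)  # leave the caller's data untouched
    for serial, path in svo_paths.items():
        zed_input = updated[serial]["input"]["zed"]
        # Assumed field names and value; verify them before relying on this.
        zed_input["type"] = "SVO_FILE"
        zed_input["configuration"] = path
    return updated
```

In practice you would load the file with `json.load`, pass the result through this function, and write it back with `json.dump` under a new name, so the original live-camera calibration is kept.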

> How do I rebuild the zed-livelink-fusion project if I make any changes to it? I can’t see a CMakeLists.txt there.

Rebuilding the zed-livelink-fusion project involves a particular process described on this page: Building ZED Live Link - Stereolabs

Basically, you need UE5 source, and to clone the zed-livelink repo (the whole repo) into <Engine Install Folder>\Engine\Source\Programs\. Then, after working on the sender in the repo, build it as described in the documentation.