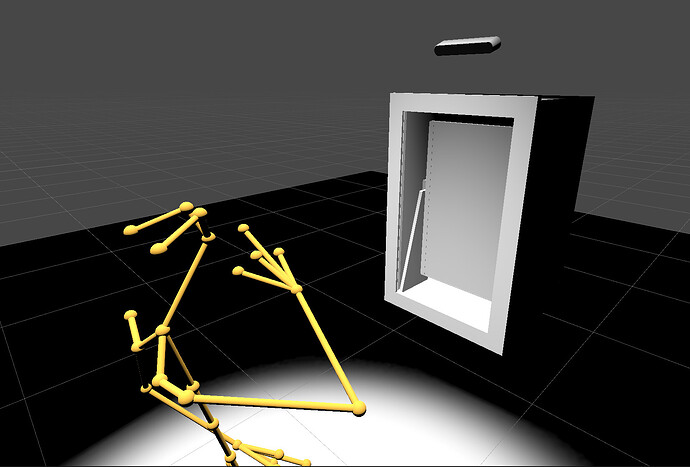

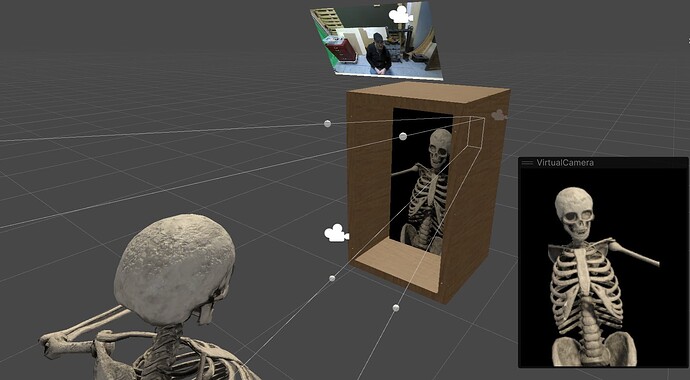

I’m working on a prototype “smart mirror” setup corresponding to the Unity screenshot shown above. A ZED 2i is placed above a small box that has a one-way security mirror set into a frame (the mirror is not shown here so we can see inside the box). The ZED 2i faces forward and downwards in order to track the person looking into the mirror frame. Inside the box is a monitor set roughly as deep behind the mirror as the viewer stands in front of it.

I’m going to render content on this monitor so that it appears co-located with the viewer’s mirror image.

The overall approach is:

- Set up Unity project as outlined in Body Tracking - Stereolabs.

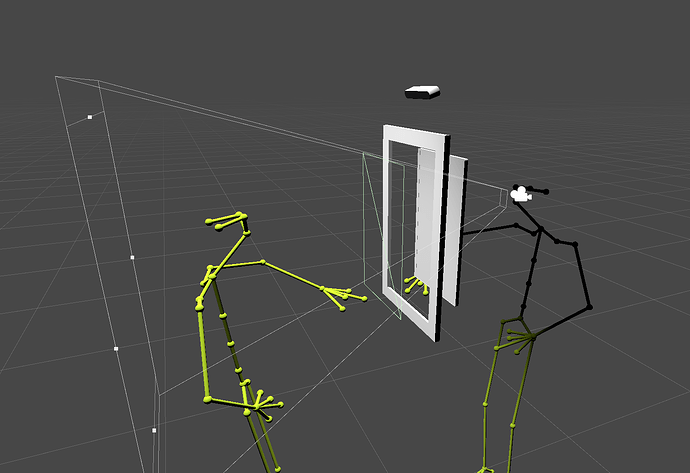

- Create an additional virtual camera for the monitor content.

- When a tracked body appears, determine its left-eye position in world space coordinates.

- Position the virtual camera on the opposite side of the mirror plane at this eye position, looking back towards the viewer.

- Adjust the virtual camera’s frustum so that it intersects with a mirrored version of the monitor. Note that this is an asymmetric view frustum. In Unity it can be achieved by adjusting the lens shift parameters of a physical camera.

- Repeat 4-5 as the tracked body’s eye-point changes.
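Steps 4 and 5 come down to two small pieces of geometry: reflecting the eye position `p` across the mirror plane (a point `q` on it, unit normal `n`) as `p' = p - 2((p - q)·n)n`, and choosing a focal length and lens shift so that the frustum from `p'` passes through the edges of the mirrored monitor. The following is a minimal sketch in plain C# with `System.Numerics`, so it runs outside Unity; `MirrorFrustum`, `ReflectPoint` and `OffAxis` are hypothetical names. It assumes the monitor is parallel to the mirror, the virtual camera's forward axis is the mirror normal, the sensor aspect ratio matches the monitor's, and that a positive lens shift moves the view right/up (a sign convention worth confirming in the editor).

```csharp
using System.Numerics;

// Hypothetical helpers for steps 4 and 5 (plain System.Numerics, no Unity dependency).
public static class MirrorFrustum
{
    // Step 4: reflect point p across the plane through planePoint with unit normal planeNormal.
    public static Vector3 ReflectPoint(Vector3 p, Vector3 planePoint, Vector3 planeNormal)
    {
        float signedDistance = Vector3.Dot(p - planePoint, planeNormal);
        return p - 2f * signedDistance * planeNormal;
    }

    // Step 5: focal length and lens shift for a physical camera at camPos (axes right/up/forward)
    // whose frustum must pass through the edges of a screenWidth x screenHeight rectangle
    // centred at screenCenter and facing the camera (i.e. the mirrored monitor).
    // Assumes: screen plane perpendicular to 'forward', screen in front of the camera
    // (distance > 0), and sensor height = sensorWidth * screenHeight / screenWidth.
    public static (float focalLength, Vector2 lensShift) OffAxis(
        Vector3 camPos, Vector3 right, Vector3 up, Vector3 forward,
        Vector3 screenCenter, float screenWidth, float screenHeight, float sensorWidth)
    {
        Vector3 toCenter = screenCenter - camPos;
        float distance = Vector3.Dot(toCenter, forward); // perpendicular distance to the screen plane
        float offsetX = Vector3.Dot(toCenter, right);    // screen-centre offset from the optical axis
        float offsetY = Vector3.Dot(toCenter, up);

        // Frustum width at 'distance' must equal screenWidth: sensorWidth / f = screenWidth / distance.
        float focalLength = sensorWidth * distance / screenWidth;

        // Lens shift is a fraction of the sensor size; a shift s moves the frustum centre by
        // s * screenWidth (or s * screenHeight) at the screen plane. Assumed sign: +right / +up.
        var lensShift = new Vector2(offsetX / screenWidth, offsetY / screenHeight);
        return (focalLength, lensShift);
    }
}
```

In Unity, the inputs would be the reflected eye position, the virtual camera's `transform.right`, `transform.up` and `transform.forward`, and the mirrored monitor centre; the outputs would go into `Camera.focalLength` and `Camera.lensShift` with `Camera.usePhysicalProperties` enabled and `Camera.sensorSize` set to the monitor's aspect ratio. Monitor size and positions should share one unit (e.g. metres), while the focal length comes out in the sensor's unit (millimetres in Unity).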

At the moment I’m stuck on step 3. Here’s a code snippet from a script attached to the virtual camera. It runs once per frame.

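```csharp
// Runs once per frame on the virtual camera (no error/null checking yet).
void Update()
{
    // Latest body tracking results from the ZED
    BodyTrackingFrame bodyTrackingFrame = zedManager.GetBodyTrackingFrame;
    DetectedBody firstDetectedBody = bodyTrackingFrame.detectedBodies[0];
    BodyData bodyData = firstDetectedBody.rawBodyData;

    // Left-eye keypoint of the first detected body
    Vector3 eyePosition = bodyData.keypoint[(int)BODY_38_PARTS.LEFT_EYE];
    Debug.Log(eyePosition);

    // Move this virtual camera to the eye position
    transform.position = eyePosition;
}
```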

Ignore for the moment that there is no error/null checking going on here. How do I transform a keypoint from its local coordinate system to the world coordinate system?
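For reference, mapping a point from a sensor's local frame into world space is a rotation followed by a translation; in Unity this is what `Transform.TransformPoint` computes for a transform with unit scale. The sketch below restates that math in plain C# with `System.Numerics` so it runs outside Unity. It assumes, without confirmation from the ZED documentation, that the keypoints are expressed relative to the ZED camera and that the camera's world-space position and rotation are known; `KeypointFrames` and `LocalToWorld` are hypothetical names.

```csharp
using System.Numerics;

// Plain-C# restatement of a rigid local-to-world transform
// (equivalent to Unity's Transform.TransformPoint when the scale is 1).
public static class KeypointFrames
{
    // Maps a point expressed in a sensor's local frame into world space,
    // given the sensor's world-space position and orientation.
    // Assumption (hypothetical): keypoints are reported relative to the ZED camera.
    public static Vector3 LocalToWorld(Vector3 localPoint, Vector3 sensorPosition, Quaternion sensorRotation)
    {
        // Rotate into the world orientation, then translate by the sensor's position.
        return Vector3.Transform(localPoint, sensorRotation) + sensorPosition;
    }
}
```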

Any help is much appreciated.