Hi all,

I just bought two Zed 2i, then I plan to use them together.

One fixed on the front side of a vehicle, the second one fixed on the rear side of the vehicle.

The goal is to create a realtime 3D surround view.

So, is there a documentation/tutorial that explains how to fix the position of the two cameras relative of a fixed coordinates then use them for realtime mapping ?

Thank you.

Hello,

I started my tests but I encounter a bug (?) during the mapping process :

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

Here a minimal test case :

#include <sl/Camera.hpp>

using namespace std;

using namespace sl;

int main(int argc, char **argv) {

// Create a ZED camera object

Camera zed0;

Camera zed1;

// Set configuration parameters

InitParameters init_parameters;

init_parameters.camera_resolution = RESOLUTION::HD720; // Use HD720 video mode (default fps: 60)

init_parameters.coordinate_system = COORDINATE_SYSTEM::RIGHT_HANDED_Y_UP; // Use a right-handed Y-up coordinate system

init_parameters.coordinate_units = UNIT::METER; // Set units in meters

// Open the camera

init_parameters.input.setFromSVOFile("cam01.svo");

init_parameters.svo_real_time_mode = true;

zed0.open(init_parameters);

init_parameters.input.setFromSVOFile("cam02.svo");

init_parameters.svo_real_time_mode = true;

zed1.open(init_parameters);

// Enable positional tracking with default parameters. Positional tracking needs to be enabled before using spatial mapping

sl::PositionalTrackingParameters tracking_parameters;

zed0.enablePositionalTracking(tracking_parameters);

zed1.enablePositionalTracking(tracking_parameters);

// Enable spatial mapping

sl::SpatialMappingParameters mapping_parameters;

zed0.enableSpatialMapping(mapping_parameters);

zed1.enableSpatialMapping(mapping_parameters);

// Grab data during 500 frames

int i = 0;

sl::Mesh mesh0; // Create a mesh object

sl::Mesh mesh1; // Create a mesh object

while (i < 200) {

// For each new grab, mesh data is updated

if (zed0.grab() == ERROR_CODE::SUCCESS) {

// In the background, spatial mapping will use newly retrieved images, depth and pose to update the mesh

sl::SPATIAL_MAPPING_STATE mapping_state = zed0.getSpatialMappingState();

// Print spatial mapping state

cout << "\rImages 0 captured: " << i << " / 500 || Spatial mapping state: " << mapping_state << "\t" << flush;

i++;

}

if (zed1.grab() == ERROR_CODE::SUCCESS) {

// In the background, spatial mapping will use newly retrieved images, depth and pose to update the mesh

sl::SPATIAL_MAPPING_STATE mapping_state = zed1.getSpatialMappingState();

// Print spatial mapping state

cout << "\rImages 1 captured: " << i << " / 500 || Spatial mapping state: " << mapping_state << "\t" << flush;

i++;

}

}

cout << endl;

// Extract, filter and save the mesh in a obj file

cout << "Extracting Mesh...\n";

zed0.extractWholeSpatialMap(mesh0); // Extract the whole mesh

zed1.extractWholeSpatialMap(mesh1); // Extract the whole mesh

cout << "Filtering Mesh...\n";

mesh0.filter(sl::MeshFilterParameters::MESH_FILTER::LOW); // Filter the mesh (remove unnecessary vertices and faces)

mesh1.filter(sl::MeshFilterParameters::MESH_FILTER::LOW); // Filter the mesh (remove unnecessary vertices and faces)

cout << "Saving Mesh...\n";

mesh0.save("mesh0.obj"); // Save the mesh in an obj file

mesh1.save("mesh1.obj"); // Save the mesh in an obj file

// Disable tracking and mapping and close the camera

zed0.disableSpatialMapping();

zed1.disableSpatialMapping();

zed0.disablePositionalTracking();

zed1.disablePositionalTracking();

zed0.close();

zed1.close();

return EXIT_SUCCESS;

}

I have the error when I try to adapt the “spatial mapping” sample too.

Config :

Zed SDK : 3.7.2

Cuda : 11.5.119

Processor : i9-11980HK

RAM : 32Go

GPU : Nvidia RTX3080 16Go

OS : Windows 10 Pro

I made another test using the “spacial mapping” sample with the following modified code :

/**********************************************************************************

** This sample demonstrates how to capture a live 3D reconstruction of a scene **

** as a fused point cloud and display the result in an OpenGL window. **

**********************************************************************************/

// ZED includes

#include <sl/Camera.hpp>

// Sample includes

#include "GLViewer.hpp"

#include <opencv2/opencv.hpp>

// Using std and sl namespaces

using namespace std;

using namespace sl;

int main(int argc, char **argv) {

Camera zed1;

Camera zed2;

// Set configuration parameters for the ZED

InitParameters init_parameters;

init_parameters.depth_mode = DEPTH_MODE::ULTRA;

init_parameters.coordinate_system = COORDINATE_SYSTEM::RIGHT_HANDED_Y_UP; // OpenGL's coordinate system is right_handed

// Open the camera

init_parameters.input.setFromCameraID(0);

zed1.open(init_parameters);

init_parameters.input.setFromCameraID(1);

zed2.open(init_parameters);

auto camera_infos = zed1.getCameraInformation();

// Point cloud viewer

GLViewer viewer;

// Initialize point cloud viewer

FusedPointCloud map;

GLenum errgl = viewer.init(argc, argv, camera_infos.camera_configuration.calibration_parameters.left_cam, &map, camera_infos.camera_model);

// Setup and start positional tracking

Pose pose;

POSITIONAL_TRACKING_STATE tracking_state = POSITIONAL_TRACKING_STATE::OFF;

PositionalTrackingParameters positional_tracking_parameters;

positional_tracking_parameters.enable_area_memory = false;

zed1.enablePositionalTracking(positional_tracking_parameters);

zed2.enablePositionalTracking(positional_tracking_parameters);

// Set spatial mapping parameters

SpatialMappingParameters spatial_mapping_parameters;

// Request a Point Cloud

spatial_mapping_parameters.map_type = SpatialMappingParameters::SPATIAL_MAP_TYPE::FUSED_POINT_CLOUD;

// Set mapping range, it will set the resolution accordingly (a higher range, a lower resolution)

spatial_mapping_parameters.set(SpatialMappingParameters::MAPPING_RANGE::SHORT);

spatial_mapping_parameters.set(SpatialMappingParameters::MAPPING_RESOLUTION::HIGH);

// Request partial updates only (only the latest updated chunks need to be re-draw)

spatial_mapping_parameters.use_chunk_only = true;

// Start the spatial mapping

zed1.enableSpatialMapping(spatial_mapping_parameters);

zed2.enableSpatialMapping(spatial_mapping_parameters);

// Timestamp of the last fused point cloud requested

chrono::high_resolution_clock::time_point ts_last1;

chrono::high_resolution_clock::time_point ts_last2;

// Setup runtime parameters

RuntimeParameters runtime_parameters;

// Use low depth confidence avoid introducing noise in the constructed model

runtime_parameters.confidence_threshold = 50;

auto resolution = camera_infos.camera_configuration.resolution;

// Define display resolution and check that it fit at least the image resolution

Resolution display_resolution(min((int)resolution.width, 720), min((int)resolution.height, 404));

// Create a Mat to contain the left image and its opencv ref

Mat image_zed1(display_resolution, MAT_TYPE::U8_C4);

Mat image_zed2(display_resolution, MAT_TYPE::U8_C4);

cv::Mat image_zed_ocv1(image_zed1.getHeight(), image_zed1.getWidth(), CV_8UC4, image_zed1.getPtr<sl::uchar1>(MEM::CPU));

cv::Mat image_zed_ocv2(image_zed2.getHeight(), image_zed2.getWidth(), CV_8UC4, image_zed2.getPtr<sl::uchar1>(MEM::CPU));

// Start the main loop

while (viewer.isAvailable()) {

// Grab a new image

if (zed1.grab(runtime_parameters) == ERROR_CODE::SUCCESS) {

// Retrieve the left image

zed1.retrieveImage(image_zed1, VIEW::LEFT, MEM::CPU, display_resolution);

// Retrieve the camera pose data

tracking_state = zed1.getPosition(pose);

viewer.updatePose(pose, tracking_state);

if (tracking_state == POSITIONAL_TRACKING_STATE::OK) {

auto duration = chrono::duration_cast<chrono::milliseconds>(chrono::high_resolution_clock::now() - ts_last1).count();

// Ask for a fused point cloud update if 500ms have elapsed since last request

if((duration > 500) && viewer.chunksUpdated()) {

// Ask for a point cloud refresh

zed1.requestSpatialMapAsync();

ts_last1 = chrono::high_resolution_clock::now();

}

// If the point cloud is ready to be retrieved

if(zed1.getSpatialMapRequestStatusAsync() == ERROR_CODE::SUCCESS) {

zed1.retrieveSpatialMapAsync(map);

viewer.updateChunks();

}

}

cv::imshow("ZED View 1", image_zed_ocv1);

}

if (zed2.grab(runtime_parameters) == ERROR_CODE::SUCCESS) {

// Retrieve the left image

zed2.retrieveImage(image_zed2, VIEW::LEFT, MEM::CPU, display_resolution);

// Retrieve the camera pose data

tracking_state = zed2.getPosition(pose);

//viewer.updatePose(pose, tracking_state);

if (tracking_state == POSITIONAL_TRACKING_STATE::OK) {

auto duration = chrono::duration_cast<chrono::milliseconds>(chrono::high_resolution_clock::now() - ts_last2).count();

// Ask for a fused point cloud update if 500ms have elapsed since last request

if ((duration > 500) && viewer.chunksUpdated()) {

// Ask for a point cloud refresh

zed2.requestSpatialMapAsync();

ts_last2 = chrono::high_resolution_clock::now();

}

// If the point cloud is ready to be retrieved

if (zed2.getSpatialMapRequestStatusAsync() == ERROR_CODE::SUCCESS) {

zed2.retrieveSpatialMapAsync(map);

viewer.updateChunks();

}

}

cv::imshow("ZED View 2", image_zed_ocv2);

}

cv::waitKey(15);

}

// Save generated point cloud

//map.save("MyFusedPointCloud");

// Free allocated memory before closing the camera

image_zed1.free();

image_zed2.free();

// Close the ZED

zed1.close();

zed2.close();

return 0;

}

Only the second camera do mapping process and I get the same error in the console window :

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

CUDA error at C:\builds\sl\ZEDKit\lib\src\sl_zed\SpatialMappingHandler.cpp:539 code=400(cudaErrorInvalidResourceHandle) "cudaEventRecord(ev_cpu_data, strm)"

Is there a way to use two (or more?) cameras (fixed in rigid body together) to do realtime mapping ?

OK. For an unknown reason, if I compute each zed camera on its own thread, parallele mapping works … a little.

Here my code :

///////////////////////////////////////////////////////////////////////////

//

// Copyright (c) 2022, STEREOLABS.

//

// All rights reserved.

//

// THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

// "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

// LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

// A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT

// OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

// SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

// LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

// DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

// THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

// (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

// OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

//

///////////////////////////////////////////////////////////////////////////

/**********************************************************************************

** This sample demonstrates how to capture a live 3D reconstruction of a scene **

** as a fused point cloud and display the result in an OpenGL window. **

**********************************************************************************/

#include <sl/Camera.hpp>

#include <opencv2/opencv.hpp>

#include "GLViewer.hpp"

bool _enableMapping = true;

bool _running = true;

void run(int index, std::mutex &mutex, GLViewer &viewer, sl::FusedPointCloud &map, sl::Transform tf) {

sl::Camera zed;

// Set configuration parameters for the ZED

sl::InitParameters init_parameters;

init_parameters.depth_mode = sl::DEPTH_MODE::PERFORMANCE;

init_parameters.coordinate_system = sl::COORDINATE_SYSTEM::RIGHT_HANDED_Y_UP; // OpenGL's coordinate system is right_handed

init_parameters.coordinate_units = sl::UNIT::MILLIMETER;

init_parameters.camera_resolution = sl::RESOLUTION::HD720;

//init_parameters.camera_fps = 60;

init_parameters.depth_minimum_distance = 200;

init_parameters.sdk_verbose = true;

// Open the camera

init_parameters.input.setFromCameraID(index);

zed.open(init_parameters);

// Setup and start positional tracking

sl::PositionalTrackingParameters positional_tracking_parameters;

positional_tracking_parameters.enable_area_memory = true;

positional_tracking_parameters.enable_imu_fusion = true;

positional_tracking_parameters.initial_world_transform = tf;

zed.enablePositionalTracking(positional_tracking_parameters);

if (_enableMapping) {

// Set spatial mapping parameters

sl::SpatialMappingParameters spatial_mapping_parameters;

// Request a Point Cloud

spatial_mapping_parameters.map_type = sl::SpatialMappingParameters::SPATIAL_MAP_TYPE::FUSED_POINT_CLOUD;

// Set mapping range, it will set the resolution accordingly (a higher range, a lower resolution)

spatial_mapping_parameters.set(sl::SpatialMappingParameters::MAPPING_RANGE::LONG);

spatial_mapping_parameters.set(sl::SpatialMappingParameters::MAPPING_RESOLUTION::HIGH);

// Request partial updates only (only the latest updated chunks need to be re-draw)

spatial_mapping_parameters.use_chunk_only = true;

// Start the spatial mapping

zed.enableSpatialMapping(spatial_mapping_parameters);

}

std::chrono::high_resolution_clock::time_point ts_last;

// Setup runtime parameters

sl::RuntimeParameters runtime_parameters;

runtime_parameters.confidence_threshold = 50;

auto camera_infos = zed.getCameraInformation();

auto resolution = camera_infos.camera_configuration.resolution;

// Define display resolution and check that it fit at least the image resolution

sl::Resolution display_resolution(std::min((int)resolution.width, 720), std::min((int)resolution.height, 404));

// Create a Mat to contain the left image and its opencv ref

sl::Mat image_zed(display_resolution, sl::MAT_TYPE::U8_C4);

cv::Mat image_zed_ocv(image_zed.getHeight(), image_zed.getWidth(), CV_8UC4, image_zed.getPtr<sl::uchar1>(sl::MEM::CPU));

std::cout << "Camera " << index << " Type:" << camera_infos.camera_model << " SN:" << camera_infos.serial_number << std::endl;

while (_running) {

if (zed.grab(runtime_parameters) == sl::ERROR_CODE::SUCCESS) {

// Retrieve the left image

zed.retrieveImage(image_zed, sl::VIEW::LEFT, sl::MEM::CPU, display_resolution);

// Retrieve the camera pose data

sl::Pose pose;

auto tracking_state = zed.getPosition(pose);

viewer.updatePose(index, pose, tracking_state);

if (_enableMapping && tracking_state == sl::POSITIONAL_TRACKING_STATE::OK) {

auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(std::chrono::high_resolution_clock::now() - ts_last).count();

/*

mutex.lock();

zed.extractWholeSpatialMap(map);

viewer.updateChunks();

mutex.unlock();

*/

// Ask for a fused point cloud update if 100ms have elapsed since last request

if ((duration > 0) && viewer.chunksUpdated()) {

// Ask for a point cloud refresh

zed.requestSpatialMapAsync();

ts_last = std::chrono::high_resolution_clock::now();

}

// If the point cloud is ready to be retrieved

if (zed.getSpatialMapRequestStatusAsync() == sl::ERROR_CODE::SUCCESS && viewer.chunksUpdated() && mutex.try_lock()) {

zed.retrieveSpatialMapAsync(map);

viewer.updateChunks();

mutex.unlock();

}

}

cv::imshow("ZED View : " + std::to_string(index) + " : " + std::to_string(camera_infos.serial_number), image_zed_ocv);

}

cv::waitKey(10);

}

image_zed.free();

zed.close();

}

int main(int argc, char **argv)

{

// Initialize point cloud viewer

sl::FusedPointCloud map;

// Point cloud viewer

GLViewer viewer;

viewer.init(argc, argv, &map, sl::MODEL::ZED2i);

std::mutex zedmutex;

std::thread zed1thread(run, 0, std::ref(zedmutex), std::ref(viewer), std::ref(map), sl::Transform(sl::Rotation(), sl::Translation(0, 0, -75)));

std::thread zed2thread(run, 1, std::ref(zedmutex), std::ref(viewer), std::ref(map), sl::Transform(sl::Rotation(M_PI, sl::Translation(0, 1, 0)), sl::Translation(0, 0, 75)));

while (viewer.isAvailable())

sl::sleep_ms(1);

_running = false;

if (zed1thread.joinable()) zed1thread.join();

if (zed2thread.joinable()) zed2thread.join();

// Save generated point cloud

//map.save("MyFusedPointCloud");

return 0;

}

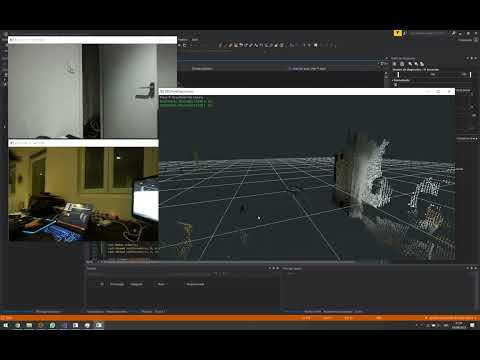

It seems the sl::FusedPointCloud does not support very well multiple retrieveSpatialMapAsync() (or extractWholeSpatialMap()) from different cameras because the updated chunks are corrupted as you can see on the following video :

Hi all,

I just updated the SDK to the latest (3.7.4) and I still have the issue while the changelog of this version indicates to have fixed it.

- Fixed spatial mapping invalid behavior when using multiple camera simultaneously

Is there something else wrong ?

Hi,

Based on your post, I have made this basic sample to reproduce the error:

int main(int argc, char **argv) {

Camera zed1;

Camera zed2;

// Set configuration parameters for the ZED

InitParameters init_parameters;

// Open the camera

init_parameters.input.setFromCameraID(0);

zed1.open(init_parameters);

init_parameters.input.setFromCameraID(1);

zed2.open(init_parameters);

POSITIONAL_TRACKING_STATE tracking_state = POSITIONAL_TRACKING_STATE::OFF;

PositionalTrackingParameters positional_tracking_parameters;

positional_tracking_parameters.enable_area_memory = false;

zed1.enablePositionalTracking(positional_tracking_parameters);

zed2.enablePositionalTracking(positional_tracking_parameters);

// Set spatial mapping parameters

SpatialMappingParameters spatial_mapping_parameters;

// Request a Point Cloud

spatial_mapping_parameters.map_type = SpatialMappingParameters::SPATIAL_MAP_TYPE::FUSED_POINT_CLOUD;

// Set mapping range, it will set the resolution accordingly (a higher range, a lower resolution)

spatial_mapping_parameters.set(SpatialMappingParameters::MAPPING_RANGE::SHORT);

spatial_mapping_parameters.set(SpatialMappingParameters::MAPPING_RESOLUTION::HIGH);

// Request partial updates only (only the latest updated chunks need to be re-draw)

spatial_mapping_parameters.use_chunk_only = true;

// Start the spatial mapping

zed1.enableSpatialMapping(spatial_mapping_parameters);

zed2.enableSpatialMapping(spatial_mapping_parameters);

int f = 0;

// Start the main loop

while (f++ < 700) {

// Grab a new image

if (zed1.grab() == ERROR_CODE::SUCCESS) {

}

if (zed2.grab() == ERROR_CODE::SUCCESS) {

}

}

// Initialize point cloud viewer

FusedPointCloud map;

zed1.extractWholeSpatialMap(map);

map.save("ZED1_Map.ply", sl::MESH_FILE_FORMAT::PLY);

map.clear();

zed2.extractWholeSpatialMap(map);

map.save("ZED2_Map.ply", sl::MESH_FILE_FORMAT::PLY);

map.clear();

// Close the ZED

zed1.close();

zed2.close();

return 0;

}

With the 3.7.4 it runs without errors and exports two point clouds at the end (tested on windows).

Tell me what errors do you have.

Hi,

Thank you for your answer.

In my use case, I would like to share the same FusedPointCloud on the two cameras for realtime mapping.

And as you can see on the youtube video I sent before, some chunks “blinks” between the two cameras. Maybe there is some conflict between the chunks indexes inside the ZED SDK ?

My bad, I was focus on the SDK error not on your specific request.

Actually the SDK can run simultaneously several cameras but they can not share data. Meaning you can not use the same map for your different cameras.

You see some flickering because each instance of the Camera update a part of the map (as it is not design to do so).

The main part you are missing here is the fact that both camera are not in the same coordinate frame, as they have both their own World. You should take a look at this sample to share the same world across your cameras.

You still won’t be able to have a single map but at least your maps will fit together and you should find a way to merge them.

Thank for your answer and pointing me the error on cameras coordinate frame.

Do you plan to allow multiple cameras mapping sharing the same FusedPointCloud in a future release ?

Or create a “fake” camera which is a combinaison of the two in a rigid body ?

Hi @SL-PY ,

I’m back to this topic.

I’m not sure to understand well how to set the two cameras in the same coordinate frame.

Could you briefly explain me how to do that ?

Do I have to recalibrate the position (using resetPositionalTracking()) of one camera on the other at regular intervals ?

Thank you.