Hi,

I’m working on a multi-camera setup using two ZED2i cameras. My goal is to fuse the point cloud data from both cameras into a single, well-aligned cloud so I can calculate the volume of an object placed within the shared space.
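
To make the goal concrete, here is a rough sketch in plain Python of the pipeline I have in mind: bring camera B's points into camera A's frame, merge the clouds, and estimate volume from a height map. Everything in it is a placeholder of mine. `transform_points`, `heightmap_volume`, and `T_a_from_b` are hypothetical names, not ZED SDK calls, and the points are made up. It also assumes the object rests on a flat floor at z = 0 with z pointing up, which may not match the coordinate system the cameras actually report.

```python
import math

def transform_points(points, T):
    """Apply a 4x4 rigid transform T (nested lists, row-major) to (x, y, z) points."""
    return [
        (
            T[0][0] * x + T[0][1] * y + T[0][2] * z + T[0][3],
            T[1][0] * x + T[1][1] * y + T[1][2] * z + T[1][3],
            T[2][0] * x + T[2][1] * y + T[2][2] * z + T[2][3],
        )
        for x, y, z in points
    ]

def heightmap_volume(points, cell, floor_z=0.0):
    """Sum (max height above the floor) * (cell area) over occupied XY grid cells."""
    heights = {}
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        h = z - floor_z
        if h > heights.get(key, 0.0):
            heights[key] = h
    return sum(heights.values()) * cell * cell

# Made-up demo data: the top face of a 1.0 x 1.0 x 0.5 m box on the floor.
cell = 0.1
top = [((i + 0.5) * cell, (j + 0.5) * cell, 0.5) for i in range(10) for j in range(10)]
cloud_a = top[:50]                                   # half seen by camera A (reference frame)
cloud_b = [(x - 2.0, y, z) for x, y, z in top[50:]]  # other half, expressed in camera B's frame

# Hypothetical extrinsics: camera B's frame is shifted 2 m along x, with no rotation.
T_a_from_b = [
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

fused = cloud_a + transform_points(cloud_b, T_a_from_b)
volume = heightmap_volume(fused, cell)
print(f"fused points: {len(fused)}, estimated volume: {volume:.3f} m^3")
```

With a height map, any error in the extrinsics shifts one camera's points into the wrong grid cells, so the volume is only as good as the alignment.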

Here’s what I’m doing:

- Calibration:

  - I used ZED360 for calibration.

  - Flip mode: Auto

  - Depth mode: Neural (instead of Ultra)

  - Both cameras are connected locally via USB 3.0 on the same machine.

- Fusion code:

  - I’m using the body tracking fusion sample from the official ZED SDK GitHub: zed-sdk/body tracking/multi-camera/cpp at master · stereolabs/zed-sdk · GitHub

  - Resolution: 1080p @ 30 FPS on both cameras.

  - Depth mode: NEURAL_PLUS

Problem:

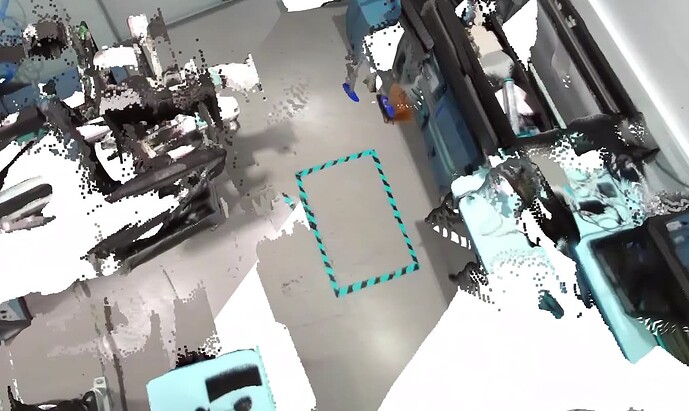

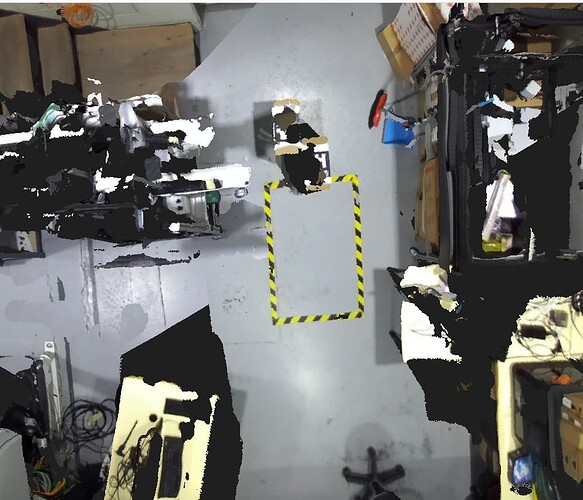

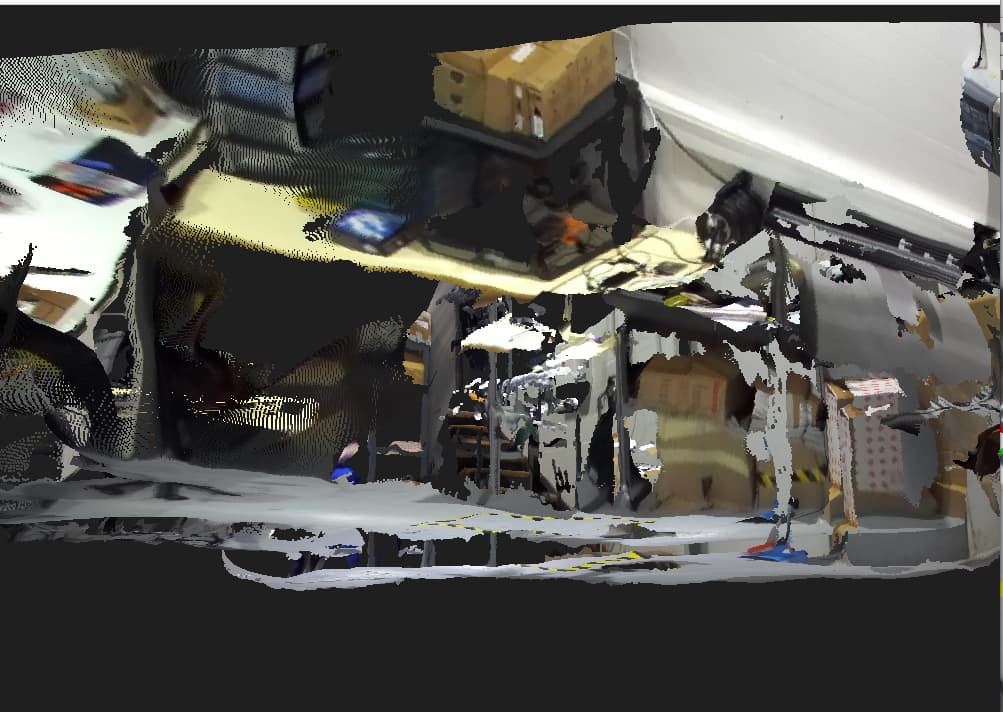

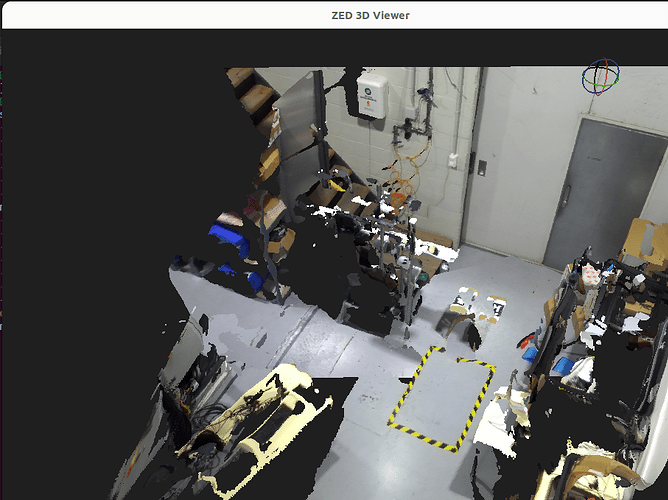

The point clouds from the two cameras are not aligning correctly after fusion. The fused cloud seems disjointed or misaligned, making volume estimation unreliable.

I’ve attached images showing the issue (see above).

Questions:

- Is this the correct approach for creating a unified point cloud for volume estimation?

- Could this issue be caused by:

  - Improper calibration?

  - USB bandwidth limits?

  - Using Neural instead of Ultra depth mode?

  - Camera placement or orientation?

- Are there additional steps or settings I should be using to improve the accuracy of fusion?

Any suggestions or troubleshooting tips would be appreciated!

In the images above, you can see that the taped box outlines from the two cameras should coincide, but they do not.
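
To put a number on the mismatch instead of judging it by eye, I could pick the corners of the taped box in each camera's cloud (after both are in the common frame) and compute the RMS distance between corresponding corners. Below is a minimal sketch in plain Python. `rms_misalignment` is a hypothetical helper of mine, and the corner coordinates are made up purely for illustration.

```python
import math

def rms_misalignment(corners_a, corners_b):
    """RMS distance between corresponding 3D points expressed in the same frame."""
    if len(corners_a) != len(corners_b) or not corners_a:
        raise ValueError("need two non-empty point lists of equal length")
    squared = sum(math.dist(p, q) ** 2 for p, q in zip(corners_a, corners_b))
    return math.sqrt(squared / len(corners_a))

# Made-up example: camera B sees the same square shifted by 3 cm in x and 4 cm in y.
corners_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
corners_b = [(x + 0.03, y + 0.04, z) for x, y, z in corners_a]

error_m = rms_misalignment(corners_a, corners_b)
print(f"RMS corner misalignment: {error_m * 100:.1f} cm")
```

Tracking this value before and after recalibrating, or after changing depth mode or camera placement, should show which factor actually affects the alignment.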