Hi all,

I’m using the custom object detector with a model I trained myself, and I’m trying to extract the real-world 3D coordinates of the detections. I haven’t changed anything in the code; I’m simply loading my custom model.

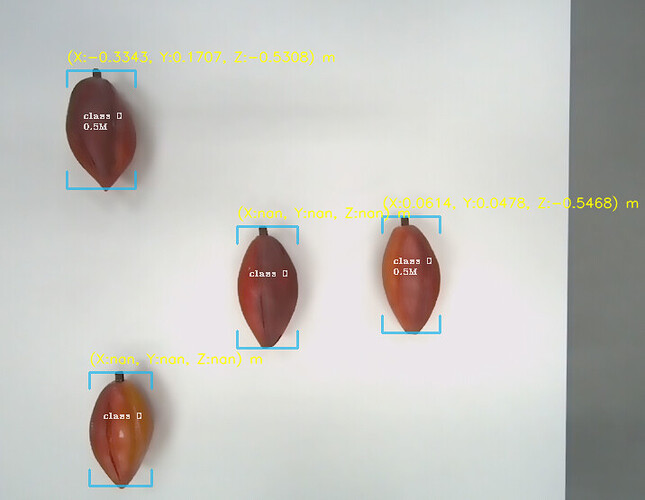

Here’s what I’ve observed:

- When the detected object is in Y > 0, the detector finds it, tracks it, and returns valid coordinates. Once tracking starts, I can move the object anywhere in the image, and it continues to work correctly.
- When the object is in Y < 0, it gets detected, but it’s not tracked, and the coordinates are returned as NaN. If I then move the object into Y > 0, tracking starts and the coordinates are valid again.
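In case it helps anyone reproduce this, here is a minimal sketch of how the pattern above could be logged per frame. It does not call the SDK: the function name `summarize_nan_by_image_half` and the input layout (a bounding-box center row in pixels paired with an `(x, y, z)` position) are hypothetical, and it assumes that the upper half of the image roughly corresponds to Y > 0 with a level camera and Y pointing up.

```python
import math


def summarize_nan_by_image_half(detections, image_height):
    """Count valid vs. NaN 3D positions for the upper and lower image halves.

    detections: iterable of (bbox_center_y_px, (x, y, z)) tuples.
    This layout is hypothetical; adapt it to whatever the detector returns.
    """
    summary = {
        "upper": {"valid": 0, "nan": 0},
        "lower": {"valid": 0, "nan": 0},
    }
    for center_y_px, position in detections:
        # Pixel rows grow downward, so a small row index means the upper half.
        half = "upper" if center_y_px < image_height / 2 else "lower"
        # A position counts as invalid if any of its components is NaN.
        is_nan = any(math.isnan(c) for c in position)
        summary[half]["nan" if is_nan else "valid"] += 1
    return summary


if __name__ == "__main__":
    nan = float("nan")
    frame = [(100, (0.1, 0.5, -2.0)), (900, (nan, nan, nan))]
    print(summarize_nan_by_image_half(frame, image_height=1080))
```

If the NaN counts land only in the lower half over many frames, that would confirm the behavior depends on where the object sits in the image rather than on the object itself.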

I’d like to be able to obtain valid coordinates across the entire image, not just in the Y > 0 region.

Has anyone experienced this issue, or does anyone know what might be causing it? Is there a parameter or setting I need to adjust to ensure consistent coordinate extraction?

I’m using the right-handed coordinate system with Y pointing up, and I have already tried disabling tracking.