Hi,

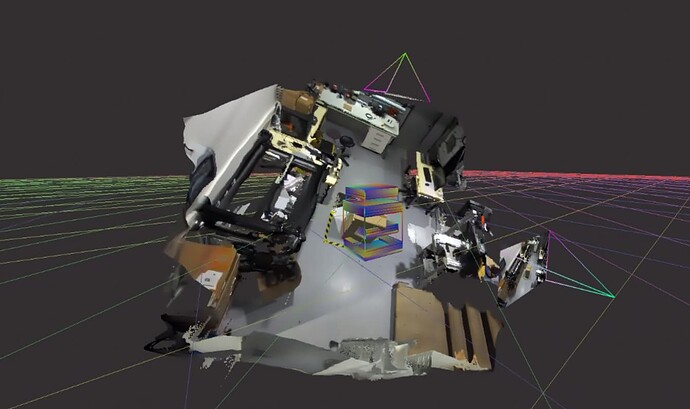

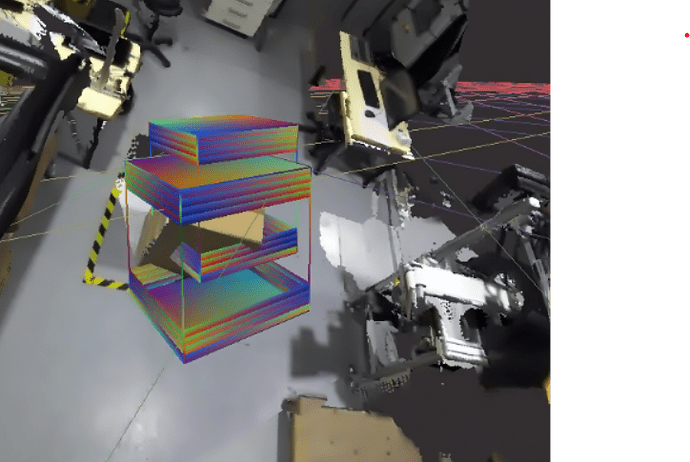

I’m working with two ZED2i cameras and have successfully set up point cloud fusion using the ZED SDK Fusion module. The fusion is running well.

As the next step, I’m integrating YOLOv8 custom object detection using TensorRT. For this, I’ve followed the official Stereolabs tutorial:

https://github.com/stereolabs/zed-yolo/tree/master/cpp_tensorrt_yolo_onnx

When I run this detection code with a single ZED2i camera, everything works perfectly — including YOLO inference, object ingestion via ingestCustomBoxObjects, and 2D/3D object tracking.

However, when I integrate the same detection logic into my multi-camera fusion pipeline, I’m facing an issue with the camera read loop.

In the original YOLO example, zed.grab() is used, but in the multi-camera fusion subscriber mode, the ZED SDK terminal output suggests using zed.read() instead.

Here’s a simplified version of my code:

// Previously used in YOLO example// if (zed.grab(rt) == sl::ERROR_CODE::SUCCESS)

// In fusion mode I switched to:if (zed.read() == sl::ERROR_CODE::SUCCESS) {zed.retrieveImage(left_sl, sl::VIEW::LEFT, sl::MEM::GPU, sl::Resolution(0, 0), detector.stream);

// Run YOLO inference

auto detections = detector.run(left_sl, display_resolution.height, display_resolution.width, CONF_THRESH);

// Move image from GPU to CPU for visualization

left_sl.updateCPUfromGPU(zed_cuda_stream); // Stream obtained from zed.getCUDAStream()

cudaDeviceSynchronize();

}

When using zed.read(), I encounter a CUDA pipeline error. Specifically, the error message is:

./zed_yolo_customObject FusionConfig.json

Try to open ZED 36787928..[2025-08-07 11:26:16 UTC][ZED][INFO] Logging level INFO

[2025-08-07 11:26:17 UTC][ZED][INFO] [Init] Depth mode: NEURAL PLUS

[2025-08-07 11:26:18 UTC][ZED][INFO] [Init] Camera successfully opened.

[2025-08-07 11:26:18 UTC][ZED][INFO] [Init] Sensors FW version: 777

[2025-08-07 11:26:18 UTC][ZED][INFO] [Init] Camera FW version: 1523

[2025-08-07 11:26:18 UTC][ZED][INFO] [Init] Video mode: HD1080@30

[2025-08-07 11:26:18 UTC][ZED][INFO] [Init] Serial Number: S/N 36787928

[2025-08-07 11:26:18 UTC][ZED][WARNING] [Init] Self-calibration skipped. Scene may be occluded or lack texture. (Error code: 0x01)

Using instance_id = 36787928

[2025-08-07 11:26:18 UTC][ZED][WARNING] IMU Fusion is not handled in FLIP mode, IMU Fusion will be disabled

Inference size : 1024x1024

YOLOV8/YOLOV5 format

. ready !

Saved intrinsics to ../intrinsics_depth/0.txt

Try to open ZED 39319354..[2025-08-07 11:26:19 UTC][ZED][INFO] Logging level INFO

[2025-08-07 11:26:19 UTC][ZED][INFO] [Init] Depth mode: NEURAL PLUS

[2025-08-07 11:26:20 UTC][ZED][INFO] [Init] Camera successfully opened.

[2025-08-07 11:26:20 UTC][ZED][INFO] [Init] Sensors FW version: 777

[2025-08-07 11:26:20 UTC][ZED][INFO] [Init] Camera FW version: 1523

[2025-08-07 11:26:20 UTC][ZED][INFO] [Init] Video mode: HD1080@30

[2025-08-07 11:26:20 UTC][ZED][INFO] [Init] Serial Number: S/N 39319354

Using instance_id = 39319354

[2025-08-07 11:26:21 UTC][ZED][WARNING] IMU Fusion is not handled in FLIP mode, IMU Fusion will be disabled

Inference size : 1024x1024

YOLOV8/YOLOV5 format

. ready !

Saved intrinsics to ../intrinsics_depth/1.txt

ZED CUDA STREAM: 0x5bf21e1b1a40

ZED CUDA STREAM: 0x5bf223a2c870

[2025-08-07 11:26:21 UTC][FUSION][INFO] Fusion Logging level INFO

Initialized OpenGL Viewer!

Viewer Shortcuts

- 'q': quit the application

- 'r': switch on/off for raw skeleton display

- 'p': switch on/off for live point cloud display

- 'c': switch on/off point cloud display with raw color

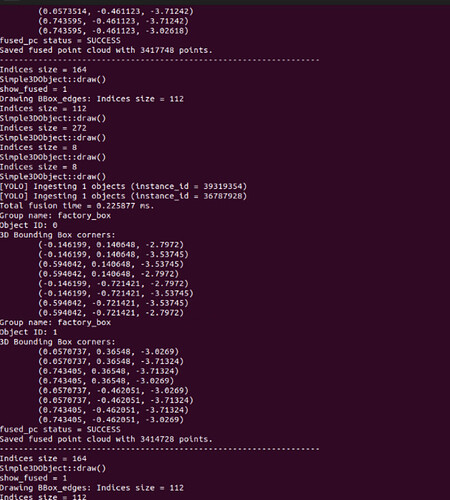

[YOLO] Ingesting 2 objects (instance_id = 39319354)

[2025-08-07 11:26:21 UTC][ZED][WARNING] Camera::ingestCustomBoxObjects: Invalid instance_id value

[2025-08-07 11:26:21 UTC][ZED][WARNING] INVALID FUNCTION CALL in sl::ERROR_CODE sl::Camera::ingestCustomBoxObjects(const std::vector<sl::CustomBoxObjectData>&, unsigned int)

CUDA error at /builds/sl/ZEDKit/lib/src/sl_core/utils/util.cu:482 code=1(cudaErrorInvalidValue) "cudaCreateTextureObject(tex, &resDesc, &texDesc, NULL)"

[YOLO] Ingesting 1 objects (instance_id = 36787928)

[2025-08-07 11:26:21 UTC][ZED][WARNING] Camera::ingestCustomBoxObjects: Invalid instance_id value

[2025-08-07 11:26:21 UTC][ZED][WARNING] INVALID FUNCTION CALL in sl::ERROR_CODE sl::Camera::ingestCustomBoxObjects(const std::vector<sl::CustomBoxObjectData>&, unsigned int)

CUDA error at /builds/sl/ZEDKit/lib/src/sl_core/utils/util.cu:482 code=1(cudaErrorInvalidValue) "cudaCreateTextureObject(tex, &resDesc, &texDesc, NULL)"

in sl::ERROR_CODE sl::Mat::updateCPUfromGPU(cudaStream_t) : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::updateCPUfromGPU(cudaStream_t) : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::updateCPUfromGPU(cudaStream_t) : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::updateCPUfromGPU(cudaStream_t) : Err [700]: an illegal memory access was encountered.

CUDA error at ../src/sl_zed/DetectionHandler.cpp:408 code=700(cudaErrorIllegalAddress) "cudaStreamWaitEvent(cu_strm, ev_depth, 0)"

CUDA error at ../src/sl_zed/DetectionHandler.cpp:408 code=700(cudaErrorIllegalAddress) "cudaStreamWaitEvent(cu_strm, ev_depth, 0)"

in sl::ERROR_CODE sl::Mat::copyTo(sl::Mat&, sl::COPY_TYPE, cudaStream_t) const : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::copyTo(sl::Mat&, sl::COPY_TYPE, cudaStream_t) const : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::copyTo(sl::Mat&, sl::COPY_TYPE, cudaStream_t) const : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::copyTo(sl::Mat&, sl::COPY_TYPE, cudaStream_t) const : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::updateCPUfromGPU(cudaStream_t) : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::updateCPUfromGPU(cudaStream_t) : Err [700]: an illegal memory access was encountered.

CUDA error at ../src/sl_zed/DetectionHandler.cpp:452 code=700(cudaErrorIllegalAddress) "cudaStreamWaitEvent(cu_strm, ev_od, 0)"

CUDA error at ../src/sl_zed/DetectionHandler.cpp:452 code=700(cudaErrorIllegalAddress) "cudaStreamWaitEvent(cu_strm, ev_od, 0)"

in void sl::Mat::alloc(size_t, size_t, sl::MAT_TYPE, sl::MEM) : Err [700]: an illegal memory access was encountered.

in sl::ERROR_CODE sl::Mat::copyTo(sl::Mat&, sl::COPY_TYPE, cudaStream_t) const : Err [12]: invalid pitch argument.

in sl::ERROR_CODE sl::Mat::copyTo(sl::Mat&, sl::COPY_TYPE, cudaStream_t) const : Err [12]: invalid pitch argument.

CUDA error at ../src/sl_zed/DetectionHandler.cpp:247 code=700(cudaErrorIllegalAddress) "cudaEventRecord(ev_image, sdk_stream)"

Segmentation fault (core dumped)

This indicates a problem with the CUDA stream or how the image is being accessed from the GPU.

My understanding so far:

-

In Fusion subscriber mode,

zed.grab()is not supported, sozed.read()must be used. -

However, the stream returned by

zed.getCUDAStream()might not be valid in this mode, leading to the CUDA error. -

It appears that

zed.read()does not expose image buffers in the same way asgrab(), especially when it comes to stream synchronization and GPU memory access.

Questions:

-

What is the correct way to retrieve and process GPU images in Fusion subscriber mode when using

zed.read()? -

Is

zed.getCUDAStream()valid for use withread()in a Fusion setup? -

Should I avoid accessing GPU memory directly and instead retrieve images in CPU memory when using

read()? -

Is there a recommended way to run GPU inference (like YOLO) on images from

read()without causing CUDA resource errors?

I’d appreciate any clarification or advice on how to correctly handle GPU image access in a multi-camera fusion setup when using zed.read() alongside CUDA-based YOLO inference.