Hello Stereolabs team and community!

I’m developing a game in which players throw physical balls at targets on a wall, and I thought about using the depth map functionality to detect when a ball hits a target. If I map the targets beforehand and know where the ball hit the wall, I can tell whether it hit a target.
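For context, the hit test itself is simple once the impact point is known. Here is a minimal Python sketch. It assumes the camera is fixed and that each target has been mapped beforehand as an axis-aligned rectangle in image pixel coordinates. `Target` and `hit_target` are hypothetical names for illustration and are not part of the ZED plugin.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Target:
    """Hypothetical target, mapped beforehand as a rectangle in image pixels."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def hit_target(impact: Tuple[float, float], targets: Iterable[Target]) -> Optional[str]:
    """Return the name of the first target containing the impact point, or None."""
    x, y = impact
    for target in targets:
        if target.contains(x, y):
            return target.name
    return None


targets = [Target("left", 100, 200, 300, 400), Target("right", 1500, 200, 1700, 400)]
print(hit_target((150, 250), targets))   # a hit on "left"
print(hit_target((1000, 250), targets))  # a miss
```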

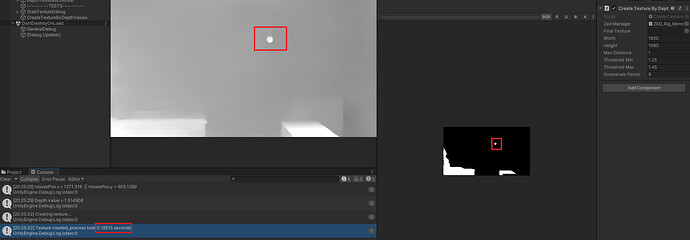

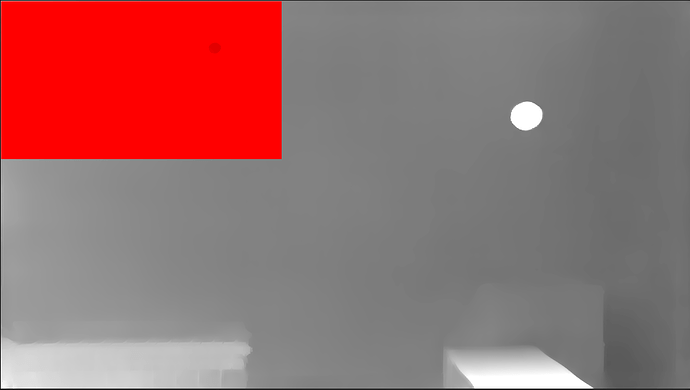

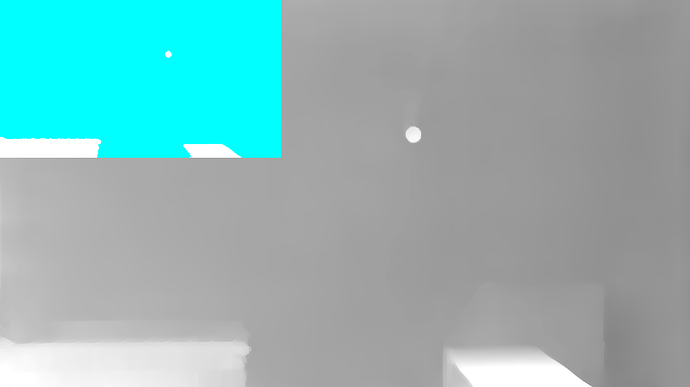

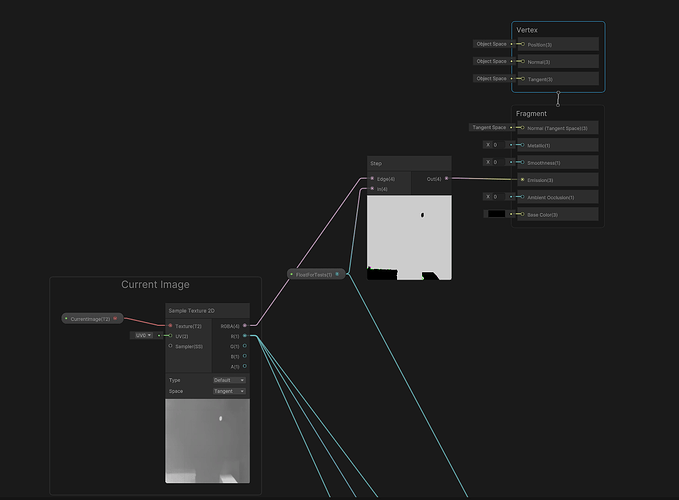

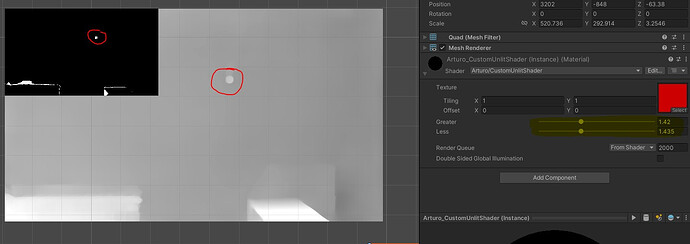

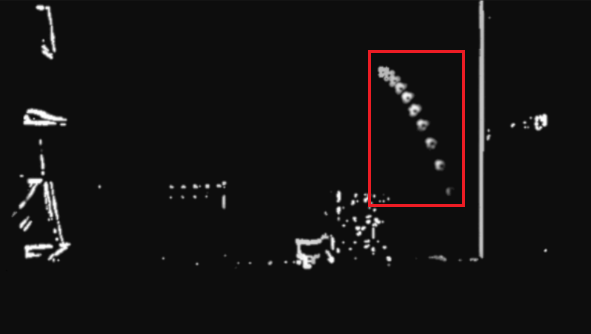

My initial implementation used shaders. For the tests, I grabbed the texture inside ZED_Rig_Mono->Camera_Left->Frame and set it on a custom shader. In that shader I tried to keep only the pixels near the wall, but the output was somewhat unstable and imprecise. I think this was partly due to the depth normalization, which makes the whole depth map change when a new object enters the camera's view. (See the image attached to this post for a very clear example; thanks @Neeklo!)

In that image, the red square is the ball heading toward the wall.
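To illustrate why normalization makes thresholding unstable, here is a small Python sketch. It assumes a simple min-max normalization, which may not be exactly what the ZED display texture uses, and the depth values are made up. The same wall pixel gets a different normalized value as soon as a closer object (the ball) enters the frame, while a threshold on metric depth gives the same answer in both cases.

```python
from typing import List, Sequence


def normalize(depths: Sequence[float]) -> List[float]:
    """Min-max normalize depths to [0, 1] (an assumed display normalization)."""
    lo, hi = min(depths), max(depths)
    return [(d - lo) / (hi - lo) for d in depths]


def near_wall(depths: Sequence[float], wall: float, tolerance: float) -> List[bool]:
    """Threshold on metric depth, independent of whatever else is in view."""
    return [abs(d - wall) <= tolerance for d in depths]


scene = [2.9, 3.0, 3.1]      # metres; index 1 is the wall pixel we care about
with_ball = scene + [1.0]    # a ball appears 1 m from the camera

print(normalize(scene)[1])       # ~0.5
print(normalize(with_ball)[1])   # ~0.952: same pixel, different value
print(near_wall(scene, 3.0, 0.05)[1], near_wall(with_ball, 3.0, 0.05)[1])  # True True
```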

So I tried a second approach: building my own depth map from the real (metric) depth values. But the only method I found was ZEDCamera->GetDepthValue, which retrieves the real depth value of a single pixel per call. At my resolution of 1920x1080, iterating over all of those pixels takes around 11 seconds. Downscaling the final texture by a factor of eight gave me something that could work, taking only 0.165 seconds.
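For reference, 1920x1080 is 2,073,600 depth values per frame. Sampling every eighth pixel along each axis leaves 240x135 = 32,400, roughly 64 times fewer, which is broadly in line with the drop from 11 s to 0.165 s. This assumes "a factor of eight" applies per axis, and the real texture scaling may filter pixels rather than skip them. A minimal Python sketch of strided downsampling over a flat, row-major buffer (`downsample` is a hypothetical helper):

```python
from typing import List


def downsample(depth: List[float], width: int, height: int, factor: int) -> List[float]:
    """Keep every `factor`-th pixel along each axis of a row-major buffer."""
    out: List[float] = []
    for y in range(0, height, factor):
        row_start = y * width
        # Slice one row with a step, skipping (factor - 1) pixels each time.
        out.extend(depth[row_start:row_start + width:factor])
    return out


full = [0.0] * (1920 * 1080)
print(len(full), len(downsample(full, 1920, 1080, 8)))  # 2073600 32400
```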

But I was wondering, is there an easier and quicker way to do this?

Something like GetDepthValue, but returning the whole depth map as an array of floats instead of one pixel at a time, to speed up the process.
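For what it's worth, the ZED SDK's C++ and Python APIs can fetch the whole depth map in one call (`retrieveMeasure` / `retrieve_measure` with `MEASURE::DEPTH` into an `sl::Mat`), but I haven't checked what the Unity plugin exposes. Assuming a flat, row-major array of metric depth values like that, plus a reference array captured once with the wall empty, detecting the impact could look like the minimal Python sketch below. `find_impact` and its `near`/`far` thresholds are hypothetical and would need tuning to the ball size and the depth noise.

```python
import math
from typing import Optional, Sequence, Tuple


def find_impact(
    depth: Sequence[float],
    reference: Sequence[float],
    width: int,
    near: float = 0.01,
    far: float = 0.15,
    min_pixels: int = 3,
) -> Optional[Tuple[float, float]]:
    """Return the (x, y) pixel centroid of points just in front of the empty wall.

    `depth` and `reference` are flat, row-major buffers in the same units
    (e.g. metres). A pixel counts when it is between `near` and `far` closer
    to the camera than the reference at the same pixel. Returns None when
    fewer than `min_pixels` pixels qualify.
    """
    sum_x = sum_y = 0.0
    count = 0
    for i, (d, ref) in enumerate(zip(depth, reference)):
        # Skip invalid measurements (NaN or infinity).
        if not (math.isfinite(d) and math.isfinite(ref)):
            continue
        offset = ref - d  # how far in front of the wall this pixel is
        if near <= offset <= far:
            sum_x += i % width
            sum_y += i // width
            count += 1
    if count < min_pixels:
        return None
    return (sum_x / count, sum_y / count)
```

The returned centroid can then be checked against the mapped targets. With NumPy, the same mask becomes a single vectorized comparison, which should be much faster than a per-pixel Python loop.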

Thanks!