Using the formula you provided earlier, is there an example of how to calculate the (x, y, z) coordinates corresponding to pixel coordinates (u, v), and how can I implement this in C++?

You can find the explanation here:

You must also subscribe to the camera_info topic, which provides all the required parameters.
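For reference, the intrinsic parameters the formula needs (fx, fy, cx, cy) are stored in the `K` field of a `sensor_msgs/CameraInfo` message as a row-major 3x3 matrix. The sketch below is plain C++ so it compiles without ROS. The `Intrinsics` struct and `intrinsicsFromK` helper are hypothetical names, and the `std::array<double, 9>` argument stands in for the nine values of `msg->K` (the field is named `k` in ROS 2).

```cpp
#include <array>

// Hypothetical container for the pinhole intrinsics used by the formula.
struct Intrinsics {
    double fx;  // focal length along x, in pixels
    double fy;  // focal length along y, in pixels
    double cx;  // principal point x, in pixels
    double cy;  // principal point y, in pixels
};

// K is the row-major 3x3 intrinsic matrix from sensor_msgs/CameraInfo:
//   [fx  0 cx]
//   [ 0 fy cy]
//   [ 0  0  1]
inline Intrinsics intrinsicsFromK(const std::array<double, 9>& K) {
    return Intrinsics{K[0], K[4], K[2], K[5]};
}
```

In a ROS callback you would copy the nine values of the received camera_info `K` into the array once, since the intrinsics do not change while the camera runs.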

For C++ examples, I recommend searching online, because this is a ROS question rather than a ZED SDK one.

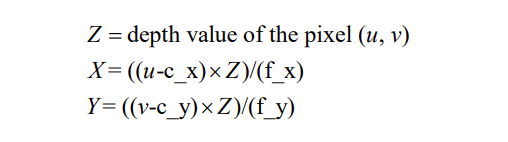

where Z is the depth value of pixel (u, v) taken from the depth map.
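Assuming the image above shows the standard pinhole back-projection used with ROS camera_info intrinsics ($f_x$, $f_y$ are the focal lengths and $c_x$, $c_y$ the principal point, all in pixels), the formula reads:

$$
X = \frac{(u - c_x)\,Z}{f_x}, \qquad Y = \frac{(v - c_y)\,Z}{f_y}, \qquad Z = \text{depth}(u, v)
$$

The result is expressed in the camera's optical frame (x right, y down, z forward). For example, with $f_x = 500$, $c_x = 320$, $u = 420$ and $Z = 2\,\text{m}$: $X = (420 - 320)\cdot 2 / 500 = 0.4\,\text{m}$.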

How can I obtain the Z coordinate corresponding to a (u, v) pixel in zed-ros-wrapper? Which topic should I use?

Can I use the depth_registered topic, and how do I obtain the corresponding Z value from it?

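```cpp
#include <cv_bridge/cv_bridge.h>
#include <sensor_msgs/Image.h>
#include <sensor_msgs/image_encodings.h>

// Callback for the depth topic (e.g. /zed/zed_node/depth/depth_registered)
void depthCallback(const sensor_msgs::ImageConstPtr& msg)
{
    // Convert the ROS image to an OpenCV matrix of 32-bit floats
    cv_bridge::CvImagePtr cv_ptr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::TYPE_32FC1);
    cv::Mat depth_image = cv_ptr->image;

    int u = 320; // Example x (column) coordinate
    int v = 240; // Example y (row) coordinate

    // Get the depth value (Z) at pixel (u, v); cv::Mat::at takes (row, col), i.e. (v, u)
    float Z_value = depth_image.at<float>(v, u);
}
```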

I found a way to do this. Is it correct?

/zed/zed_node/depth/camera_info

/zed/zed_node/left_raw/camera_info

/zed/zed_node/left/camera_info

/zed/zed_node/rgb/camera_info

/zed/zed_node/rgb_raw/camera_info

/zed/zed_node/right/camera_info

There are multiple camera_info topics in the ZED package. Which camera_info topic should I use? I take the (u, v) pixel from the left camera image and want to obtain the corresponding (x, y, z) coordinates.

I have asked you many questions, and you have always patiently answered them. Thank you very much!

You are on the right track.

You should use the camera_info topic that belongs to the image topic you are subscribing to.

This means that for depth you must use

/zed/zed_node/depth/camera_info
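A minimal sketch of the formula in C++, assuming the depth image is 32FC1 in meters (the encoding requested in the snippet above) and that fx, fy, cx, cy come from /zed/zed_node/depth/camera_info. The `Intrinsics` and `Point3` types and the `backProject` function are hypothetical names; the function refuses pixels whose depth is not a finite positive number instead of producing inf or NaN coordinates.

```cpp
#include <cmath>

// Hypothetical types used only in this sketch.
struct Intrinsics {
    double fx, fy, cx, cy;  // from the K matrix of the camera_info message
};

struct Point3 {
    double x, y, z;  // meters, camera optical frame (x right, y down, z forward)
};

// Back-project pixel (u, v) with depth Z using the pinhole model:
//   X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy
// Returns false (and leaves `out` untouched) when Z is NaN, +/-inf or <= 0,
// because such pixels have no valid depth.
bool backProject(int u, int v, float Z, const Intrinsics& K, Point3& out) {
    if (!std::isfinite(Z) || Z <= 0.0f) {
        return false;
    }
    const double z = static_cast<double>(Z);
    out.x = (u - K.cx) * z / K.fx;
    out.y = (v - K.cy) * z / K.fy;
    out.z = z;
    return true;
}
```

Inside the depth callback it would be called as `backProject(u, v, depth_image.at<float>(v, u), K, p)`, where `K` holds the values read from the depth camera_info.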

Thank you very much!

There is another problem that has been bothering me all along: when I use the above formula to calculate the (x, y, z) coordinates, the 3D coordinates computed for some (u, v) pixels come out as inf, and sometimes as NAN. What is the reason for this? Is the depth at those pixel positions missing because of a hardware limitation when the depth is acquired? Is there any way to solve this problem? Thank you!

Not all the pixels of the depth map contain valid information.

This is caused by failed stereo matching or by occlusions.

How can I solve this problem? Thank you.


On September 13, 2024, at 7:06 PM, “Walter Lucetti (Stereolabs)” support@stereolabs.com wrote:

You must “filter out” (ignore) inf and nan values.

This is a standard process when working with depth maps.
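A minimal sketch of "filtering out" invalid samples. To compile without OpenCV it reads from a plain row-major `std::vector<float>` (a hypothetical stand-in for `depth_image.at<float>(v, u)`); `isValidDepth` and `tryGetDepth` are illustrative names, and treating zero or negative depth as invalid is an assumption of this sketch.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// A depth sample is usable only if it is a finite, positive number.
// NaN and +/-inf mark pixels without a valid stereo match (e.g. occlusions).
inline bool isValidDepth(float z) {
    return std::isfinite(z) && z > 0.0f;
}

// Read the depth at (u, v) from a row-major width x height float buffer.
// Returns false for out-of-bounds or invalid pixels so the caller can skip them.
bool tryGetDepth(const std::vector<float>& depth, int width, int height,
                 int u, int v, float& out) {
    if (u < 0 || v < 0 || u >= width || v >= height) {
        return false;
    }
    const float z = depth[static_cast<std::size_t>(v) * width + u];
    if (!isValidDepth(z)) {
        return false;
    }
    out = z;
    return true;
}
```

With `cv::Mat` the same check is simply `std::isfinite(Z_value) && Z_value > 0.0f` before applying the formula.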

For the point (x, y) I need, the conversion to the corresponding 3D coordinates gives NAN or inf. If I ignore these inf or NAN values, I cannot obtain the coordinates of that point, and my work cannot proceed.

You can take the depth values of nearby points and average them to estimate a valid depth value.
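A minimal sketch of that suggestion, assuming a row-major float depth buffer (a stand-in for `cv::Mat` so it compiles without OpenCV). `estimateDepth` is a hypothetical helper: it averages the valid (finite, positive) samples in a (2r+1) x (2r+1) window around (u, v), where the radius `r` is a tuning choice, and reports failure when the whole window is invalid.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Estimate a depth for (u, v) by averaging the valid samples in a
// (2r+1) x (2r+1) window centered on it, clipped to the image borders.
// Returns false if no valid neighbor exists, e.g. inside a large occluded area.
bool estimateDepth(const std::vector<float>& depth, int width, int height,
                   int u, int v, int r, float& out) {
    double sum = 0.0;
    int count = 0;
    for (int dv = -r; dv <= r; ++dv) {
        for (int du = -r; du <= r; ++du) {
            const int x = u + du;
            const int y = v + dv;
            if (x < 0 || y < 0 || x >= width || y >= height) {
                continue;  // skip pixels outside the image
            }
            const float z = depth[static_cast<std::size_t>(y) * width + x];
            if (std::isfinite(z) && z > 0.0f) {  // ignore NaN, +/-inf and <= 0
                sum += z;
                ++count;
            }
        }
    }
    if (count == 0) {
        return false;
    }
    out = static_cast<float>(sum / count);
    return true;
}
```

The estimated value can then be used as Z in the formula. Near object edges the window may mix foreground and background depths, so a median of the valid samples (instead of the mean) is a common, more robust alternative.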