Hello,

I have a question about the principle the ZED 2i uses to calculate the disparity map and its depth map. Is it based on SAD (sum of absolute differences)? Have the acquired images been corrected for distortion, and is the depth map synchronized with them?

Hi @liwei1379

Welcome to the Stereolabs community.

The ZED SDK uses different methods to extract the disparity map, depending on the selected DEPTH mode. We cannot disclose information about the processing behind our stereo-matching algorithms because they are the result of more than 10 years of R&D in this field.

What I can say is that the NEURAL DEPTH mode combines standard stereo processing with AI inference, while the other DEPTH modes use improved algorithms based on state-of-the-art stereo processing methods.
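Since the question mentions SAD: the ZED SDK's own matching method is not disclosed, so what follows is only a minimal sketch of classic SAD block matching for reference, not what the SDK does. It assumes a rectified, 8-bit grayscale pair stored row-major; `sadDisparity`, `maxDisparity` and `radius` are illustrative names.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Classic SAD (sum of absolute differences) block matching on a rectified
// grayscale pair. For each left pixel (x, y), the chosen disparity d is the
// one minimizing the SAD between the (2*radius+1)^2 window around (x, y) in
// the left image and the window around (x - d, y) in the right image.
// Border pixels, where the window does not fit, are left at 0.
std::vector<int> sadDisparity(const std::vector<std::uint8_t>& left,
                              const std::vector<std::uint8_t>& right,
                              int width, int height,
                              int maxDisparity, int radius) {
    std::vector<int> disparity(static_cast<std::size_t>(width) * height, 0);
    for (int y = radius; y < height - radius; ++y) {
        for (int x = radius; x < width - radius; ++x) {
            long bestCost = std::numeric_limits<long>::max();
            int bestD = 0;
            // Only test disparities that keep the right-image window in bounds.
            for (int d = 0; d <= maxDisparity && x - d - radius >= 0; ++d) {
                long cost = 0;
                for (int dy = -radius; dy <= radius; ++dy) {
                    for (int dx = -radius; dx <= radius; ++dx) {
                        int l = left[(y + dy) * width + (x + dx)];
                        int r = right[(y + dy) * width + (x + dx - d)];
                        cost += std::abs(l - r);
                    }
                }
                if (cost < bestCost) {  // strict '<': ties keep the smaller d
                    bestCost = cost;
                    bestD = d;
                }
            }
            disparity[y * width + x] = bestD;
        }
    }
    return disparity;
}
```

Plain SAD like this is sensitive to brightness differences between the two cameras and fails on textureless surfaces, which is why production systems add cost aggregation, filtering or learned components on top.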

The acquired images are rectified by the ZED SDK thanks to the very precise factory calibration process performed using robotic arms.
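Rectification is what makes the depth computation simple: after it, a scene point appears on the same row in both images, and its disparity d is the horizontal offset between the two positions. Under a pinhole model, depth then follows from triangulation as Z = f·B/d. A minimal sketch, assuming f is the focal length in pixels and B the baseline in meters; the function name and the numbers in the usage note are illustrative, not ZED 2i calibration values.

```cpp
#include <cmath>
#include <limits>

// Depth from disparity for a rectified stereo pair (pinhole model):
// Z = f * B / d, with f the focal length in pixels, B the baseline in
// meters and d the disparity in pixels. A non-positive (or NaN) disparity
// has no finite depth, so NaN is returned to mark the pixel as invalid.
double depthFromDisparity(double focalPx, double baselineM, double disparityPx) {
    if (!(disparityPx > 0.0)) {
        return std::numeric_limits<double>::quiet_NaN();
    }
    return focalPx * baselineM / disparityPx;
}
```

For example, `depthFromDisparity(700.0, 0.12, 42.0)` gives about 2.0 m. Because Z is inversely proportional to d, a one-pixel disparity error costs much more depth accuracy on far objects than on near ones.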

RGB and Depth map information are natively synchronized.

Hi

Are the RGB image and the depth map synchronized after the acquired images are rectified?

They are synchronized after the undistortion process.

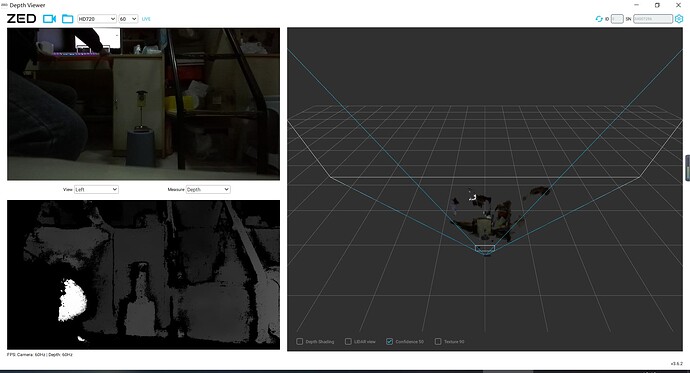

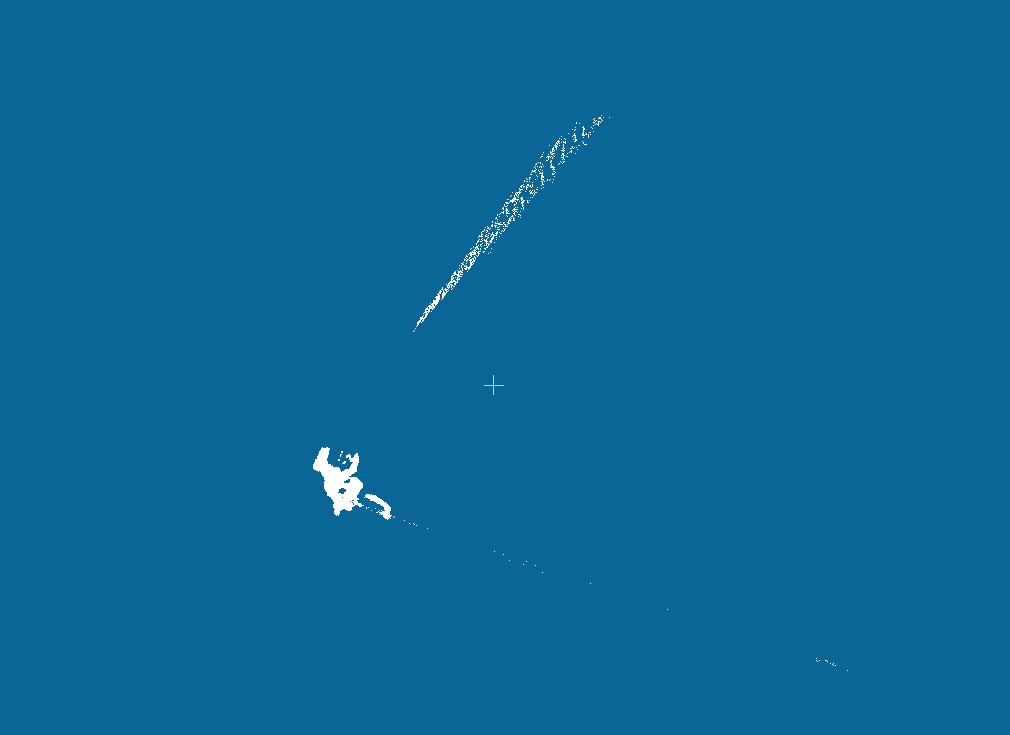

I once calibrated the ZED 2i myself. Probably because of that, the point cloud of a flat plane has become wavy, and the point cloud is now unusable. Do I need to send the camera back for recalibration?

Normally you do not need to use the ZED Calibration tool to calibrate your camera.

The factory calibration process is more precise.

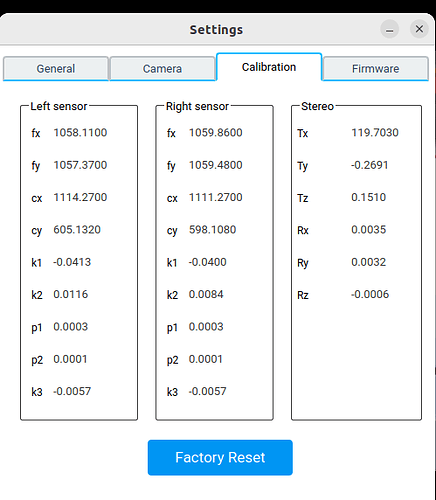

You can restore the factory calibration by opening ZED Explorer and clicking the Factory Reset button in the Calibration panel of the Settings window.
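After restoring the factory calibration, one quick way to check that the point cloud of a flat surface is no longer wavy is to fit a plane to it and look at the RMS residual. A minimal sketch, assuming the surface roughly faces the camera (so depth z can be written as a function of x and y) and using a hypothetical `Point3` type; what residual is acceptable depends on distance and depth mode.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point3 { float x, y, z; };  // hypothetical minimal point type

// Fits z = a*x + b*y + c by least squares and returns the RMS of the
// residuals, in the same unit as the input (e.g. meters).
// Non-finite points (invalid depth) are skipped. Returns NaN when fewer
// than 3 valid points remain or the fit is degenerate (points on a line).
double planeRmsResidual(const std::vector<Point3>& pts) {
    std::vector<Point3> valid;
    for (const auto& p : pts)
        if (std::isfinite(p.x) && std::isfinite(p.y) && std::isfinite(p.z))
            valid.push_back(p);
    const std::size_t n = valid.size();
    if (n < 3) return std::nan("");

    double mx = 0, my = 0, mz = 0;
    for (const auto& p : valid) { mx += p.x; my += p.y; mz += p.z; }
    mx /= static_cast<double>(n);
    my /= static_cast<double>(n);
    mz /= static_cast<double>(n);

    // Centered sums give the 2x2 normal equations for (a, b).
    double sxx = 0, sxy = 0, syy = 0, sxz = 0, syz = 0;
    for (const auto& p : valid) {
        const double dx = p.x - mx, dy = p.y - my, dz = p.z - mz;
        sxx += dx * dx; sxy += dx * dy; syy += dy * dy;
        sxz += dx * dz; syz += dy * dz;
    }
    const double det = sxx * syy - sxy * sxy;
    if (std::fabs(det) < 1e-12) return std::nan("");
    const double a = (sxz * syy - syz * sxy) / det;  // Cramer's rule
    const double b = (syz * sxx - sxz * sxy) / det;
    const double c = mz - a * mx - b * my;

    double sumSq = 0;
    for (const auto& p : valid) {
        const double r = p.z - (a * p.x + b * p.y + c);
        sumSq += r * r;
    }
    return std::sqrt(sumSq / static_cast<double>(n));
}
```

A flat wall should give a residual close to the sensor's depth noise at that distance; a wavy cloud shows up as a clearly larger value.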

Thank you very much!

I have one more question. How do I convert the output of `zed.retrieveMeasure(Point_cloud_image, sl::MEASURE::XYZ)` to `pcl::PointXYZ`?

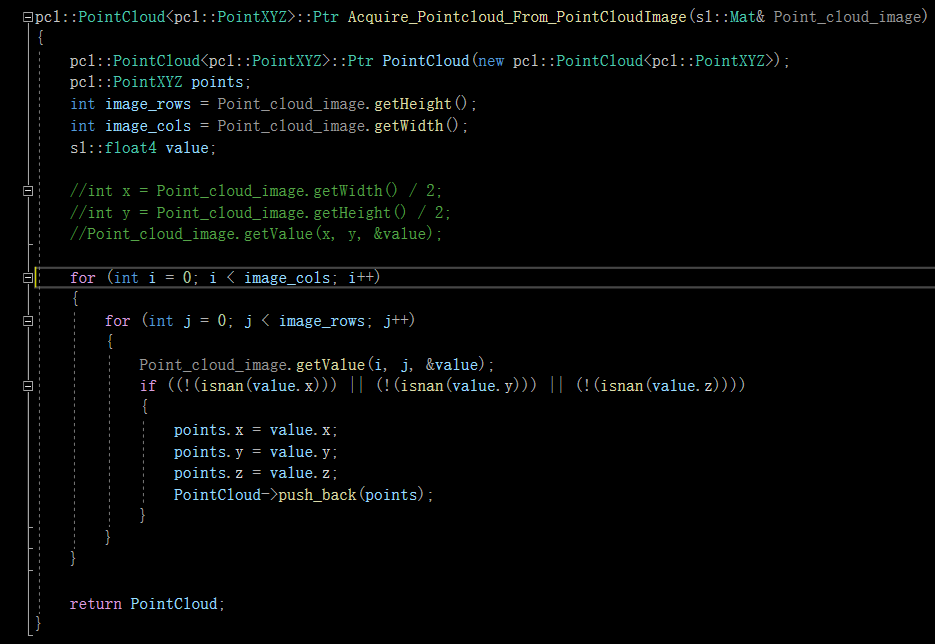

What I’m doing now is

But it doesn't work well.
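A common approach is to copy the XYZ buffer point by point and skip invalid pixels. The sketch below assumes that `sl::MEASURE::XYZ` stores four floats per pixel (X, Y, Z, unused) and that pixels without valid depth hold NaN or ±infinity. To keep it compilable without the SDKs, `Float4` and `PointXYZ` are hypothetical stand-ins for `sl::float4` and `pcl::PointXYZ`. In real code, `data` would come from the `sl::Mat` CPU pointer (for example `getPtr<sl::float4>()`) and `stepElems` from its row step (`getStep()`); please check the `sl::Mat` reference for your SDK version.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins so the sketch compiles without the SDKs:
// Float4 mimics one element of an XYZ measure (x, y, z, unused),
// PointXYZ mimics the x/y/z fields of pcl::PointXYZ.
struct Float4 { float x, y, z, w; };
struct PointXYZ { float x, y, z; };

// Copies an XYZ point-cloud buffer into a vector of points, skipping pixels
// without valid depth (NaN or +/-infinity). `stepElems` is the row pitch in
// elements, which can be larger than `width`, so rows are addressed with it.
std::vector<PointXYZ> toPointCloud(const Float4* data, std::size_t width,
                                   std::size_t height, std::size_t stepElems) {
    std::vector<PointXYZ> cloud;
    cloud.reserve(width * height);
    for (std::size_t v = 0; v < height; ++v) {
        const Float4* row = data + v * stepElems;
        for (std::size_t u = 0; u < width; ++u) {
            const Float4& p = row[u];
            if (std::isfinite(p.x) && std::isfinite(p.y) && std::isfinite(p.z)) {
                cloud.push_back({p.x, p.y, p.z});
            }
        }
    }
    return cloud;
}
```

Dropping invalid points gives an unorganized cloud. If you need the organized width × height layout instead, keep every pixel and set the PCL cloud's `is_dense` flag to false.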

This can help you:

Thanks. I will study it.