I have a model, best.pt, that detects traffic cones, and I want to use it together with the bird's eye viewer sample (bird_eye_viewer). My goal is to detect whether there are people inside the polygon formed by the positions of the cones. Thanks for your attention.
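A minimal sketch of the "person inside the cone polygon" check, assuming you already have the 3D positions of the cones and people from the SDK and project them onto the ground plane as `(x, z)` pairs (Y-up coordinate system, camera looking along -Z). The function names are hypothetical. Sorting the cones by angle around their centroid only gives a valid outline when the cones form a convex shape.

```python
import math
from typing import List, Tuple

# (x, z) position on the ground plane, in meters (assumes a Y-up coordinate system).
Point = Tuple[float, float]


def order_around_centroid(points: List[Point]) -> List[Point]:
    """Sort cone positions by angle around their centroid.

    Only produces a correct polygon outline when the cones form a convex shape.
    """
    cx = sum(p[0] for p in points) / len(points)
    cz = sum(p[1] for p in points) / len(points)
    return sorted(points, key=lambda p: math.atan2(p[1] - cz, p[0] - cx))


def point_in_polygon(pt: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: toggle 'inside' each time a ray from pt crosses an edge."""
    x, z = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, z1 = polygon[i]
        x2, z2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed.
        if (z1 > z) != (z2 > z):
            # x coordinate where this edge crosses that line (z2 != z1 here).
            x_cross = x1 + (z - z1) * (x2 - x1) / (z2 - z1)
            if x < x_cross:
                inside = not inside
    return inside


if __name__ == "__main__":
    # Four cones 2 to 4 m in front of the camera, given in arbitrary order.
    cones = order_around_centroid([(1.0, -4.0), (-1.0, -2.0), (-1.0, -4.0), (1.0, -2.0)])
    print(point_in_polygon((0.0, -3.0), cones))  # True: person between the cones
    print(point_in_polygon((3.0, -3.0), cones))  # False: person outside
```

In a real loop you would run `point_in_polygon` on the `(x, z)` of every tracked object whose label is "person".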

Hi @Shakir0905

Welcome to the Stereolabs community.

The ZED SDK cannot use custom models directly, but it can track objects detected by a custom inference engine.

Look at these examples to understand how to use your detector: https://github.com/stereolabs/zed-sdk/tree/master/object%20detection/custom%20detector
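In those samples, each 2D box from your own detector is wrapped in an `sl.CustomBoxObjectData` and the list is passed to `zed.ingest_custom_box_objects()`; the SDK then computes 3D positions and tracking. The box is given as four corner points, clockwise from the top-left corner. A minimal sketch of converting a YOLO-style center/width/height box into those corners (the helper name is hypothetical; the `sl` lines are shown as comments because they need a camera and `pyzed`):

```python
import numpy as np


def xywh_to_corners(cx: float, cy: float, w: float, h: float) -> np.ndarray:
    """Convert a center/width/height box (pixels) into four corner points.

    Order: top-left, top-right, bottom-right, bottom-left (clockwise from top-left).
    """
    x_min, x_max = cx - w / 2, cx + w / 2
    y_min, y_max = cy - h / 2, cy + h / 2
    return np.array(
        [[x_min, y_min], [x_max, y_min], [x_max, y_max], [x_min, y_max]],
        dtype=np.float32,
    )


# With pyzed available, each detection would then be wrapped roughly like this:
#   obj = sl.CustomBoxObjectData()
#   obj.bounding_box_2d = xywh_to_corners(cx, cy, w, h)
#   obj.label = class_id          # your detector's class index
#   obj.probability = confidence  # your detector's score
#   zed.ingest_custom_box_objects([obj])
```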

I don't see what I should do.

I see detect.py.

Do I have to train my model with this script, and will I then get a trained model file that I can integrate with the ZED?

Hi @Shakir0905

If your model was trained with YOLOv5 or YOLOv8, you can use our samples directly by following the instructions in the README (here for YOLOv8, for instance).

You can explore detector.py to get familiar with the code and workflow, and we'll be happy to help if you have any questions about it.

Dear Development Team,

I have encountered a couple of issues with the Bird View feature in our project and would like to discuss potential solutions.

Firstly, I have noticed that the scaling within the Bird View does not accurately represent real-world distances between objects. Could you please clarify the scaling parameters currently implemented? There may be a need for additional calibration or adjustment of the projection parameters to ensure accurate representation.

Secondly, the quality of the 3D imagery is suboptimal and does not meet the expected level of detail. Could you provide information on the current rendering settings? The issue may be related to low-resolution textures or limitations in the post-processing algorithms used.

I would appreciate technical details on the current configuration and possible ways to programmatically optimize these aspects. If necessary, I am willing to participate in testing proposed solutions to expedite the resolution of these issues.

Moreover, resolving these matters promptly is of the essence as we are preparing for an upcoming exhibition. It is crucial that we present our project in the best light, and these graphical inaccuracies could significantly undermine the experience we intend to deliver.

I look forward to your swift response and proposed action plan.

Best regards,

Shakir

Please help me. Can you ask someone who can solve my issue?

Hi @Shakir0905,

First, please note that we read every message and answer as soon as we can.

About your issues:

The scaling problem is strange; I just tested the sample on my side without any issue. Does it only happen when you use your custom model?

For the point cloud accuracy, please try another depth mode; for example, NEURAL should improve the accuracy. You can also increase the camera resolution in the InitParameters.

Yes, it happens when I use my model. Please tell me what I should change in the code to zoom in or to change the distance between points.

How can I use another depth mode (NEURAL) and another resolution in my project?


To learn how to use NEURAL and another resolution, please take a look at tutorial 2: image capture and tutorial 3: depth sensing.

Basically, you have to set the values I gave you in my previous message in your InitParameters variable.
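A minimal sketch of those InitParameters changes with the ZED Python API (`pyzed.sl`). HD1080 is only an example value; pick a resolution your camera model and GPU support. This is a configuration fragment, it needs a connected camera to run.

```python
import pyzed.sl as sl

init_params = sl.InitParameters()
init_params.depth_mode = sl.DEPTH_MODE.NEURAL         # learning-based depth mode, usually more accurate
init_params.camera_resolution = sl.RESOLUTION.HD1080  # example value, higher than HD720
init_params.coordinate_units = sl.UNIT.METER          # report positions in meters

zed = sl.Camera()
status = zed.open(init_params)
if status != sl.ERROR_CODE.SUCCESS:
    raise RuntimeError(f"Failed to open the ZED camera: {status}")
```

In detector.py, an InitParameters object is already created before the camera is opened, so it is simpler to change the existing assignments there than to create a second one.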

Can you try moving the cones and see if they move accordingly in the top-down view?

In theory, this view is relative to the camera, and the positions come from the detected depth (which seems to be correct).

Have you changed anything in the code?

They are drawn here in the code. You can try tracking down the scale issue from there (it leads to this method).
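To see how a scale problem can come from the drawing code alone, here is a hypothetical mapping from camera-frame positions to bird's-eye pixels. It is not the sample's code; it assumes a Y-up, right-handed frame where the camera looks along -Z, and a single `pixels_per_meter` factor. If that factor (or the range it is derived from) differs between two viewers, the same metric positions will look closer together or further apart.

```python
from typing import Tuple


def world_to_birdseye(x: float, z: float, width: int, height: int,
                      pixels_per_meter: float) -> Tuple[int, int]:
    """Map a ground-plane position (meters) to pixel coordinates in a top-down view.

    Assumes a Y-up, right-handed frame where the camera looks along -Z,
    so the distance in front of the camera is -z. The camera sits at the
    bottom-center of the image.
    """
    forward = -z
    u = width / 2 + x * pixels_per_meter           # lateral offset -> image columns
    v = (height - 1) - forward * pixels_per_meter  # farther away -> higher up (smaller row)
    return round(u), round(v)


if __name__ == "__main__":
    # An object 2 m ahead, 1 m to the right, in a 400x600 view.
    print(world_to_birdseye(1.0, -2.0, 400, 600, 50.0))   # (250, 499)
    print(world_to_birdseye(1.0, -2.0, 400, 600, 100.0))  # (300, 399): "zoomed in"
```

Doubling `pixels_per_meter` doubles every on-screen offset from the camera, which is the knob to look for when the view seems too zoomed in or out.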

Can you try printing the camera position? It should be close to 0.

```python
print("pose cam :", cam_w_pose.get_translation().get())
```

Add this line under the cv2.imshow call in detector.py. You can also look at the objects' world positions and check whether they are consistent with the scale.
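A small helper for that comparison, assuming you copy the camera translation and each object's 3D position (for example from `obj.position`) into plain tuples in meters. It ignores the vertical axis (Y in a Y-up frame), so the result can be compared with a distance measured on the floor with a tape measure. The function names and the 5 cm tolerance are hypothetical.

```python
import math
from typing import Sequence


def camera_near_origin(translation: Sequence[float], tol: float = 0.05) -> bool:
    """True if the camera translation is within tol meters of (0, 0, 0)."""
    return math.sqrt(sum(c * c for c in translation)) <= tol


def ground_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """Horizontal distance between two 3D points, ignoring Y (Y-up frame assumed)."""
    return math.hypot(p[0] - q[0], p[2] - q[2])


if __name__ == "__main__":
    print(camera_near_origin((0.01, -0.02, 0.0)))               # True
    print(ground_distance((0.0, 0.0, 0.0), (3.0, 5.0, -4.0)))  # 5.0
```

If two cones placed 2 m apart on the floor report a `ground_distance` close to 2.0 while the viewer shows them much closer or further apart, the problem is in the drawing code, not the depth.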

Also, which sample are you using, v5 or v8?

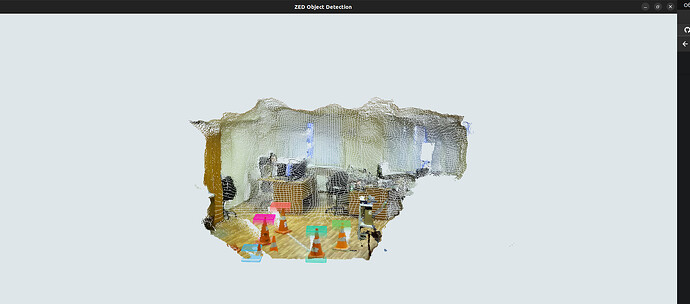

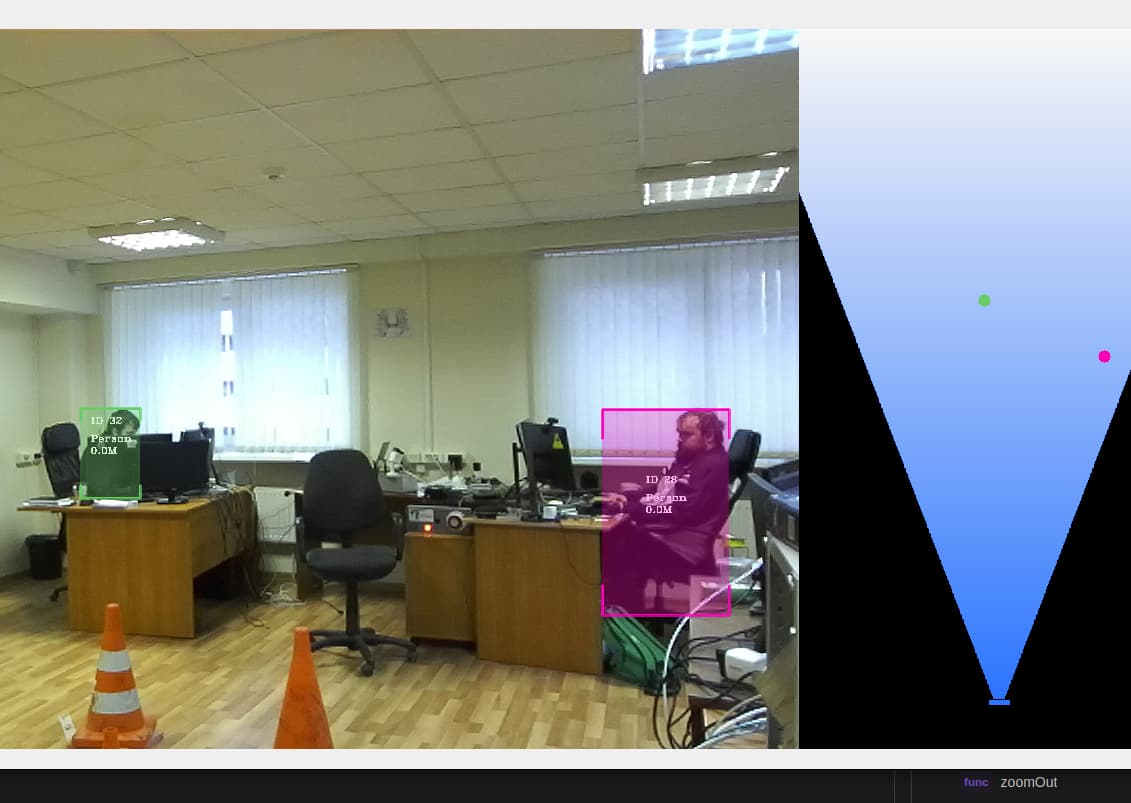

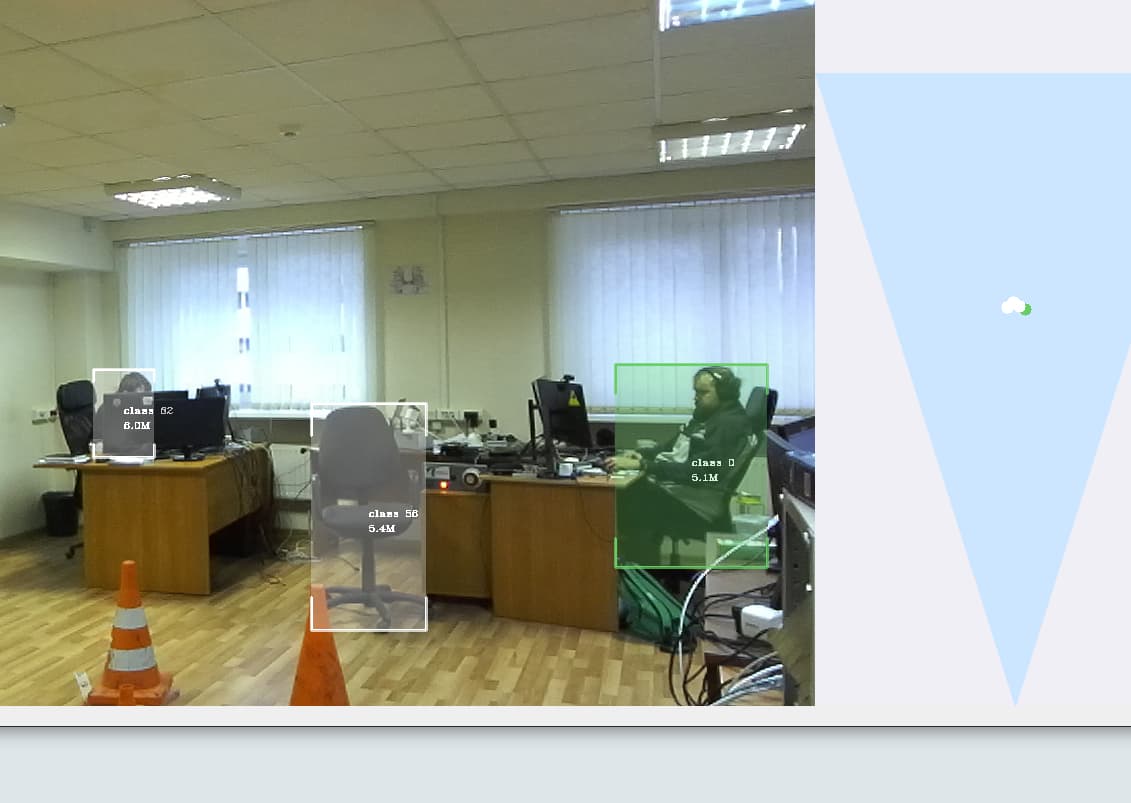

I'm encountering a visualization problem in two different scenarios when using the ZED SDK for object detection. When I run zed-sdk/object detection/birds eye viewer/python, the result looks great in terms of scale and tracking, providing a clear bird's-eye view.

However, when I switch to zed-sdk/object detection/custom detector/python/python ch_yolov8_new, the scales seem incorrect and do not match reality. How can I urgently fix this scaling issue in the second scenario?

If the distances you get are correct, the issue is likely in the viewer.

We'll take a look as soon as we can, but I invite you to compare the viewer methods of the custom detector with those of the bird's eye viewer object detection sample.

At a quick glance, they look very similar.
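One quick way to do that comparison is a unified diff of the two viewer files. A minimal sketch using Python's standard `difflib`; pass the paths of the viewer modules from the two samples in your own checkout (they are not spelled out here).

```python
import difflib
import sys
from pathlib import Path
from typing import List


def diff_files(path_a: str, path_b: str) -> List[str]:
    """Return unified-diff lines between two text files (empty list if identical)."""
    a = Path(path_a).read_text().splitlines(keepends=True)
    b = Path(path_b).read_text().splitlines(keepends=True)
    return list(difflib.unified_diff(a, b, fromfile=path_a, tofile=path_b))


if __name__ == "__main__":
    # Usage: python diff_viewers.py <birds_eye_viewer_file> <custom_detector_viewer_file>
    if len(sys.argv) == 3:
        sys.stdout.writelines(diff_files(sys.argv[1], sys.argv[2]))
```

Lines starting with `-` and `+` in the output point directly at constants (ranges, steps, window sizes) that differ between the two viewers.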

As an alternative, you could plot the horizontal positional data with any plotting library and check that the data you get is correct. The camera should be at position (0, 0, 0), since measure3D_reference_frame in the RuntimeParameters defaults to the CAMERA reference frame.
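A minimal sketch of that check with matplotlib, assuming you have collected the objects' `(x, y, z)` positions in meters in the camera frame with a Y-up coordinate system (so the ground plane is X/Z and the camera looks along -Z). The camera is drawn at the origin, and equal axis scaling keeps on-screen distances proportional to real ones. The function name and output file are hypothetical.

```python
from typing import Sequence, Tuple

import matplotlib
matplotlib.use("Agg")  # render to a file; no display needed
import matplotlib.pyplot as plt


def plot_top_down(positions: Sequence[Tuple[float, float, float]],
                  out_path: str = "top_down.png") -> str:
    """Scatter the X/Z coordinates of 3D positions, with the camera at (0, 0)."""
    xs = [p[0] for p in positions]
    forward = [-p[2] for p in positions]  # distance in front of the camera (-Z)
    fig, ax = plt.subplots()
    ax.scatter(xs, forward, label="objects")
    ax.scatter([0.0], [0.0], marker="^", color="red", label="camera")
    ax.set_xlabel("x (m)")
    ax.set_ylabel("distance in front of camera (m)")
    ax.set_aspect("equal")  # same scale on both axes
    ax.grid(True)
    ax.legend()
    fig.savefig(out_path)
    plt.close(fig)
    return out_path
```

If the points in this plot match the real layout of the cones while the bird's-eye viewer does not, the positions are fine and only the viewer's scaling needs fixing.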