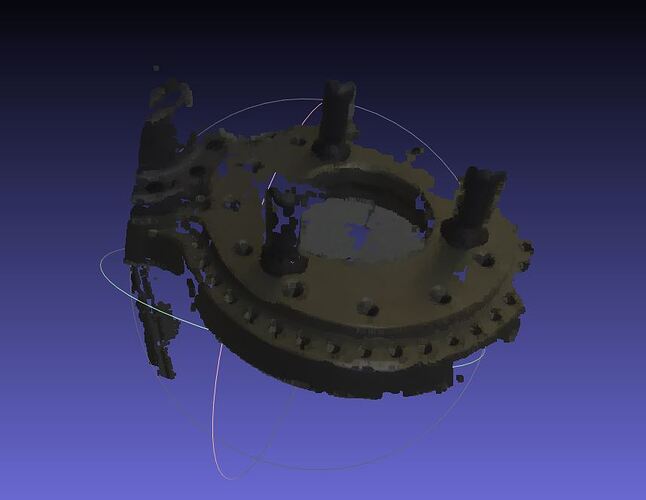

The point clouds above were acquired with the Depth Viewer (using the ZED 2i camera).

I’m going to use Python to acquire new point clouds, but I was wondering how to reduce noise and get better quality. The object I have to capture is a shiny metal object.

I was wondering how to tune parameters in order to get the best quality.

Should I try to use the neural depth mode?

Should I try to adjust the max distance?

ChatGPT suggested filtering the point cloud to reduce noise using the sklearn.cluster module.
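For reference, this is roughly the kind of filtering I understood from that suggestion: a minimal sketch that uses `DBSCAN` from `sklearn.cluster` to keep only the largest dense cluster and discard isolated points. The function name `remove_sparse_points`, the assumption that the cloud is an (N, 3) array in metres, and the `eps` / `min_samples` values are all my own placeholders and are not tuned for the ZED 2i or for a metal object.

```python
import numpy as np
from sklearn.cluster import DBSCAN


def remove_sparse_points(points, eps=0.02, min_samples=10):
    """Keep only the points that DBSCAN assigns to the largest cluster.

    points: (N, 3) array of XYZ coordinates, assumed to be in metres.
    eps, min_samples: illustrative values only, not tuned for any camera.
    """
    points = np.asarray(points, dtype=float)
    # Drop rows containing NaN or inf (invalid depth measurements).
    points = points[np.isfinite(points).all(axis=1)]
    if len(points) == 0:
        return points

    # DBSCAN labels sparse, isolated points as -1 (noise).
    labels = DBSCAN(eps=eps, min_samples=min_samples).fit_predict(points)
    clustered = labels[labels >= 0]
    if clustered.size == 0:
        # Every point was classified as noise.
        return points[:0]

    # Keep the cluster with the most members, assumed to be the object.
    largest = np.bincount(clustered).argmax()
    return points[labels == largest]
```

Keeping only the largest cluster assumes the object dominates the scene; if the background is larger than the object, the cloud would need to be cropped first.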

I’m currently using ULTRA depth mode. When I try to use NEURAL mode, I get an error (failed to optimize model). Should I try another PC with more computational power to see if it can run NEURAL?

I tried tuning other parameters such as confidence, texture confidence, depth stabilization, brightness, contrast, hue…

But I need some advice. Should I move the camera while depth stabilization is enabled, so that different frames are fused into a single, less noisy point cloud?
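The alternative I can think of is to keep the camera still and fuse several frames myself. Below is a minimal sketch of that idea, a per-pixel median over a stack of XYZ maps. Note that this is not the SDK's depth stabilization: it assumes a fixed camera pose, frames already converted to (H, W, 3) NumPy arrays with NaN or inf for invalid pixels, and the name `fuse_static_frames` and the `min_valid` threshold are hypothetical.

```python
import warnings

import numpy as np


def fuse_static_frames(frames, min_valid=3):
    """Per-pixel median of several XYZ maps taken from a fixed camera pose.

    frames: sequence of (H, W, 3) arrays; invalid pixels are NaN or inf.
    min_valid: pixels measured in fewer frames than this become NaN.
    """
    stack = np.stack([np.asarray(f, dtype=float) for f in frames])  # (T, H, W, 3)

    # A pixel counts as valid in a frame only if X, Y and Z are all finite.
    valid = np.isfinite(stack).all(axis=-1)  # (T, H, W)
    stack = np.where(valid[..., None], stack, np.nan)

    # The median ignores NaN entries; all-NaN pixels trigger a warning
    # and yield NaN, which is the result wanted here.
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", RuntimeWarning)
        fused = np.nanmedian(stack, axis=0)  # (H, W, 3)

    # Discard pixels that were seen too rarely to be trusted.
    fused[valid.sum(axis=0) < min_valid] = np.nan
    return fused
```

The median is used instead of the mean so that an occasional depth spike, which I expect on reflective metal, does not shift the fused value.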

Thank you in advance for your support.