Hi Team,

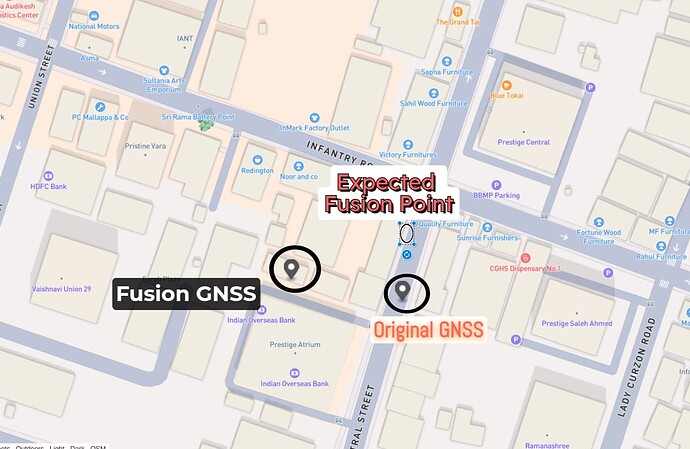

I am currently working on detecting the location of an object using the camera_to_geo method, but the GPS coordinates after fusion do not seem to match the actual location.

For context, I have mounted a ZED2i Camera and a ZED Box with GNSS on a car. Below is the code I am using to calculate the GPS point. Could you kindly help me identify if I’m missing anything or if there’s a specific step I should take to ensure the correct GPS output?

Code:

python

Copy

#!/usr/bin/env python3

import numpy as np

import argparse

import torch

import cv2

import pyzed.sl as sl

from ultralytics import YOLO

from ultralytics.engine.results import Results

from threading import Lock, Thread

from time import sleep

from typing import List

import exporter.KMLExporter as export

from display.generic_display import GenericDisplay

from exporter.gnss_saver import get_current_datetime, GNSSSaver

from gps_reader.gnss_replay import GNSSReplay

from gps_reader.gpsd_reader import GPSDReader

from required_config import constant_file

# Globals

lock = Lock()

run_signal = False

exit_signal = False

image_net: np.ndarray = None

detections: List[sl.CustomMaskObjectData] = None

sl_mats: List[sl.Mat] = None # We need it to keep the ownership of the sl.Mat

def xywh2abcd_(xywh: np.ndarray) -> np.ndarray:

output = np.zeros((4, 2))

# Center / Width / Height -> BBox corners coordinates

x_min = (xywh[0] - 0.5 * xywh[2])

x_max = (xywh[0] + 0.5 * xywh[2])

y_min = (xywh[1] - 0.5 * xywh[3])

y_max = (xywh[1] + 0.5 * xywh[3])

# A ------ B

# | Object |

# D ------ C

output[0][0] = x_min

output[0][1] = y_min

output[1][0] = x_max

output[1][1] = y_min

output[2][0] = x_max

output[2][1] = y_max

output[3][0] = x_min

output[3][1] = y_max

return output

def detections_to_custom_masks_(dets: Results) -> List[sl.CustomMaskObjectData]:

global sl_mats

output = []

sl_mats = []

for det in dets.cpu().numpy():

# Creating ingestable objects for the ZED SDK

obj = sl.CustomMaskObjectData()

# Bounding box

box = det.boxes

xywh = box.xywh[0]

abcd = xywh2abcd_(xywh)

obj.bounding_box_2d = abcd

obj.label = box.cls.item()

obj.probability = box.conf.item()

obj.is_grounded = False

# Mask

if det.masks is not None:

mask_bin = (det.masks.data[0] * 255).astype(np.uint8)

mask_bin = mask_bin[int(abcd[0][1]): int(abcd[2][1]),

int(abcd[0][0]): int(abcd[2][0])]

if not mask_bin.flags.c_contiguous:

mask_bin = np.ascontiguousarray(mask_bin)

# Mask as a sl mat

sl_mat = sl.Mat(width=mask_bin.shape[1],

height=mask_bin.shape[0],

mat_type=sl.MAT_TYPE.U8_C1,

memory_type=sl.MEM.CPU)

p_sl_as_cv = sl_mat.get_data()

np.copyto(p_sl_as_cv, mask_bin)

sl_mats.append(sl_mat)

obj.box_mask = sl_mat

else:

print("[Warning] No mask found in the prediction. Did you use a seg model?")

output.append(obj)

return output

def start_record(video_path, save_req, create_assets, create_notification):

input_type = sl.InputType()

if video_path is not None:

input_type.set_from_svo_file(video_path)

init_params = sl.InitParameters(input_t=input_type, svo_real_time_mode=False)

init_params.coordinate_units = sl.UNIT.METER

init_params.depth_mode = sl.DEPTH_MODE.ULTRA # QUALITY

init_params.coordinate_system = sl.COORDINATE_SYSTEM.RIGHT_HANDED_Y_UP

init_params.depth_maximum_distance = 50

# Initialize the camera

print("Initializing Camera...")

zed = sl.Camera()

status = zed.open(init_params)

if status != sl.ERROR_CODE.SUCCESS:

print(repr(status))

exit()

print("Camera Initialized")

#Enable SVO recording :

if video_path is None:

svo_path = "ZED_SN" + str(zed.get_camera_information().serial_number) + "_" + get_current_datetime() + ".svo"

returned_state = zed.enable_recording(sl.RecordingParameters(svo_path, sl.SVO_COMPRESSION_MODE.H264_LOSSLESS))

if returned_state != sl.ERROR_CODE.SUCCESS:

print("Recording ZED : " + repr(status) + ". Exit program.")

zed.close()

exit()

# Create Fusion object:

fusion = sl.Fusion()

init_fusion_param = sl.InitFusionParameters()

init_fusion_param.coordinate_units = sl.UNIT.METER

fusion_init_code = fusion.init(init_fusion_param)

if fusion_init_code != sl.FUSION_ERROR_CODE.SUCCESS:

print("[ZED][ERROR] Failed to initialize fusion :" + repr(fusion_init_code) + ". Exit program")

exit()

# Enable Positional Tracking

positional_tracking_parameters = sl.PositionalTrackingParameters()

# If the camera is static, uncomment the following line to have better performances and boxes sticked to the ground.

# positional_tracking_parameters.set_as_static = True

zed.enable_positional_tracking(positional_tracking_parameters)

# Enable Object Detection

obj_param = sl.ObjectDetectionParameters()

obj_param.detection_model = sl.OBJECT_DETECTION_MODEL.CUSTOM_BOX_OBJECTS

obj_param.enable_tracking = True

obj_param.enable_segmentation = True

zed.enable_object_detection(obj_param)

# Display

camera_infos = zed.get_camera_information()

camera_res = camera_infos.camera_configuration.resolution

# Create Fusion object:

fusion = sl.Fusion()

init_fusion_param = sl.InitFusionParameters()

init_fusion_param.coordinate_units = sl.UNIT.METER

fusion_init_code = fusion.init(init_fusion_param)

if fusion_init_code != sl.FUSION_ERROR_CODE.SUCCESS:

print("[ZED][ERROR] Failed to initialize fusion :" + repr(fusion_init_code) + ". Exit program")

exit()

# Enable odometry publishing:

configuration = sl.CommunicationParameters()

zed.start_publishing(configuration)

# Enable GNSS data producing:

if video_path is None:

gnss_reader = GPSDReader()

else:

gnss_reader = GNSSReplay(None,zed)

status_initialize = gnss_reader.initialize()

if status_initialize == -1:

gnss_reader.stop_thread()

zed.close()

exit()

# Subscribe to odometry:

uuid = sl.CameraIdentifier(zed.get_camera_information().serial_number)

fusion.subscribe(uuid, configuration, sl.Transform(0, 0, 0))

# Enable positional tracking for Fusion object:

positional_tracking_fusion_parameters = sl.PositionalTrackingFusionParameters()

positional_tracking_fusion_parameters.enable_GNSS_fusion = True

gnss_calibration_parameters = sl.GNSSCalibrationParameters()

gnss_calibration_parameters.target_yaw_uncertainty = 0.1

gnss_calibration_parameters.enable_translation_uncertainty_target = False

gnss_calibration_parameters.enable_reinitialization = True

gnss_calibration_parameters.gnss_vio_reinit_threshold = 5

positional_tracking_fusion_parameters.gnss_calibration_parameters = gnss_calibration_parameters

fusion.enable_positionnal_tracking(positional_tracking_fusion_parameters)

# Prepare runtime retrieval

runtime_params = sl.RuntimeParameters()

obj_runtime_param = sl.ObjectDetectionRuntimeParameters()

image_left_tmp = sl.Mat()

objects = sl.Objects()

model = YOLO('/home/user/Documents/models/Version8Small.pt')

zed_pose = sl.Pose()

gnss_data_saver = GNSSSaver(zed)

viewer = GenericDisplay()

viewer.init(zed.get_camera_information().camera_model)

resolution = zed.get_camera_information().camera_configuration.resolution

class_names = model.names

while True:

plot_frame = None

if zed.grab(runtime_params) == sl.ERROR_CODE.SUCCESS:

zed.get_position(zed_pose, sl.REFERENCE_FRAME.WORLD)

# -- Get the image

zed.retrieve_image(image_left_tmp, sl.VIEW.LEFT)

image_net = image_left_tmp.get_data()

img = cv2.cvtColor(image_net, cv2.COLOR_BGRA2RGB)

img = cv2.cvtColor(img, cv2.COLOR_RGB2BGRA)

det = model.track(img, save=False, retina_masks=True,device='cuda',persist=True, show=True, verbose=False)[0]

plot_frame = det.plot()

# ZED CustomMasks format

detections = detections_to_custom_masks_(det)

# -- Ingest detections

zed.ingest_custom_mask_objects(detections)

zed.retrieve_objects(objects, obj_runtime_param)

else:

break

# Get GNSS data:

if video_path is None:

status, input_gnss = gnss_reader.grab()

else:

status, input_gnss = gnss_reader.grab(zed_pose.timestamp.get_nanoseconds())

if status.value == sl.ERROR_CODE.SUCCESS.value:

# Display it on the Live Server

viewer.updateRawGeoPoseData(input_gnss)

# Publish GNSS data to Fusion

ingest_error = fusion.ingest_gnss_data(input_gnss)

if ingest_error == sl.FUSION_ERROR_CODE.SUCCESS:

print("Ingest error occurred when ingesting GNSSData: ", ingest_error)

latitude, longitude, altitude = input_gnss.get_coordinates(False)

coordinates = {

"latitude": latitude,

"longitude": longitude,

"altitude": altitude,

}

export.saveKMLData("raw_gnss.kml", coordinates)

# Save GNSS data into JSON:

gnss_data_saver.addGNSSData(input_gnss)

else:

print("Ingest error occurred when ingesting GNSSData: ", ingest_error)

# Process data and compute positions:

if fusion.process() == sl.FUSION_ERROR_CODE.SUCCESS:

if save_req and plot_frame is not None:

for obj in objects.object_list:

obj_translation = sl.Translation()

obj_translation.init_vector(obj.position[0], obj.position[1], obj.position[2])

obj_transform = sl.Transform()

obj_transform.set_translation(obj_translation)

obj_pose = sl.Pose()

obj_pose.init_transform(obj_transform)

object_geo_pose = sl.GeoPose()

fusion.camera_to_geo(obj_pose, object_geo_pose)

class_id = obj.raw_label # Class ID

class_name = class_names.get(int(obj.raw_label))

if class_id == 29:

print(object_geo_pose.latlng_coordinates.get_coordinates(False),input_gnss.get_coordinates(False))

cv2.imshow('Frame',plot_frame)

cv2.waitKey(0)

zed.close()

Any insights or suggestions would be greatly appreciated!

Thank you in advance!