Hello,

I was wondering what is the best way to have the whole fused point cloud in ECEF coordinate system, i.e., how to generate a fully geo-referenced point cloud at the end. Is it possible? A working example in python is highly appreciated.

Hello,

We don’t provide this feature natively in the SDK, but it is on the short-term roadmap.

Thank you for the reply and clarification. I am trying to implement this feature but I have the following issues. I would be grateful if you could help me address them:

1- After ingesting GNSS coordinates into the fusion process, when the following conditions are met:

fusion.process() == sl.FUSION_ERROR_CODE.SUCCESS

fusion.get_position(fused_position) == sl.POSITIONAL_TRACKING_STATE.OK

fusion.get_geo_pose(current_geopose) == sl.GNSS_CALIBRATION_STATE.CALIBRATED

assuming fused_position = sl.Pose(), current_geopose = sl.GeoPose(), and the runtime parameters used in grab are runtime_parameters = sl.RuntimeParameters(measure3D_reference_frame = sl.REFERENCE_FRAME.WORLD),

is it correct that in this case I should be able to apply the camera_to_geo function to go from camera coordinates expressed in sl.REFERENCE_FRAME.WORLD to WGS84 coordinates (latitude, longitude, and altitude)?

I wanted to check this, so I gave the fused_position of the camera to the camera_to_geo function. I expected that calling fusion.camera_to_geo(fused_position, recalculated_current_geopose) would give a recalculated_current_geopose almost identical to current_geopose, but it does not. Apparently the function only takes the translation part of the input into account and not the whole pose_data(). I also passed the geolocation of the camera computed by the fusion process (current_geopose) to the inverse function, geo_to_camera, expecting to get fused_position back; that did not match either.

Could you elaborate more on the fusion process and on how one can transform points in the camera coordinate system (sl.REFERENCE_FRAME.WORLD) to WGS84 using the fusion.camera_to_geo function?

2- I would like to apply the fusion.camera_to_geo function to a subset of the final fused point cloud. Then I can use the open3d.pipelines.registration.registration_icp function to calculate the transformation between the selected subset and its twin in the WGS84 system produced by the function (and then transformed to ECEF). Should I call the function within the grab loop, where I go frame by frame over the recorded SVO file, or can I do it at the very end (after the loop is finished)?

3- Do I need to pass points one by one to the fusion.camera_to_geo function? Is there any other way to pass arrays to the function?

Hello Mostafa,

Indeed, it is possible to get the fused position in ECEF or UTM. I will answer your questions one by one.

when the following conditions are met:

fusion.process() == sl.FUSION_ERROR_CODE.SUCCESS

fusion.get_position(fused_position) == sl.POSITIONAL_TRACKING_STATE.OK

fusion.get_geo_pose(current_geopose) == sl.GNSS_CALIBRATION_STATE.CALIBRATED

Yes, when the conditions above are met you should be able to start converting from the local camera WORLD frame to the geo-referenced one. I would just like to point out that, depending on how you initialize the fusion, the first conversions won’t be accurate. In particular, if you set enable_rolling_calibration to true, the first transformations used are rough and will be refined as new VIO / GNSS points become available. You can check the current VIO / GNSS calibration with the get_current_gnss_calibration_std method.

Could you elaborate more on the fusion process and on how one can transform points in the camera coordinate system (sl.REFERENCE_FRAME.WORLD) to WGS84 using the fusion.camera_to_geo function?

The functions camera_to_geo and, especially, geo_to_camera only take the translation part into account, because GNSS data does not provide orientation.

As for open3d, unfortunately I have never used it. Both of your approaches should probably work: converting the data as it comes, or converting everything at the end.

Arrays cannot be passed to the camera_to_geo function at the moment.
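Since camera_to_geo handles one pose at a time, the per-point WGS84 results can be collected in a list and converted to ECEF in a single pass afterwards. Below is a minimal sketch of the standard WGS84 geodetic-to-ECEF conversion in plain Python. The function names are illustrative and not part of the ZED SDK, and the sketch assumes latitude and longitude in degrees and an altitude in meters above the WGS84 ellipsoid (if your altitude is relative to the geoid, it would need a correction first).

```python
import math

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                    # semi-major axis, meters
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, alt_m):
    """Convert one WGS84 point (degrees, degrees, meters) to ECEF (x, y, z) in meters."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Prime vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + alt_m) * math.cos(lat) * math.cos(lon)
    y = (n + alt_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + alt_m) * math.sin(lat)
    return x, y, z


def geodetic_points_to_ecef(points):
    """Convert an iterable of (lat_deg, lon_deg, alt_m) tuples to a list of ECEF tuples."""
    return [geodetic_to_ecef(lat, lon, alt) for lat, lon, alt in points]
```

For example, `geodetic_points_to_ecef(collected_points)` could be called once after the grab loop, where `collected_points` is a hypothetical list of the (latitude, longitude, altitude) values read from each converted GeoPose.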

Regards,

Tanguy

Thank you, Tanguy!

My problem is that, once the mentioned conditions are met, we should be able to take the fused position of the camera itself (fused_position) as a very first step, pass it to the camera_to_geo function, and get the same geo-coordinates as current_geopose. Is that right? This test did not confirm the expected behavior of camera_to_geo. Is this a correct approach for the test? Could you please help me find a valid way to test the camera_to_geo function, or do you have any other suggestion for testing the transformation? I also checked the reverse function (geo_to_camera) in a similar way, and that test fails too: I passed current_geopose to the geo_to_camera function, but the output is different from fused_position.

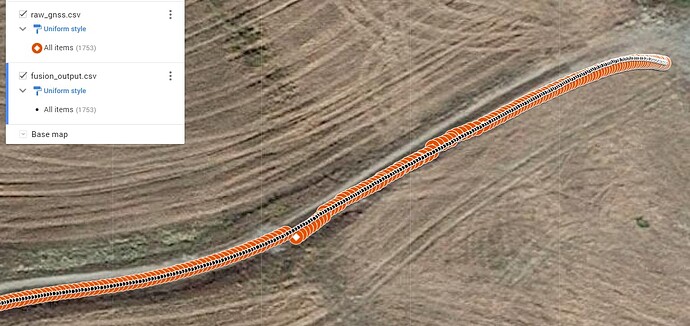

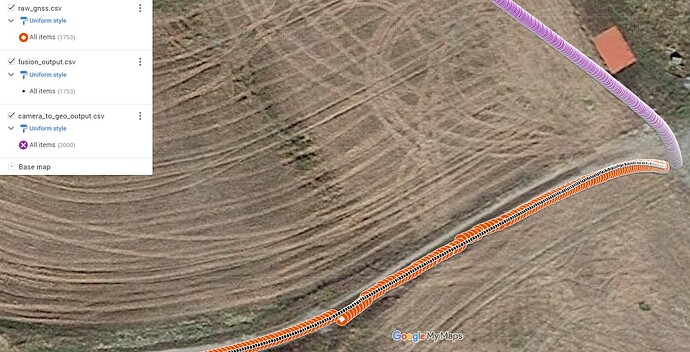

I should add that I think the horizontal accuracy of the fusion output looks good (the vertical accuracy is less reliable). As you can see, the geopose output of the fusion process performs better than the raw GNSS solution in this example.

Regards,

Mostafa

Hello Mostafa,

Depending on the initialization status, the answer could be yes or no. Could you print the current calibration uncertainty (with get_current_gnss_calibration_std)? If this calibration standard deviation is still changing, the VIO / GNSS calibration thread has not yet finished refining the calibration. This means that the estimated transformation between your camera and the GNSS is still being refined (asynchronously), so camera_to_geo and geo_to_camera may use slightly different transformations (once the calibration refinement is finished, the transformation used is supposed to be the same).
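One way to decide whether the standard deviation is "still moving" is to keep the last few values and check how much they vary. The sketch below is only an illustration in plain Python: the class name, window size, and tolerance are hypothetical, and it assumes you extract a single scalar from whatever get_current_gnss_calibration_std returns in your SDK version.

```python
from collections import deque


class StabilityMonitor:
    """Reports True once the last `window` values vary by at most `tolerance`."""

    def __init__(self, window=30, tolerance=1e-3):
        self.values = deque(maxlen=window)   # only the most recent values are kept
        self.tolerance = tolerance

    def update(self, value):
        """Record a new value and return True if the window is full and stable."""
        self.values.append(float(value))
        if len(self.values) < self.values.maxlen:
            return False                     # not enough samples yet
        return max(self.values) - min(self.values) <= self.tolerance
```

In the grab loop, `update` would be called once per frame with the current standard deviation, and the camera_to_geo comparison would only be run after it returns True.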

Even if the calibration refinement is finished, you may still observe small differences if you apply the following chain: fused_position -> camera_to_geo -> geo_to_camera. Since the geo-position does not carry an orientation (only a translation), you lose this information during the transformation, which leads to slightly different results.

Another thing that could perturb the transformation: did you apply an initial transformation to the camera?

I hope this helps,

Regards,

Tanguy

camera_to_geo_output.csv (503.8 KB)

fusion_output.csv (503.8 KB)

raw_gnss.csv (498.3 KB)

playback.py (13.1 KB)

raw_gnss.json (2.5 MB)

saved_output_for_conversion_issue.txt (1.7 MB)

Thanks, please have a look at the attached files.

However, I am not talking about small errors; the error in the conversion is huge (completely wrong direction), while the fused geopose shows good accuracy!

Do you confirm the approach I described for testing the conversion process? If yes, I would be grateful if you could confirm the expected functionality of camera_to_geo and geo_to_camera based on your reference dataset please.
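To put numbers on the size and direction of the mismatch, the two geo-positions can be compared as a local east/north/up offset. Here is a minimal sketch in plain Python using the WGS84 radii of curvature. The function names are illustrative and not part of the SDK, the inputs are assumed to be (latitude in degrees, longitude in degrees, altitude in meters), and the approximation is only meant for short distances away from the poles and the antimeridian.

```python
import math

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                    # semi-major axis, meters
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def local_offset_m(ref, other):
    """Approximate (east, north, up) offset in meters from `ref` to `other`."""
    lat0 = math.radians(ref[0])
    s2 = math.sin(lat0) ** 2
    # Radii of curvature at the reference latitude
    prime_vertical = WGS84_A / math.sqrt(1.0 - WGS84_E2 * s2)
    meridional = WGS84_A * (1.0 - WGS84_E2) / (1.0 - WGS84_E2 * s2) ** 1.5
    d_lat = math.radians(other[0] - ref[0])
    d_lon = math.radians(other[1] - ref[1])
    east = d_lon * (prime_vertical + ref[2]) * math.cos(lat0)
    north = d_lat * (meridional + ref[2])
    up = other[2] - ref[2]
    return east, north, up


def error_summary(ref, other):
    """Return (horizontal error in m, bearing in degrees from north, vertical error in m)."""
    east, north, up = local_offset_m(ref, other)
    horizontal = math.hypot(east, north)
    bearing = math.degrees(math.atan2(east, north)) % 360.0
    return horizontal, bearing, up
```

Calling `error_summary` with the fused geopose as `ref` and the camera_to_geo output as `other` for each frame would show whether the error is a constant offset, a rotation, or something that changes as the calibration is refined.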

-Mostafa