I am running a variation of the multi-camera sample where I am trying to get the body detection for each individual camera using sk::serialize(camera_raw_data[id]). Unless I am mistaken, the individual camera data does not seem to include local_orientation_per_joint. Is there a way to get that data for each camera?

Hi @haakonflaar

I believe that local_orientation_per_joint will only be computed if the fitting is enabled. Usually, we recommend disabling it on the senders, though, so that could be what causes the absence of data.

Hi @JPlou

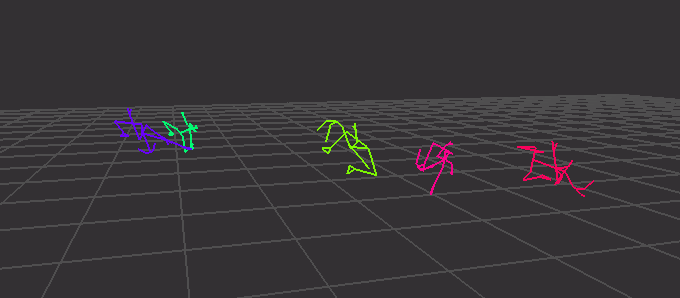

If I activate body fitting and tracking for all cameras I get really strange looking skeletons:

You know why?

Also, activating body fitting did not give me local_orientation_per_joint for the cameras…

I am also wondering: I have body 34 set up on all cameras (via the hub). However, when I check the raw data from each of the cameras, it only shows 18 keypoints, and the same is shown in the GLViewer. I saw the same issue when testing SDK 4.1. The cameras are supposed to run Body 34 but are seemingly running body 18. I am afraid it hurts my detection accuracy. How can I fix that?
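A quick way to confirm which format each camera is actually delivering is to count the keypoints and check whether the orientation array is populated. The sketch below is only an illustration: `BodySample` is a hypothetical stand-in struct, not the SDK's own body type, and it assumes that a populated body carries one local orientation per keypoint.

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one detected body; NOT the SDK's own type.
struct BodySample {
    std::vector<std::array<float, 3>> keypoints;                    // x, y, z per joint
    std::vector<std::array<float, 4>> local_orientation_per_joint;  // quaternion per joint
};

// Guess the body format from the number of keypoints received.
inline std::string guess_body_format(const BodySample& body) {
    switch (body.keypoints.size()) {
        case 18: return "BODY_18";
        case 34: return "BODY_34";
        case 38: return "BODY_38";
        default: return "UNKNOWN";
    }
}

// Assumption: valid orientation data means one entry per keypoint.
inline bool has_local_orientations(const BodySample& body) {
    return !body.keypoints.empty() &&
           body.local_orientation_per_joint.size() == body.keypoints.size();
}
```

Copying the keypoints and orientations of each camera's raw bodies into such a struct and logging both results per camera would show whether a sender configured for body 34 really delivers 18 keypoints, and whether the orientations are missing or just incomplete.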

Running some tests now, so would appreciate a fast response.

I can reproduce your issue when enabling BODY_34 on the senders. I’ll run it through the team to find a fix as soon as possible. The likely cause is that the BODY_34 data is not used by the fusion and is therefore not sent, but this case is not handled correctly.

In the meantime, using BODY_18 + fitting + tracking should work, since it seems you need all of it enabled on the sender’s side. BODY_34 is directly inferred from BODY_18 detection, so you will not lose accuracy.

Okay. Hope you get a fix for body 34. Getting body 18 data is not sufficient, I’ll need the body 34 data. I don’t think I was able to get body 38 local orientation data from individual cameras either with fitting and tracking enabled - does that work for you?

By the way, do you advise using body 34 or body 38 for the most accurate body tracking with a fused setup? What are the differences between the two, and why do you have both (e.g. is body fitting only available on body 34)?

Hi @haakonflaar

I was not clear enough in my previous message, but you should be able to get BODY_34 as output of the fusion, even if you use BODY_18 in the sender. You should just have to send BODY_18 and enable the fitting on the receiver, which should give you BODY_34.

We’re testing a fix for sending 34 right now.

I’m able to send body 38 correctly, so that should work as expected in 4.1.

As for the difference, we currently recommend the 18/34 workflow for the Fusion. It’s more stable than BODY_38 in this case.

The difference lies in the detection model. For body 18/34, only 18 points are detected on the people from the video feed. To fill in the 34-keypoint skeleton, the remaining keypoints are inferred from the detection. This model was made to be compatible with more avatar solutions. Body 38 actually tracks the 38 keypoints, which may be stabilized in the process, but all are detected rather than inferred (except when fitting is enabled, in which case points that are not detected are inferred).
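The split between detected and inferred keypoints described above can be written down as a small lookup. This is only a sketch of the explanation in this thread: `BodyFormat` and `KeypointBreakdown` are hypothetical names, not SDK types, and the BODY_38 row assumes fitting is disabled (with fitting enabled, the number of inferred points depends on what was not detected in a given frame).

```cpp
#include <cstddef>

// Hypothetical enum for illustration; not the SDK's own enum.
enum class BodyFormat { Body18, Body34, Body38 };

struct KeypointBreakdown {
    std::size_t total;     // keypoints in the output skeleton
    std::size_t detected;  // keypoints detected from the video feed
    std::size_t inferred;  // keypoints derived from the detected ones
};

inline KeypointBreakdown breakdown(BodyFormat format) {
    switch (format) {
        case BodyFormat::Body18: return {18, 18, 0};
        case BodyFormat::Body34: return {34, 18, 16};  // 34 - 18 = 16 inferred
        case BodyFormat::Body38: return {38, 38, 0};   // assumes fitting is off
    }
    return {0, 0, 0};  // unreachable for valid enum values
}
```

Under this reading, BODY_34 adds no extra detections compared to BODY_18, which matches the earlier point that choosing BODY_18 on the senders does not cost accuracy.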