Hello, I found that the point cloud captured with the depth sensing example looks better than the one produced by spatial mapping. Why is that?

Hi @zsj520

Can you please add pictures that illustrate what you mean?

They both seem correct.

The Spatial Mapping point cloud is created from the 3D mesh generated by the Spatial Mapping algorithm, and it normally differs from the live point cloud because of the algorithm's parameters.
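To see why a resolution parameter alone changes the result, here is a hypothetical C++ sketch that models only that effect: all points falling in the same cubic cell are merged into their centroid, so the output density is capped by the cell size no matter how dense the input was. The `voxelize` and `make_wall` functions and the wall data are illustrative assumptions, not the ZED SDK's meshing algorithm.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <tuple>
#include <vector>

// A 3D point in meters.
struct Point3 { float x, y, z; };

// Toy model (NOT the ZED SDK's mesher): every point inside the same cubic cell of
// side `resolution_m` is replaced by the cell centroid.
std::vector<Point3> voxelize(const std::vector<Point3>& cloud, float resolution_m) {
    assert(resolution_m > 0.f);
    using Key = std::tuple<long, long, long>;
    struct Acc { double x = 0, y = 0, z = 0; std::size_t n = 0; };  // running sums per cell
    std::map<Key, Acc> cells;
    for (const Point3& p : cloud) {
        Key key{static_cast<long>(std::floor(p.x / resolution_m)),
                static_cast<long>(std::floor(p.y / resolution_m)),
                static_cast<long>(std::floor(p.z / resolution_m))};
        Acc& a = cells[key];
        a.x += p.x; a.y += p.y; a.z += p.z;
        ++a.n;
    }
    std::vector<Point3> out;
    out.reserve(cells.size());
    for (const auto& cell : cells) {
        const Acc& a = cell.second;
        out.push_back({static_cast<float>(a.x / a.n),
                       static_cast<float>(a.y / a.n),
                       static_cast<float>(a.z / a.n)});
    }
    return out;
}

// Hypothetical "live" cloud: a flat 1 m x 1 m wall at z = 2 m, sampled every 2 mm.
std::vector<Point3> make_wall() {
    std::vector<Point3> cloud;
    for (int i = 0; i < 500; ++i)
        for (int j = 0; j < 500; ++j)
            cloud.push_back({i * 0.002f, j * 0.002f, 2.0f});
    return cloud;
}
```

With a 1 cm cell, the 250,000-point wall collapses to 10,000 points; with a 5 cm cell, to 400. The geometry is the same, but the representation is coarser, which is one way two "correct" point clouds of the same scene can look different.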

Hello, do you mean that depth sensing and spatial mapping use different algorithms?

That's indeed what I said.

Hello, if I want to obtain a high-quality fused point cloud, is modifying the depth sensing example my only option? However, the depth sensing example only captures a single-frame point cloud.

Are you using ZEDfu, or your own code based on the ZED SDK API?

I am using the ZED SDK API.

You can find the description of all the involved parameters in the online API documentation:

C++ → SpatialMappingParameters Class Reference | API Reference | Stereolabs

Python → SpatialMappingParameters Class Reference | API Reference | Stereolabs

and useful examples on GitHub:

Thank you for your answer. I have tuned all the spatial mapping parameters as well as I can, but the result is still not as good as the depth sensing one. Is there no way to solve this problem?

Please share the setup you are using.

The spatial mapping setup:

```cpp
#include <sl/Camera.hpp>
#include <algorithm>

using namespace sl;

// Inside main(), before zed.open(init_parameters):
InitParameters init_parameters;
init_parameters.depth_mode = DEPTH_MODE::NEURAL;
init_parameters.coordinate_units = UNIT::METER;
init_parameters.depth_stabilization = true;
init_parameters.coordinate_system = COORDINATE_SYSTEM::RIGHT_HANDED_Y_UP;
init_parameters.depth_maximum_distance = 8.;

SpatialMappingParameters spatial_mapping_parameters;
spatial_mapping_parameters.set(SpatialMappingParameters::MAPPING_RANGE::MEDIUM);
spatial_mapping_parameters.resolution_meter = 0.01;
spatial_mapping_parameters.use_chunk_only = true;
spatial_mapping_parameters.stability_counter = 4;

RuntimeParameters runtime_parameters;
runtime_parameters.confidence_threshold = 50;
runtime_parameters.texture_confidence_threshold = 100;

// camera_infos = zed.getCameraInformation(), after the camera is opened
auto resolution = camera_infos.camera_configuration.resolution;
float image_aspect_ratio = resolution.width / (1.f * resolution.height);
int requested_low_res_w = std::min(720, (int)resolution.width);
sl::Resolution display_resolution(requested_low_res_w, requested_low_res_w / image_aspect_ratio);
```

The depth sensing setup:

```cpp
InitParameters init_parameters;
init_parameters.depth_mode = DEPTH_MODE::NEURAL;
init_parameters.coordinate_system = COORDINATE_SYSTEM::RIGHT_HANDED_Y_UP; // OpenGL's coordinate system is right-handed
init_parameters.sdk_verbose = 1;
auto mask_path = parseArgs(argc, argv, init_parameters); // argument-parsing helper from the depth sensing sample

RuntimeParameters runParameters;
runParameters.confidence_threshold = 50;
runParameters.texture_confidence_threshold = 100;
```
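Both setups use the same `confidence_threshold` (50) and `texture_confidence_threshold` (100), so the per-frame confidence filtering is configured identically and the visible difference has to come from what happens after it. As a rough model of the documented behavior (each depth pixel carries a confidence value of up to 100, where higher means less confident, and pixels above the threshold are rejected), here is a hypothetical C++ sketch. The function names, the use of NaN as the "invalid" marker, and keeping a pixel whose confidence equals the threshold are assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Toy model of a confidence threshold: a pixel is kept only if its confidence value
// does not exceed `threshold`; rejected pixels are marked invalid with NaN.
std::vector<float> filter_depth(const std::vector<float>& depth_m,
                                const std::vector<float>& confidence,
                                float threshold) {
    assert(depth_m.size() == confidence.size());
    std::vector<float> out(depth_m.size());
    for (std::size_t i = 0; i < depth_m.size(); ++i) {
        out[i] = confidence[i] <= threshold ? depth_m[i]
                                            : std::numeric_limits<float>::quiet_NaN();
    }
    return out;
}

// Number of pixels that survived filtering.
std::size_t count_valid(const std::vector<float>& depth_m) {
    std::size_t n = 0;
    for (float d : depth_m)
        if (!std::isnan(d)) ++n;
    return n;
}
```

Lowering the threshold discards more uncertain pixels (for example, on object edges or low-texture areas); a threshold of 100 keeps every pixel, which is why `texture_confidence_threshold = 100` effectively disables that second filter in this model.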

Thank you very much for your answer!

These parameters are suitable for obtaining a high-quality spatial mapping result.

Why do you say it’s not good?

Please remember that Spatial Mapping fuses the depth information from multiple frames to obtain a filtered 3D point cloud.

So the two point clouds cannot be exactly the same.
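The fusion step is what a single-frame capture lacks. The hypothetical C++ sketch below is a deliberately simplified stand-in, not the SDK's algorithm: each cell of side `resolution_m` accumulates observations across frames and is emitted as their mean only after it has been seen in at least `stability_counter` frames, loosely mirroring the role the SDK documentation gives to `SpatialMappingParameters::stability_counter`. The `ToyFusion` class and its data layout are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <set>
#include <tuple>
#include <vector>

// A 3D point in meters.
struct Point3 { float x, y, z; };

// Toy multi-frame fusion, NOT the ZED SDK implementation.
class ToyFusion {
    using Key = std::tuple<long, long, long>;
    // Zero-initialized when first created by std::map::operator[].
    struct Cell {
        double sx, sy, sz;    // running sums of observed coordinates
        std::size_t n;        // number of observations
        int frames_seen;      // number of frames that hit this cell
    };

public:
    ToyFusion(float resolution_m, int stability_counter)
        : res_(resolution_m), stability_(stability_counter) {
        assert(resolution_m > 0.f);
    }

    // Accumulate one frame's point cloud into the map.
    void integrate(const std::vector<Point3>& frame) {
        std::set<Key> touched;  // count each cell at most once per frame
        for (const Point3& p : frame) {
            const Key k = key_of(p);
            Cell& c = cells_[k];
            c.sx += p.x; c.sy += p.y; c.sz += p.z;
            ++c.n;
            if (touched.insert(k).second) ++c.frames_seen;
        }
    }

    // Emit the mean position of every cell seen in at least `stability_counter` frames.
    std::vector<Point3> extract() const {
        std::vector<Point3> out;
        for (const auto& kv : cells_) {
            const Cell& c = kv.second;
            if (c.frames_seen >= stability_) {
                out.push_back({static_cast<float>(c.sx / c.n),
                               static_cast<float>(c.sy / c.n),
                               static_cast<float>(c.sz / c.n)});
            }
        }
        return out;
    }

private:
    Key key_of(const Point3& p) const {
        return Key{static_cast<long>(std::floor(p.x / res_)),
                   static_cast<long>(std::floor(p.y / res_)),
                   static_cast<long>(std::floor(p.z / res_))};
    }

    float res_;
    int stability_;
    std::map<Key, Cell> cells_;
};
```

With `stability_counter = 4` (the value in the spatial mapping setup above), a point seen in only one frame never reaches the output, and a stable point's small frame-to-frame jitter is averaged away. In this toy model the fused result is cleaner but also drops anything observed too briefly, which hints at why a fused map and a single live frame never match exactly.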

Okay, thank you for your answer. I'll keep that in mind.

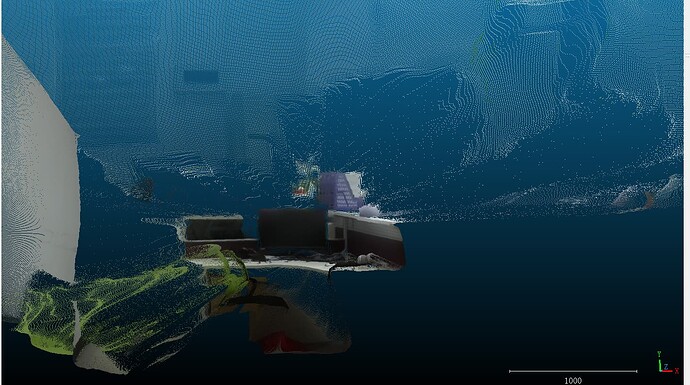

Hello, I want to achieve the same result as the one shown on the official website. How should I set the parameters?

This view is obtained by using the maximum quality available, with a short depth range.
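One general reason a short depth range helps comes from standard stereo geometry, not from anything specific to the ZED SDK: depth is `Z = f * B / d` for focal length `f` (pixels), baseline `B` (meters) and disparity `d` (pixels), so a disparity error `sigma_d` turns into a depth error of roughly `Z^2 * sigma_d / (f * B)`, which grows quadratically with distance. The sketch below computes that first-order estimate and its inverse; the function names and any numeric inputs are illustrative assumptions, not ZED calibration data.

```cpp
#include <cassert>
#include <cmath>

// First-order stereo depth error: sigma_Z ~= Z^2 * sigma_d / (f * B).
double depth_error_m(double depth_m, double focal_px, double baseline_m, double sigma_d_px) {
    assert(focal_px > 0.0 && baseline_m > 0.0);
    return depth_m * depth_m * sigma_d_px / (focal_px * baseline_m);
}

// Inverse of the above: the largest depth whose estimated error stays within tolerance_m.
double max_depth_for_error(double tolerance_m, double focal_px, double baseline_m,
                           double sigma_d_px) {
    assert(sigma_d_px > 0.0);
    return std::sqrt(tolerance_m * focal_px * baseline_m / sigma_d_px);
}
```

For example, with hypothetical inputs f = 700 px, B = 0.12 m and sigma_d = 0.1 px, the estimate is about 4.8 mm at 2 m and four times that (about 19 mm) at 4 m. Restricting the mapping to a short range keeps only the depth samples with the smallest error, which the fine resolution can then actually resolve.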