I am trying to unproject rectified RGB-D pairs from multiple cameras (4) into point clouds and then merge them. Here are the steps I am following:

- Calibrate the cameras using ZED360 and generate the configuration file (calib.json)

- Unproject each RGB-D pair to a point cloud in its own camera coordinate frame using the intrinsic matrix

- Transform each point cloud from the camera frame to the world frame using the pose field retrieved from calib.json. I convert the string of 16 float values into a 4x4 transformation matrix and then do a matrix multiplication

- Finally, I combine all the point clouds
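For reference, the unprojection step could look like the minimal sketch below. It assumes a pinhole model with intrinsics `fx`, `fy`, `cx`, `cy` taken from the rectified image, a depth map in metric units, and the image axis convention (x right, y down, z forward). The function name is illustrative and not part of any SDK. If the pose in calib.json is expressed in a different axis convention, the points would need an axis conversion first.

```python
import numpy as np


def unproject_depth(depth, fx, fy, cx, cy):
    """Back-project an H x W depth map into an N x 3 array of camera-frame points.

    Assumption: pinhole model, depth measured along the optical (z) axis.
    Pixels with non-finite or non-positive depth are dropped.
    """
    h, w = depth.shape
    # u holds the column index and v the row index of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = np.isfinite(depth) & (depth > 0)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

The same `valid` mask can be applied to the RGB image to keep colors aligned with the returned points.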

I am aware that the Fusion SDK and its sample code can do something similar to what I am trying to achieve, but I do not want to use the Fusion SDK for my application. My question is: am I missing anything in the steps above? My understanding of the pose retrieved from calib.json is as follows. Suppose ‘cam0’ is the world origin with position (0, H, 0). Then the pose field for ‘cam0’ defines the matrix T_world_from_cam0, the pose field for ‘cam1’ defines the matrix T_world_from_cam1, and so on. Is that correct?
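The pose handling and merge described above can be sketched as follows. This is only an illustration: it assumes the 16 values are stored in row-major order and that the resulting matrix maps camera-frame points to the world frame (the T_world_from_cam reading asked about here). All function names are hypothetical.

```python
import numpy as np


def pose_string_to_matrix(pose_str):
    """Parse a string of 16 floats into a 4x4 matrix (row-major order assumed)."""
    values = [float(v) for v in pose_str.replace(",", " ").split()]
    if len(values) != 16:
        raise ValueError(f"expected 16 values, got {len(values)}")
    return np.array(values).reshape(4, 4)


def transform_points(points, T_world_from_cam):
    """Apply a 4x4 rigid transform to an N x 3 array of points."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (T_world_from_cam @ homogeneous.T).T[:, :3]


def merge_clouds(clouds, poses):
    """Transform each camera-frame cloud into the world frame and stack them."""
    return np.vstack([transform_points(c, T) for c, T in zip(clouds, poses)])
```

If the merged clouds look mirrored or transposed, the row-major assumption or the direction of the transform is the first thing to check.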

Hi,

I see that you are trying to compute the fused point cloud from scratch, not even using each camera's own point cloud but starting from the RGB and depth images.

May I ask why?

To answer your question, you are almost right. The translation written in the JSON file is what you think it is: the translation of the camera in the world reference frame. However, that’s not exactly the case for the rotation.

You will notice a field called “override_gravity” set to false in the JSON file. That means the file does not contain the “full rotation” but only the rotation relative to the camera’s IMU rotational pose.

You need to get the camera’s rotation (using getPosition()) and combine both rotations before projecting the point cloud.
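As a rough illustration, combining the two rotations could look like the sketch below. It takes the rotation from getPosition() as a plain 3x3 NumPy array `R_imu` and the 4x4 matrix parsed from the JSON file as `T_file`. The multiplication order is an assumption that this thread does not confirm, so the hypothetical `imu_first` flag lets you try both orders against your data. The translation is left unchanged, since it is already expressed in the world frame.

```python
import numpy as np


def compose_world_pose(T_file, R_imu, imu_first=True):
    """Return a copy of T_file whose rotation is combined with R_imu.

    T_file: 4x4 pose parsed from the calibration file.
    R_imu:  3x3 rotation obtained from the camera (e.g. via getPosition()).
    imu_first: ASSUMPTION. True applies R_imu on the left of the file
               rotation; False applies it on the right.
    """
    T = np.array(T_file, dtype=float, copy=True)
    R_file = T[:3, :3].copy()
    T[:3, :3] = R_imu @ R_file if imu_first else R_file @ R_imu
    return T
```

A quick sanity check is to verify that the floor plane of each transformed cloud ends up horizontal in the world frame.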

Right now, there is no option in ZED 360 to export the full rotation, but that’s something we can add in the future if necessary.

Hi Benjamin,

Thank you for your reply! Yes, you are correct. I am using the RGB and depth images and creating the point cloud manually. The reason is quick prototyping and offline development, i.e. working with saved depth and RGB images in third-party software such as Open3D without relying on the ZED SDK.

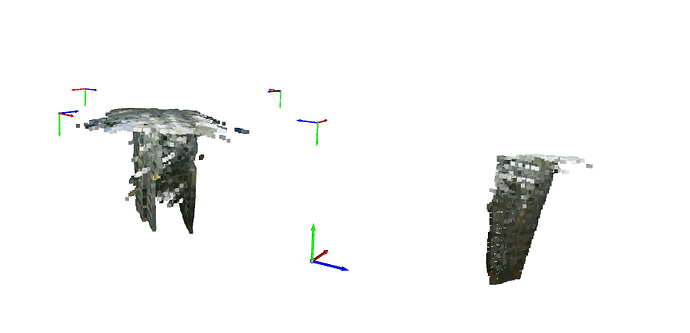

Your suggestion worked for three of my cameras, and their point clouds are placed correctly in the WORLD frame. However, one camera (which was also set as the world origin in ZED360) is mounted upside down, and the projection for this camera is incorrect. I expected getPosition to return the correct rotation (accounting for the inverted camera), but that does not seem to be the case. I have attached an image for reference. In the image, the camera reference frames can be seen at the four corners. The point clouds from three cameras are projected correctly in the middle, while the point cloud from the fourth camera, the one flipped upside down, is projected incorrectly.

I don’t think this camera is handled any differently in the fusion; I will double-check.

Can you confirm you are reading the values from the JSON file using the SDK API (readFusionConfigurationFile, see Fusion Module | API Reference | Stereolabs)?

I am reading the calibration JSON file using the standard Python json package and not through the Fusion API. It may be that the camera has moved a little since the last calibration. I will redo the calibration and hopefully it will just work. Thank you.