Hello, I would like to ask some questions about setting up calibration and video recording with three ZED 2i cameras.

My use case: I want to place three ZED 2i cameras around a person and capture a point cloud of the whole body. To do so, I need to 1) synchronize time (or frames), 2) calibrate the cameras (know their relative positions/orientations), and 3) record video (at least 1080p).

I have a PC with an RTX 3080 GPU and an AMD Ryzen 9 5900 12-core CPU, as well as three Jetson Xavier NX boards. What is the most suitable setup for me?

- Connect all cameras to my PC. I think this is the most convenient setup because 1) calibration can be done locally and 2) I don't need to sync time manually, since all cameras will record using the PC's clock (is that true?). However, I am not sure whether my PC can record three 1080p videos at the same time.

- Connect each camera to a Jetson, then connect all Jetsons and the PC to a router via Ethernet. This setup ensures I can record video at 1080p (or even 2K). However, calibration failed with this setup. Moreover, I am not sure how to accurately sync time or frames across different devices. As I understand it, I might only need to sync each Jetson's clock with my PC, right?

- Connect two cameras to the PC and one to a Jetson. This is a compromise, but I am not sure whether I can calibrate all three cameras with this setup, since it mixes local and network cameras.
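For the Jetson-based options, frames are timestamped on different machines, so Jetson timestamps have to be mapped onto the PC's clock. In practice a time-sync daemon such as chrony (NTP) or linuxptp (PTP) keeps the clocks aligned; the minimal sketch below only illustrates the NTP-style offset arithmetic behind that, assuming each Jetson stamps frames with its own system clock. `Exchange`, `offset_and_delay`, `best_offset` and `jetson_to_pc_time` are hypothetical helpers, not ZED SDK functions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Exchange:
    """One request/reply round trip between the PC and a Jetson (times in seconds)."""
    t0: float  # PC sends the request (PC clock)
    t1: float  # Jetson receives the request (Jetson clock)
    t2: float  # Jetson sends the reply (Jetson clock)
    t3: float  # PC receives the reply (PC clock)


def offset_and_delay(e: Exchange) -> Tuple[float, float]:
    """NTP-style estimate of (Jetson clock - PC clock) and of the network round-trip delay."""
    offset = ((e.t1 - e.t0) + (e.t2 - e.t3)) / 2.0
    delay = (e.t3 - e.t0) - (e.t2 - e.t1)
    return offset, delay


def best_offset(exchanges: List[Exchange]) -> float:
    """Keep the offset from the exchange with the smallest delay (least affected by queuing)."""
    offset, _ = min((offset_and_delay(e) for e in exchanges), key=lambda od: od[1])
    return offset


def jetson_to_pc_time(jetson_ts: float, offset: float) -> float:
    """Map a timestamp taken on the Jetson's clock onto the PC's clock."""
    return jetson_ts - offset
```

For example, if the Jetson clock runs 0.5 s ahead and each network hop takes 1 ms, an exchange recorded as `Exchange(10.000, 10.501, 10.5012, 10.0022)` gives an offset of about 0.5 s and a delay of about 2 ms. The true offset lies within half the round-trip delay of the estimate, which is why the lowest-delay exchange is kept.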

Could you give me some recommendations on the setup? Thanks a lot!

Hi,

Thanks for reaching out to us.

As you said, connecting the cameras to the same PC will make synchronization easier, since the cameras will share the same clock.
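With all cameras on one PC, frames can be grouped across cameras by comparing their timestamps directly. A minimal sketch, assuming each camera's frame timestamps (in nanoseconds, from the host clock) have already been collected into sorted lists, for instance via `get_timestamp(sl.TIME_REFERENCE.IMAGE)` in the Python API; the helper names and the tolerance are illustrative assumptions.

```python
from bisect import bisect_left
from typing import List, Tuple


def nearest_index(sorted_ts: List[int], t: int) -> int:
    """Index of the timestamp in a sorted, non-empty list that is closest to t."""
    i = bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    # choose between the neighbours on either side of the insertion point
    return i if sorted_ts[i] - t < t - sorted_ts[i - 1] else i - 1


def match_frames(ref: List[int], others: List[List[int]], tol_ns: int) -> List[Tuple[int, ...]]:
    """Group frames across cameras by timestamp.

    ref    -- sorted frame timestamps (ns) of the reference camera
    others -- sorted frame timestamps (ns) of every other camera
    Returns (ref_index, other_index_1, ...) for each reference frame whose
    nearest partner in every other camera lies within tol_ns.
    """
    if not ref or any(not ts for ts in others):
        return []
    matches = []
    for i, t in enumerate(ref):
        picks = [nearest_index(ts, t) for ts in others]
        if all(abs(ts[j] - t) <= tol_ns for ts, j in zip(others, picks)):
            matches.append((i, *picks))
    return matches
```

A tolerance of about half a frame period (roughly 16 ms at 30 fps) is one reasonable starting point. Note that a frame from another camera can be paired with more than one reference frame here; a stricter one-to-one matching may be preferable if that matters.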

We have actually just released a new module in our SDK called “Fusion”, which should be perfect for you.

This module allows you to fuse data from multiple cameras. At first, only body tracking data was supported, but we very recently added the spatial mapping feature as well, meaning you can now fuse point clouds too.

There should be a C++ sample in your ZED SDK folder, at `ZED SDK\samples\spatial mapping\multi camera\cpp`.

But first, you need to calibrate the cameras in order to know their relative positions.

For that, you can use our tool called “ZED 360”. This tool will help you generate a calibration file that is required by our Fusion module.

Please find the guide for ZED 360 here: https://www.stereolabs.com/docs/fusion/overview/
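Once the calibration gives each camera's pose in a common world frame, the core geometric step of combining point clouds is to move every camera's points into that frame and concatenate them. The sketch below shows only that step in plain Python, assuming each pose is available as a 3×3 rotation matrix `R` and a translation `t` mapping camera coordinates to world coordinates; it is not the Fusion module's API or implementation, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


def camera_to_world(points: List[Point], R: Matrix3, t: Sequence[float]) -> List[Point]:
    """Apply p_world = R @ p_cam + t to every point (R, t: camera pose in the world frame)."""
    return [
        tuple(sum(R[r][k] * p[k] for k in range(3)) + t[r] for r in range(3))
        for p in points
    ]


def fuse_clouds(clouds: List[Tuple[List[Point], Matrix3, Sequence[float]]]) -> List[Point]:
    """Concatenate per-camera clouds after moving each into the shared world frame."""
    fused: List[Point] = []
    for points, R, t in clouds:
        fused.extend(camera_to_world(points, R, t))
    return fused
```

For example, a point 2 m in front of a camera at the world origin stays at `(0, 0, 2)`, while the same point seen by a camera placed at `(1, 0, 0)` and turned 180° about Y lands at `(1, 0, -2)`. A practical pipeline would usually also filter or downsample the overlapping regions.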

Best regards,

Stereolabs Support

Thank you for your reply. I have finished setting up all three cameras on a single PC. I can still record 2K video, although some frames are dropped.

I have also finished the calibration using ZED360, but I have several questions about the results. My calibration file is attached below.
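One way to quantify the dropped frames is to look for gaps in each camera's frame timestamps. A minimal sketch, assuming sorted timestamps in nanoseconds and a known nominal frame rate (15 fps is assumed here for the 2K mode); `count_dropped_frames` is a hypothetical helper.

```python
from typing import Sequence


def count_dropped_frames(timestamps_ns: Sequence[int], fps: float) -> int:
    """Estimate how many frames are missing from a sorted timestamp sequence.

    A gap of roughly k nominal frame periods between two recorded frames
    means k - 1 frames were not recorded in between.
    """
    period_ns = 1e9 / fps
    dropped = 0
    for prev, cur in zip(timestamps_ns, timestamps_ns[1:]):
        periods = round((cur - prev) / period_ns)
        dropped += max(0, periods - 1)
    return dropped
```

Running this per camera shows whether the drops are spread evenly or concentrated on one camera, which can help narrow down where the bottleneck is.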

(Calibration file attachment, 1.6 KB)

- It seems that the floor below camera “32482761” is used as the origin. Is the Y translation negative because the ZED uses a “right-handed with the positive Y-axis pointing down, X-axis pointing right and Z-axis pointing away from the camera” coordinate system (Coordinate Frames | Stereolabs)? Also, can I choose the main camera and the coordinate system during calibration?

- Are the translation and orientation in the configuration file the camera extrinsics? The documentation describes them as “the camera’s world data” (Fusion | Stereolabs), but I am still confused. More specifically, do they give “the position/orientation of the world with respect to the camera” or “the position/orientation of the camera with respect to the world”? The former is the extrinsic, but I am not sure which one is stored in the configuration file.

  If it is “the position/rotation of the camera in the world’s coordinate system”, then as I understand it, it is not actually the extrinsic.

  Typically, in the computer vision community, the camera extrinsic is defined as “the position/orientation of the world with respect to the camera”.
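For the first question, if the calibration tool does not let you pick the origin camera, the stored poses can be re-expressed afterwards so that a chosen camera becomes the origin, and optionally converted to a Y-up convention. A minimal sketch, assuming each pose is a camera-to-world rotation matrix and translation (Stereolabs' reply below describes the stored data as the camera's position/orientation in world space); all helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix3 = List[List[float]]
Vector3 = List[float]


def transpose(M: Sequence[Sequence[float]]) -> Matrix3:
    return [[M[c][r] for c in range(3)] for r in range(3)]


def matmul(A: Sequence[Sequence[float]], B: Sequence[Sequence[float]]) -> Matrix3:
    return [[sum(A[r][k] * B[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


def matvec(A: Sequence[Sequence[float]], v: Sequence[float]) -> Vector3:
    return [sum(A[r][k] * v[k] for k in range(3)) for r in range(3)]


def relative_to(ref_R: Sequence[Sequence[float]], ref_t: Sequence[float],
                R: Sequence[Sequence[float]], t: Sequence[float]) -> Tuple[Matrix3, Vector3]:
    """Pose of a camera in the reference camera's frame.

    Both inputs are camera-to-world poses:
    R_rel = ref_R^T R,  t_rel = ref_R^T (t - ref_t).
    """
    ref_R_T = transpose(ref_R)
    return matmul(ref_R_T, R), matvec(ref_R_T, [t[i] - ref_t[i] for i in range(3)])


# 180-degree rotation about X: turns "Y down, Z forward" into "Y up, Z backward"
FLIP_YZ = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]


def to_y_up(R: Sequence[Sequence[float]], t: Sequence[float]) -> Tuple[Matrix3, Vector3]:
    """Re-express a camera-to-world pose when both frames switch to the Y-up convention."""
    return matmul(matmul(FLIP_YZ, R), FLIP_YZ), matvec(FLIP_YZ, t)
```

Applying `relative_to` with the chosen camera's pose as reference to every camera (the reference itself becomes the identity pose) yields all poses in that camera's frame. `FLIP_YZ` is a proper rotation (determinant +1), so the converted frame stays right-handed.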

Sorry, you are right,

the data stored in the file are not the camera extrinsic, but the position/orientation of the camera in the world space.
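Since the file stores camera-to-world poses, the conventional extrinsic (world-to-camera) is the inverse transform. A minimal sketch, assuming the rotation is available as a 3×3 matrix; if the file stores it as a 3-element vector, it would first need converting to a matrix, and which rotation representation ZED360 writes is not confirmed here. The helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def extrinsic_from_pose(R_wc: Sequence[Sequence[float]],
                        t_wc: Sequence[float]) -> Tuple[List[List[float]], List[float]]:
    """Invert a camera-to-world pose into the world-to-camera extrinsic.

    Pose:      x_world = R_wc @ x_cam + t_wc
    Extrinsic: x_cam   = R_cw @ x_world + t_cw, with R_cw = R_wc^T and t_cw = -R_wc^T @ t_wc
    """
    R_cw = [[R_wc[c][r] for c in range(3)] for r in range(3)]  # rotation inverse = transpose
    t_cw = [-sum(R_cw[r][k] * t_wc[k] for k in range(3)) for r in range(3)]
    return R_cw, t_cw


def apply(R: Sequence[Sequence[float]], t: Sequence[float], x: Sequence[float]) -> List[float]:
    """Compute R @ x + t."""
    return [sum(R[r][k] * x[k] for k in range(3)) + t[r] for r in range(3)]
```

A quick check: the extrinsic maps the camera's own world position `t_wc` to the origin. The resulting `(R_cw, t_cw)` follows the same world-to-camera direction as the rotation/translation pair returned by OpenCV's `solvePnP`.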

Sorry for the confusion.

Stereolabs Support

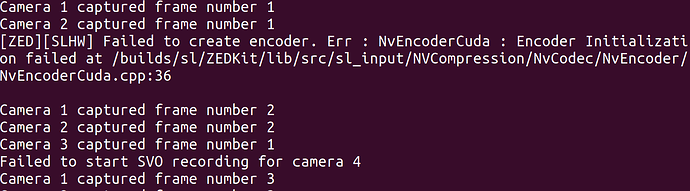

So I found that I can record three cameras at 2K at the same time. However, when I try to record four cameras, even HD720 or VGA does not work. This is the error message. Is there any potential solution?
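One possible factor worth checking when adding a fourth camera is aggregate USB bandwidth. The sketch below is a back-of-the-envelope estimate, assuming each ZED 2i sends an uncompressed side-by-side stereo frame in a 2-bytes-per-pixel YUV 4:2:2 format; the per-eye resolutions and maximum frame rates are the commonly published ZED 2i modes, and the helper names are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Per-eye resolution (width, height) and maximum frame rate of each ZED 2i mode.
MODES: Dict[str, Tuple[int, int, int]] = {
    "HD2K": (2208, 1242, 15),
    "HD1080": (1920, 1080, 30),
    "HD720": (1280, 720, 60),
    "VGA": (672, 376, 100),
}

# ASSUMPTION: uncompressed side-by-side stereo frames in YUV 4:2:2 (2 bytes per pixel).
BYTES_PER_PIXEL = 2


def stream_gbps(mode: str, fps: Optional[int] = None) -> float:
    """Approximate raw video payload of one camera in Gbit/s, ignoring USB protocol overhead."""
    width, height, max_fps = MODES[mode]
    fps = max_fps if fps is None else fps
    bytes_per_second = (2 * width) * height * BYTES_PER_PIXEL * fps  # two eyes side by side
    return bytes_per_second * 8 / 1e9


def total_gbps(mode: str, n_cameras: int, fps: Optional[int] = None) -> float:
    """Aggregate payload when n_cameras run in the same mode."""
    return n_cameras * stream_gbps(mode, fps)


if __name__ == "__main__":
    for name in MODES:
        print(f"{name:>6}: {stream_gbps(name):.2f} Gbit/s per camera, "
              f"{total_gbps(name, 4):.2f} Gbit/s for four")
```

Under these assumptions, HD720 at 60 fps (about 1.77 Gbit/s per camera) needs more bandwidth than HD2K at 15 fps (about 1.32 Gbit/s), so lowering the resolution without also lowering the frame rate does not necessarily reduce the load; `stream_gbps("HD720", 15)` gives about 0.44 Gbit/s. These figures can be compared with the 5 Gbit/s signaling rate of a USB 3.0 controller when several cameras share one.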