Hello, I am still working on extracting a point cloud of the human body, as I mentioned here: Extracing point cloud and mesh of human body

Now I have run into a new problem: when I segment the human body, the bounding box is sometimes too small to cover the whole body.

For example, this is the original image:

This is the mask:

As you can see, the mask cuts off too much, including half of the arm and part of the face. How can I address this problem?

Hi,

I see that you are using the object detection module.

Maybe you should use the body tracking module instead. With this module, the ZED SDK uses the keypoints of the skeleton to compute the bounding box.

As long as the keypoints are detected by the camera, the bounding box will include them.
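To illustrate the idea of a keypoint-driven box, here is a minimal sketch in plain C++ that computes the smallest axis-aligned box containing all detected 2D keypoints, padded by a margin and clamped to the image. It is not the ZED SDK's implementation: `Point2`, `Box` and `boxFromKeypoints` are hypothetical names, and treating non-finite or negative coordinates as "not detected" is an assumption about how missing keypoints are marked, so check how your SDK version reports them.

```cpp
#include <algorithm>
#include <cmath>
#include <optional>
#include <vector>

// Hypothetical 2D point type standing in for a 2D keypoint (e.g. sl::float2).
struct Point2 { float x; float y; };

// Axis-aligned box in pixel coordinates.
struct Box { float x_min, y_min, x_max, y_max; };

// Smallest box containing every valid keypoint, grown by `margin` pixels on
// each side and clamped to a width x height image. Keypoints with non-finite
// or negative coordinates are assumed to be "not detected" and are ignored.
std::optional<Box> boxFromKeypoints(const std::vector<Point2>& kps,
                                    float margin, int width, int height) {
    bool found = false;
    Box b{0, 0, 0, 0};
    for (const auto& p : kps) {
        if (!std::isfinite(p.x) || !std::isfinite(p.y) || p.x < 0 || p.y < 0) continue;
        if (!found) { b = {p.x, p.y, p.x, p.y}; found = true; }
        b.x_min = std::min(b.x_min, p.x);
        b.y_min = std::min(b.y_min, p.y);
        b.x_max = std::max(b.x_max, p.x);
        b.y_max = std::max(b.y_max, p.y);
    }
    if (!found) return std::nullopt;  // no keypoint detected at all
    b.x_min = std::max(0.0f, b.x_min - margin);
    b.y_min = std::max(0.0f, b.y_min - margin);
    b.x_max = std::min(static_cast<float>(width - 1), b.x_max + margin);
    b.y_max = std::min(static_cast<float>(height - 1), b.y_max + margin);
    return b;
}
```

The margin matters because keypoints sit at joint centres rather than on the body outline, so a box drawn tightly through them can still clip fingertips, hair or shoes.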

Best regards,

Benjamin Vallon

Stereolabs Support

Thank you for your reply. I have switched my code to the body tracking module, but it does not return a mask (mask.getWidth() == 0 and mask.getHeight() == 0). The ZED API reference says: "The mask information is only available for tracked objects (OBJECT_TRACKING_STATE::OK) that have a valid depth. Otherwise, Mat will not be initialized (mask.isInit() == false)". However, I have verified that my code gets the 2D and 3D keypoints, as well as the bounding box, for my body, so it should have valid depth. Can you help me find the bug? Here is my current code:

```cpp
// Body tracking initialization parameters
BodyTrackingParameters detection_parameters;
detection_parameters.detection_model = BODY_TRACKING_MODEL::HUMAN_BODY_MEDIUM; // specific to human skeleton detection
detection_parameters.enable_tracking = true;             // bodies keep the same ID between frames
detection_parameters.enable_body_fitting = true;         // run the fitting process so all available data for each person is filled in
detection_parameters.body_format = BODY_FORMAT::BODY_34; // 34-keypoint body model for SDK outputs

// Set runtime parameters
BodyTrackingRuntimeParameters detection_parameters_rt;
detection_parameters_rt.detection_confidence_threshold = 40;

zed.enablePositionalTracking();

// Enable body tracking with the initialization parameters
auto zed_error = zed.enableBodyTracking(detection_parameters);
if (zed_error != ERROR_CODE::SUCCESS) {
    cout << "enableBodyTracking: " << zed_error << "\nExit program.";
    zed.close();
    exit(-1);
}

sl::Bodies bodies;
Mat mask;
Mat image;
Mat point_cloud;
sl::uchar1 mask_value;
int ID = -1;

while (zed.grab() == ERROR_CODE::SUCCESS) {
    cout << "Frame: " << zed.getSVOPosition() << endl;
    zed.retrieveBodies(bodies, detection_parameters_rt);
    cout << "Body size: " << bodies.body_list.size() << endl;

    //zed.retrieveObjects(objects, detection_parameters_rt);
    //cout << objects.object_list.size() << " Object(s) detected\n\n";
    // if (!objects.object_list.empty()) {

    if (!bodies.body_list.empty()) {
        auto first_object = bodies.body_list.front();
        for (auto& kp_2d : first_object.keypoint_2d) {
            cout << "Keypoint " << kp_2d << endl;
        }

        mask = first_object.mask;
        cout << "Mask size: " << mask.getWidth() << " " << mask.getHeight() << endl;

        // If the mask is empty, skip this frame.
        // Sometimes the ZED SDK cannot generate a mask for the first few frames.
        if (mask.getWidth() == 0 || mask.getHeight() == 0) {
            continue;
        }
        // ... (rest of the loop omitted in the original post)
    }
}
```

Hi,

To have access to the 2D mask, you need to set the “BodyTrackingParameters::enable_segmentation” (https://www.stereolabs.com/docs/api/structsl_1_1BodyTrackingParameters.html#a96bdd339eb1e7b6f4a6d27d57ba320e1) parameter to true.
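Once the mask is available, extracting the body points amounts to looking up each foreground mask pixel in the organized point cloud. Below is a minimal sketch under two assumptions: the mask covers only the 2D bounding box (check the `mask` field description in the API reference for your SDK version), so its pixels are offset by the box's top-left corner before indexing the full-resolution cloud; and the caller has already copied the mask and the XYZ measure out of `sl::Mat` into plain row-major vectors. `Point3` and `applyBoxMask` are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical point type standing in for one XYZ entry of the cloud.
struct Point3 { float x, y, z; };

// Keeps the points of an organized, row-major cloud (img_w * img_h) whose
// pixel lies inside a box-sized mask with a non-zero value. The mask is
// mask_w * mask_h and its top-left corner sits at (x0, y0) in the image.
std::vector<Point3> applyBoxMask(const std::vector<Point3>& cloud,
                                 std::size_t img_w, std::size_t img_h,
                                 const std::vector<std::uint8_t>& mask,
                                 std::size_t mask_w, std::size_t mask_h,
                                 std::size_t x0, std::size_t y0) {
    std::vector<Point3> out;
    if (cloud.size() != img_w * img_h || mask.size() != mask_w * mask_h) return out;
    for (std::size_t my = 0; my < mask_h; ++my) {
        for (std::size_t mx = 0; mx < mask_w; ++mx) {
            if (mask[my * mask_w + mx] == 0) continue;   // background pixel
            const std::size_t u = x0 + mx, v = y0 + my;  // image coordinates
            if (u >= img_w || v >= img_h) continue;      // box runs off the image
            const Point3& p = cloud[v * img_w + u];
            // Pixels without valid depth are NaN/inf in the cloud; skip them.
            if (!std::isfinite(p.x) || !std::isfinite(p.y) || !std::isfinite(p.z)) continue;
            out.push_back(p);
        }
    }
    return out;
}
```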

Stereolabs Support

Thanks! I have successfully obtained the mask from the body tracking module. However, the mask still misses some parts of my body, especially the hands and feet.

For example, this is the original image

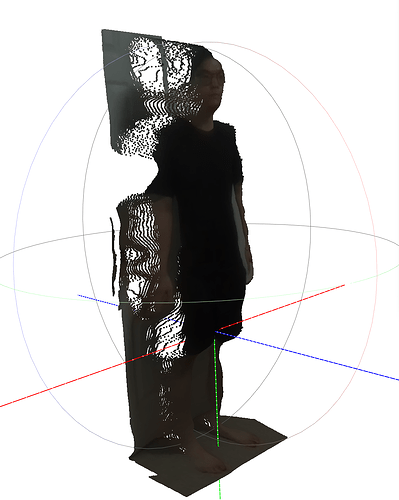

This is the mask and point cloud based on body tracking SDK

And this is the mask and point cloud based on the object tracking SDK

As we can see, the point cloud obtained from the body tracking SDK loses some parts of my right hand (left side on the image). On the other hand, the point cloud obtained from the object tracking SDK loses fingers on my hand, as well as part of my feet. How could I address this problem? Maybe contain both mask information?
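Combining the two masks is straightforward once both are expressed in full-image coordinates. The sketch below pastes each box-sized mask into an image-sized buffer and takes the pixel-wise union (logical OR). `pasteBoxMask` and `maskUnion` are hypothetical helpers, and the layout (row-major, one byte per pixel, non-zero meaning foreground) is an assumption.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Copies a box-sized mask (mask_w x mask_h, top-left at (x0, y0)) into a
// full-image mask of img_w x img_h, clipping anything outside the image.
std::vector<std::uint8_t> pasteBoxMask(const std::vector<std::uint8_t>& mask,
                                       std::size_t mask_w, std::size_t mask_h,
                                       std::size_t x0, std::size_t y0,
                                       std::size_t img_w, std::size_t img_h) {
    if (mask.size() != mask_w * mask_h) throw std::invalid_argument("mask size mismatch");
    std::vector<std::uint8_t> full(img_w * img_h, 0);
    for (std::size_t my = 0; my < mask_h; ++my)
        for (std::size_t mx = 0; mx < mask_w; ++mx) {
            const std::size_t u = x0 + mx, v = y0 + my;
            if (u < img_w && v < img_h && mask[my * mask_w + mx] != 0)
                full[v * img_w + u] = 255;
        }
    return full;
}

// Pixel-wise union (logical OR) of two same-sized full-image masks.
std::vector<std::uint8_t> maskUnion(const std::vector<std::uint8_t>& a,
                                    const std::vector<std::uint8_t>& b) {
    if (a.size() != b.size()) throw std::invalid_argument("mask size mismatch");
    std::vector<std::uint8_t> out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
        out[i] = (a[i] != 0 || b[i] != 0) ? 255 : 0;
    return out;
}
```

A union only recovers pixels that at least one module segmented correctly, and it also keeps any background that either mask wrongly includes, so it trades missing limbs for possible extra background points.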

Hi,

I see you are using BODY_FORMAT::BODY_34, which does not detect the fingers; this may explain why they are excluded from the mask.

Can you try switching to BODY_38? It should improve the result a bit.

Stereolabs Support

I feel that the segmentation module provided by the ZED SDK is not a good choice for me, as it does not provide fine-grained segmentation of the human body. I will try Mask R-CNN later.

BTW, why does my segmented human point cloud contain so many "shadows", such as the following? Is it because the SDK tries to estimate the depth behind me? I am not sure whether this will disappear once I get fine-grained masks. If not, how can I alleviate it, other than by reducing the depth range? These points look like outliers to me, so there should be some algorithm that can remove them.
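One common algorithm for this kind of outlier is statistical outlier removal, the idea behind PCL's `pcl::StatisticalOutlierRemoval` filter: points whose mean distance to their k nearest neighbours is unusually large compared with the rest of the cloud are dropped. The sketch below is a self-contained, brute-force version of that idea, not PCL's code; `Point3`, `removeStatisticalOutliers`, `k` and `std_mul` are illustrative names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical point type standing in for one XYZ entry of the cloud.
struct Point3 { float x, y, z; };

// Statistical outlier removal (brute force): for every point, average the
// distances to its k nearest neighbours, then drop points whose average
// exceeds (global mean + std_mul * global standard deviation).
std::vector<Point3> removeStatisticalOutliers(const std::vector<Point3>& pts,
                                              std::size_t k, double std_mul) {
    const std::size_t n = pts.size();
    if (k == 0 || n <= k) return pts;  // not enough points to have k neighbours

    std::vector<double> mean_dist(n);
    std::vector<double> d;
    d.reserve(n - 1);
    for (std::size_t i = 0; i < n; ++i) {
        d.clear();
        for (std::size_t j = 0; j < n; ++j) {
            if (i == j) continue;
            const double dx = pts[i].x - pts[j].x;
            const double dy = pts[i].y - pts[j].y;
            const double dz = pts[i].z - pts[j].z;
            d.push_back(std::sqrt(dx * dx + dy * dy + dz * dz));
        }
        // Move the k smallest distances to the front (in no particular order).
        std::nth_element(d.begin(), d.begin() + static_cast<std::ptrdiff_t>(k - 1), d.end());
        double sum = 0.0;
        for (std::size_t m = 0; m < k; ++m) sum += d[m];
        mean_dist[i] = sum / static_cast<double>(k);
    }

    // Global statistics of the per-point mean distances.
    double mu = 0.0;
    for (double v : mean_dist) mu += v;
    mu /= static_cast<double>(n);
    double var = 0.0;
    for (double v : mean_dist) var += (v - mu) * (v - mu);
    var /= static_cast<double>(n);
    const double threshold = mu + std_mul * std::sqrt(var);

    std::vector<Point3> kept;
    for (std::size_t i = 0; i < n; ++i)
        if (mean_dist[i] <= threshold) kept.push_back(pts[i]);
    return kept;
}
```

The neighbour search is O(n²), so a full-resolution cloud would need a k-d tree or voxel downsampling first. Also, dense clusters of shadow points act as each other's neighbours and may survive this filter; it works best against sparse, scattered points.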

There are multiple depth modes available in the ZED SDK; which one are you using? It is set through “InitParameters” (https://www.stereolabs.com/docs/api/structsl_1_1InitParameters.html#a191b170713754cc337c00b5ebb5629fb).

I would recommend using the “NEURAL” depth mode, if that’s not already the case.

Stereolabs Support

I am already using NEURAL, to get the best depth estimation performance.
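Since the depth mode is already NEURAL, a cheap complementary filter is to keep only the points whose distance from the camera is close to the median distance of the segmented cloud; points smeared behind the person along the silhouette edges tend to fall well outside that band. This is a minimal sketch under two assumptions: the cloud is expressed in the camera frame (so the distance from the origin is the distance from the camera), and the person covers most of the mask, so the median lands on the body. `keepNearMedianRange`, `distanceFromCamera` and the `band` parameter are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical point type standing in for one XYZ entry of the cloud.
struct Point3 { float x, y, z; };

// Euclidean distance from the camera origin; assumes a camera-frame cloud,
// so the result does not depend on which axis points forward.
float distanceFromCamera(const Point3& p) {
    return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
}

// Keeps points whose distance from the camera lies within `band` (same unit
// as the cloud) of the median distance of all finite points.
std::vector<Point3> keepNearMedianRange(const std::vector<Point3>& pts, float band) {
    std::vector<float> r;
    r.reserve(pts.size());
    for (const auto& p : pts) {
        const float d = distanceFromCamera(p);
        if (std::isfinite(d)) r.push_back(d);  // skip NaN/inf (no depth)
    }
    if (r.empty()) return {};
    const auto mid = r.begin() + static_cast<std::ptrdiff_t>(r.size() / 2);
    std::nth_element(r.begin(), mid, r.end());
    const float median = *mid;

    std::vector<Point3> kept;
    for (const auto& p : pts) {
        const float d = distanceFromCamera(p);
        if (std::isfinite(d) && std::fabs(d - median) <= band) kept.push_back(p);
    }
    return kept;
}
```

The band has to be wide enough to cover the body's full extent in depth, including arms stretched toward the camera; too narrow a band will cut off limbs, so treat this as a coarse first pass before a neighbourhood-based filter.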

Using Mask R-CNN is a better solution.