I have identified the target object with the custom detector. Can I directly get the point cloud data that belongs to it and store it for later research?

Hi @NineSeven

The ZED SDK does not provide functions to crop the point cloud.

You can try to use the bounding box information and a third-party library like PCL or Open3D to perform this task.
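As a rough illustration of that suggestion, the sketch below keeps only the points that fall inside the axis-aligned extent of the eight box corners, using NumPy alone. It assumes the point cloud is already an (N, 3) array of XYZ values and the box is an (8, 3) array of corners in the same frame and units. `crop_points_to_box` is a hypothetical helper, not part of the ZED SDK, PCL, or Open3D.

```python
import numpy as np

def crop_points_to_box(points, box_corners):
    """Return the points inside the axis-aligned extent of a 3D box.

    points:      (N, 3) array of XYZ coordinates (invalid rows may be NaN/inf).
    box_corners: (8, 3) array with the corners of the 3D bounding box.
    Both inputs are assumed to share the same frame and units.
    """
    points = np.asarray(points, dtype=float).reshape(-1, 3)
    corners = np.asarray(box_corners, dtype=float).reshape(-1, 3)
    lo, hi = corners.min(axis=0), corners.max(axis=0)

    # Drop invalid depth samples first, then test the remaining points.
    finite = np.isfinite(points).all(axis=1)
    inside = np.zeros(len(points), dtype=bool)
    inside[finite] = ((points[finite] >= lo) & (points[finite] <= hi)).all(axis=1)
    return points[inside]

# Made-up data: a 14 x 5 x 6 cm box roughly 0.9 m in front of the camera.
corners = np.array([[x, y, z]
                    for x in (0.0, 0.14)
                    for y in (0.0, 0.05)
                    for z in (-0.90, -0.84)])
cloud = np.array([[0.07, 0.02, -0.87],        # inside the box
                  [0.50, 0.02, -0.87],        # outside along X
                  [np.nan, np.nan, np.nan]])  # invalid depth sample
cropped = crop_points_to_box(cloud, corners)
```

The cropped array can then be written to disk, for example with `np.save`, and loaded later by whichever library is used for the analysis.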

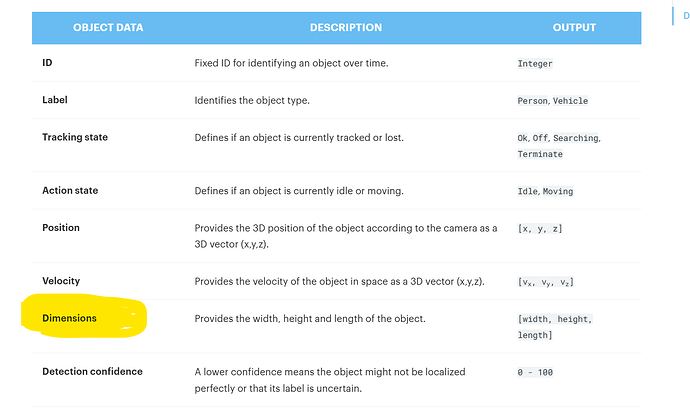

I learned from the introduction to Detection Outputs that we can get information such as the object's dimensions. In my experiments, I found that this result is not precise. How do you compute the size of the object?

We project the 2D bounding box to 3D.

More details to understand your problem would be really appreciated.

In a nutshell, I’d like to know how you got the dimension information mentioned in the image below. I measured some simple boxes and found that the actual length, width and height did not match the program output.

@NineSeven Can you please provide an example to show what you get and what you expect instead?

My object is 14 cm long, 6 cm wide, and 5 cm high. When I detect an object and I output its dimension, I hope to get the result of “140 60 50”, but now the result is “188 75 70”.

The 3D bounding box has its front face parallel to the camera plane; it is not oriented like the object. So if the object is rotated, the dimensions of the bounding box can change.
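To get a feel for the size of this effect, here is a small geometric sketch. It assumes a rectangular footprint rotated about the vertical axis and enclosed by a box whose faces stay parallel to the camera axes. The 140 x 60 mm footprint comes from the example above, and `axis_aligned_footprint` is a hypothetical helper, not an SDK function.

```python
import math

def axis_aligned_footprint(width, length, yaw_deg):
    """Extents of the axis-aligned box enclosing a width x length rectangle
    that has been rotated by yaw_deg about the vertical axis."""
    t = math.radians(yaw_deg)
    c, s = abs(math.cos(t)), abs(math.sin(t))
    return width * c + length * s, width * s + length * c

for yaw in (0, 15, 30, 45):
    w, l = axis_aligned_footprint(140.0, 60.0, yaw)
    print(f"yaw={yaw:2d} deg -> width={w:6.1f} mm, length={l:6.1f} mm")
```

The height is unaffected by a rotation about the vertical axis, so only the two horizontal extents change in this model.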

I had already taken the angle of the target object into account. In my experiment the object was placed parallel to the camera plane, but the measurements still had a large error. Second, I want to know the underlying logic of the dimensions output. Is it a simple subtraction of the 3D bounding box vertex coordinates, or is it a matrix transformation that uses depth information?

It’s a coordinate difference filtered by a Kalman filter to keep the information stable.
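Since the SDK source is not public, the following is only a sketch of what "a coordinate difference smoothed by a Kalman filter" could look like, not the SDK's implementation. It assumes the box is given as eight XYZ corners, takes the per-axis extent as max minus min, and smooths each axis with a scalar constant-state Kalman filter. The class name, the noise variances, and the simulated data are all made up for illustration.

```python
import itertools
import numpy as np

def box_extents(corners):
    """Per-axis coordinate difference (max - min) of the box corners."""
    corners = np.asarray(corners, dtype=float).reshape(-1, 3)
    return corners.max(axis=0) - corners.min(axis=0)

class ConstantKalman:
    """Independent scalar Kalman filters (one per axis), constant-state model."""

    def __init__(self, process_var=1e-7, measurement_var=2.5e-5):
        self.q = process_var        # how fast the true size is allowed to drift
        self.r = measurement_var    # variance of a single-frame measurement
        self.x = None               # current estimate, shape (3,)
        self.p = None               # variance of the estimate, shape (3,)

    def update(self, z):
        z = np.asarray(z, dtype=float)
        if self.x is None:          # first frame: initialise from the measurement
            self.x = z.copy()
            self.p = np.full(z.shape, self.r)
            return self.x
        p_pred = self.p + self.q              # predict: same state, more uncertainty
        k = p_pred / (p_pred + self.r)        # Kalman gain
        self.x = self.x + k * (z - self.x)    # correct with the new measurement
        self.p = (1.0 - k) * p_pred
        return self.x

# Simulated frames: a 0.14 x 0.05 x 0.06 m box whose measured size jitters.
unit_corners = np.array(list(itertools.product((0.0, 1.0), repeat=3)))
true_size = np.array([0.14, 0.05, 0.06])
rng = np.random.default_rng(0)
kf = ConstantKalman()
for _ in range(200):
    noisy_corners = unit_corners * (true_size + rng.normal(0.0, 0.005, 3))
    smoothed = kf.update(box_extents(noisy_corners))
```

In this model the filter only stabilises the values over time. Any bias in the corners themselves, for example from depth noise on a reflective surface, passes straight through to the smoothed dimensions.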

So the dimensions output does not use depth information? Is it not the actual size of the object, but a measurement at the pixel level?

I wonder what went wrong. Can you provide the code for the dimensions part?

I apologize, but we cannot disclose the SDK source code.

Can you please provide pictures showing the problem, and showing what you expect?

It will be useful for debugging and for finding any issues that need to be fixed.

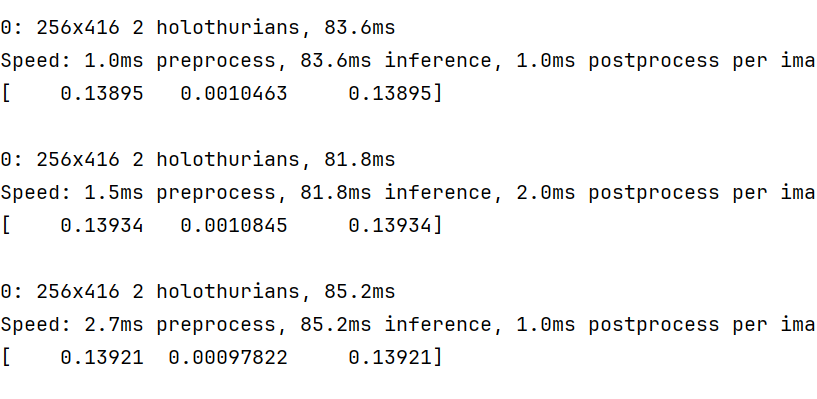

The image above shows my program running and printing the dimensions. I expect the printed values to match the real size of the target object.

I see a few problems with this image:

- the object is very close. What’s the distance? Did you set the InitParameters correctly to match this distance?

- there is a strong reflection on the surface that can generate depth noise.

Which depth mode are you using? Can you post your initialization code?

Can you please record an SVO in the conditions that you are testing and share it with us for testing?

Because the object to be detected is small, detection works poorly when it is too far away, so I set the detection distance to about 70-100 cm.

My initialization code is as follows:

    import pyzed.sl as sl

    init_params = sl.InitParameters()
    init_params.coordinate_units = sl.UNIT.METER
    init_params.depth_mode = sl.DEPTH_MODE.ULTRA
    init_params.coordinate_system = sl.COORDINATE_SYSTEM.RIGHT_HANDED_Y_UP
    init_params.depth_maximum_distance = 2  # in metres, given UNIT.METER

I cannot see the setting for the depth_minimum_distance so the default value is used. I recommend using 0.3.

I also recommend changing sl.DEPTH_MODE.ULTRA to sl.DEPTH_MODE.NEURAL for improved results.

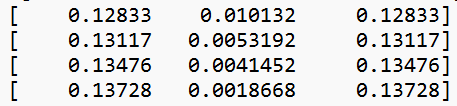

I followed your suggestion, and the depth accuracy has improved. I have another question: when I output the dimensions array [width, height, length], as shown in the figure, the width and length values are always the same. How can I fix this?

This seems like a bug. Can you post the code you used to display the values?

If your code is correct then there is something to be fixed in the Python wrapper or even at the ZED SDK level.
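One way to narrow down where such a problem comes from is to recompute the extents from the box corners and compare them with the reported dimensions. The sketch below assumes the corners are available as an (8, 3) array, that width, height and length map to the X, Y and Z extents respectively, and that a small tolerance is acceptable because the reported values are filtered over time. `check_dimensions` and the sample numbers are hypothetical.

```python
import numpy as np

def check_dimensions(corners, reported, tol=1e-3):
    """Compare reported [width, height, length] with extents from the corners.

    Assumes width ~ X extent, height ~ Y extent, length ~ Z extent.
    """
    corners = np.asarray(corners, dtype=float).reshape(-1, 3)
    reported = np.asarray(reported, dtype=float)
    extents = corners.max(axis=0) - corners.min(axis=0)
    return {
        "recomputed": extents,
        "reported": reported,
        "matches": bool(np.allclose(extents, reported, atol=tol)),
        "width_equals_length": bool(np.isclose(reported[0], reported[2], atol=tol)),
    }

# Made-up example: the corners describe a 0.14 x 0.05 x 0.06 m box,
# but the reported array repeats the width in the length slot.
box = np.array([[x, y, z]
                for x in (0.0, 0.14)
                for y in (0.0, 0.05)
                for z in (0.0, 0.06)])
report = check_dimensions(box, reported=[0.14, 0.05, 0.14])
print(report)
```

If the recomputed X and Z extents differ while the reported width and length are identical, the printing code is unlikely to be the cause.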

My code for printing the values is simple, so the problem should not be there:

    for obj in objects.object_list:
        print("{}".format(obj.dimensions))

I plugged my own model into the custom detector sample from the official website, set parameters such as the depth mode, and did not modify anything else.

Can you check this part of the source code accordingly?