Hi,

We are using a ZED-X cam along with a Jetson Orin AGX (we can also stream to a more powerful GPU if needed), our goal is to obtain a dense point cloud (or ideally a mesh) of a human body with the best possible accuracy.

We noticed we could get a “good enough” point cloud using the provided depth sensing code sample and tuning some parameters (depth_min, max, model type etc…). Though to capture the whole body, several point of view are needed, we tried to align point clouds related to all view points by using the poses provided in the positional tracking module. Though the result is misaligned suggesting further alignement (ICP or other methods) would be needed.

Then, looking at the spatial mapping module, we noticed the documentation mentions it can be used to capture a digital model of an object. When trying to use the provided sample, we noticed it mainly “focuses” on wall and background often missing the body or clos objects (our objects are approx 20 to 80 cm far from the camera)… and rarely when it captures it, it is really low resolution (despite the resolution set to high and the mapping range set to near).

In your SDK, is there a solution to create a 3D reconstruction of a full body (mesh or point cloud) with several point of views?

Thanks a lot,

You can set the maximum depth to be used to focus on the closest objects.

You must set the value of sl::SpatialMappingParameters::range_meter:

Ok, so let me clarify this, we did experiments with both depth_sensing and spatial mapping with the sample (C++) provided in the SDK and tuned some parameters:

For spatial_mapping :

sl::SpatialMappingParameters::range_meterto2m(which seems like the minimal value).sl::SpatialMappingParameters::resolutiontoHIGH.DEPTH_MODEtoNEURAL_PLUS- Asking for a point cloud instead of a mesh

For depth_sensing :

depth_minimum_distanceto 0.1mdepth_maximum_distanceto 0.8mDEPTH_MODEtoNEURAL_PLUS

Our results are :

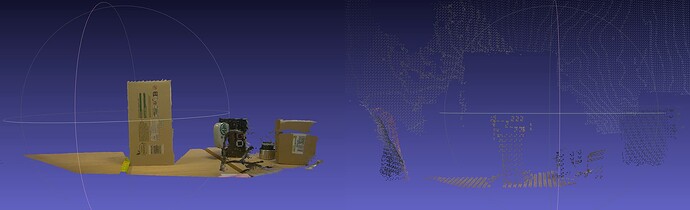

depth_sensing (LEFT) spatial_mapping (RIGHT) on a box (same scene, same camera position)

- In

depth_sensingthe result is way more precise that inspatial_mappingmode - the

spatial_mappingfocuses on object that have well defined shaped (rectangles, planes…) but misses irregular objects like a head, clothes… or maybe easily attached to wall in the background?

Is there a way for spatial_mapping to achieve a resolution closer to what the depth_sensing module is providing? If no, is there a preferred way to align depth_sensing results from different camera poses?

Thanks for your help,

As explained in the documentation, this does not affect the Spatial Mapping module.

Where have you found this limitation?

Hi,

The documentation mentions a limitation to 1m (see here : Spatial Mapping Overview - Stereolabs “The range can be set between 1m to 12m.”).

When experimenting with the SDK, I noticed a warning when setting it to any value lower than 2m, indicating it has been set to the minimal allowed value which is 2m.

There’s an error in the documentation.

The correct depth range for spatial mapping is [2,20] m.

Ok, so sl::SpatialMappingParameters::range_meter to its minimal value e.g 2m is indeed what I did then.

Though, the result is not satisfying (see my screeshots above), do you have any hindsights?

Thanks,

Unfortunately, the Spatial Mapping module is not designed to achieve the quality that you need.

It’s a module designed to scan environment, not bodies or small objects.

I recommend you explore alternative processing by focusing on depth and point clouds, using for example ICP (Iterative Closest Point) algorithms from Open3D or PCL.

In this case you can use the depth_minimum_distance and depth_maximum_distance settings to filter out outliers.