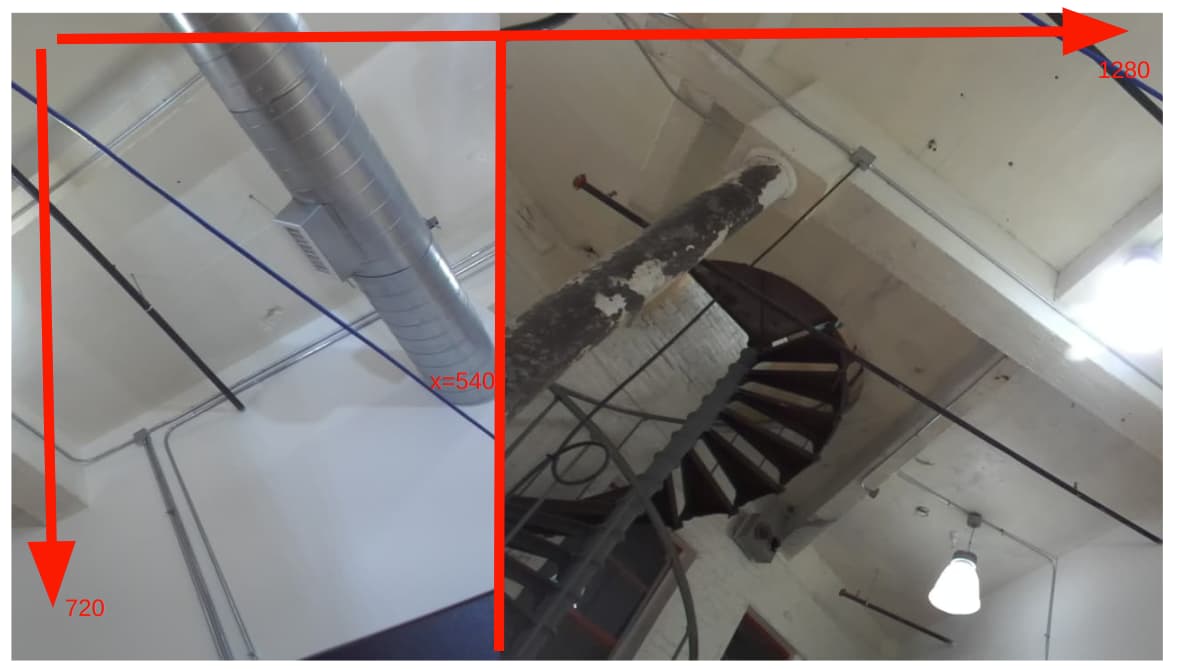

We are retrieving images at 1280x720. The vertical line where the image “tears” is at around x=530-560.

We don’t use SVO recordings, so I don’t have any to send you. As for the images, I can upload them to Google Drive, but I am still unsure how to send them to you privately without posting them publicly here. Do you have an email address, or does this forum have direct messaging?

Regarding the deep copy, we were following the guidance on `deep_copy` in https://www.stereolabs.com/docs/api/python/classpyzed_1_1sl_1_1Mat.html#a2e8f08eb3ebc14e70692b0a3eecd6756

> deep_copy : defines if the memory of the Mat need to be duplicated or not. The fastest is deep_copy at False but the sl::Mat memory must not be released to use the numpy array.
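
To make sure we are reading that correctly, here is our mental model sketched with plain NumPy as a stand-in. Nothing in it is the ZED API: `mat_buffer`, `shared_view`, and `independent_copy` are hypothetical names, and column 540 is just an illustrative value inside the range where we see the tear. It also assumes the buffer is overwritten while the array is still in use, which we have not confirmed is what happens in our case.

```python
import numpy as np

# Stand-in for the memory owned by an sl.Mat (hypothetical; not the ZED API).
HEIGHT, WIDTH = 720, 1280
mat_buffer = np.zeros((HEIGHT, WIDTH), dtype=np.uint8)

# Analogue of deep_copy=False: a view that shares memory with the buffer.
shared_view = mat_buffer[:, :]
# Analogue of deep_copy=True: an independent copy of the pixels.
independent_copy = mat_buffer.copy()

# Simulate a new frame being partially written into the same buffer.
mat_buffer[:, :540] = 255

# The view now mixes new pixels (left) with old pixels (right), like a tear.
view_is_mixed = bool(shared_view[:, :540].all() and not shared_view[:, 540:].any())
# The copy still holds the old frame everywhere.
copy_is_intact = not independent_copy.any()

print(np.shares_memory(shared_view, mat_buffer), view_is_mixed, copy_is_intact)
```

If this model is right, the array obtained without a deep copy is only valid while the underlying buffer is left untouched, which is what prompts the question below.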

How can we ensure that the sl::Mat memory doesn’t get released in Python without using a deep copy?