Ah, yes, the second solution still requires getting the full depth map and aligning it with the segmented RGB image. I would like to ask how the point_cloud.getValue(x,y) function works. Does it take the RGB and depth information at pixel (x,y) from the RGB image and the depth map, respectively, and then synthesize the 3D point? If so, I may not need to get the point cloud of the whole scene first. Instead, once I have the pixels of the mask, I can synthesize the point cloud only within the mask, which is essentially the second solution.
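To make the idea concrete, here is a minimal sketch of what I mean by synthesizing the point cloud only within the mask. It assumes a plain pinhole camera model and a depth map that is already aligned pixel-for-pixel with the RGB image. The function name `backproject_masked` and the intrinsics `fx`, `fy`, `cx`, `cy` are placeholders of mine, not ZED SDK calls, and I am not claiming this is what getValue does internally.

```python
import math

def backproject_masked(depth, rgb, mask, fx, fy, cx, cy):
    """Back-project only the masked pixels into 3D (pinhole model assumed).

    depth, rgb and mask are row-major 2D lists of the same size.
    Returns a list of (X, Y, Z, (r, g, b)) tuples in the camera frame.
    """
    points = []
    for v, row in enumerate(mask):          # v = pixel row (image y)
        for u, inside in enumerate(row):    # u = pixel column (image x)
            if not inside:
                continue                    # pixel is outside the mask
            z = depth[v][u]
            if not math.isfinite(z) or z <= 0:
                continue                    # skip invalid depth (NaN, inf, <= 0)
            x = (u - cx) * z / fx           # standard pinhole back-projection
            y = (v - cy) * z / fy
            points.append((x, y, z, rgb[v][u]))
    return points

# Toy 2x2 example with made-up values (not real camera data).
depth = [[1.0, 2.0], [float("nan"), 4.0]]
rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
mask = [[True, False], [True, True]]
points = backproject_masked(depth, rgb, mask, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(points)
```

With real image-sized data, the same arithmetic could be vectorized with NumPy instead of looping over pixels. The intrinsics would have to come from the camera calibration for the same resolution as the depth map.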

Regarding the points on my face, no, I haven’t tried spatial mapping yet. Will the quality of the point cloud and mesh from spatial mapping be better than from depth sensing? If I use spatial mapping, I should also be able to get the point cloud and mesh for just the masked region, right?

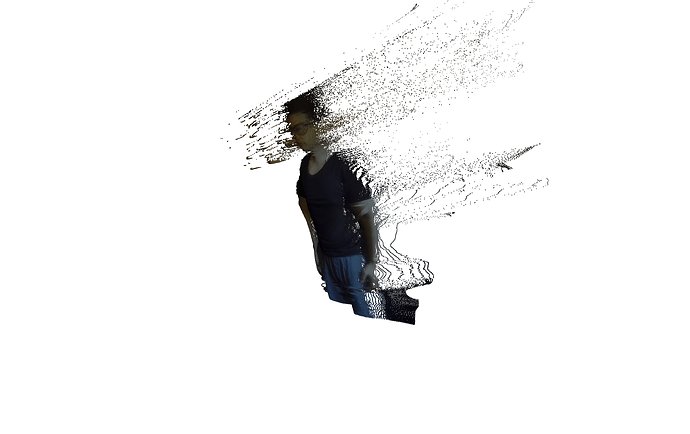

Moreover, I found that when I generate the point cloud of the whole scene, there is a very long streak of “shadow points” that should not occur, as I posted in “Incorrect initial camera position when displaying point cloud captured by Zed 2i on MeshLab”. In the extracted human model, there are also a lot of “shadow points” when I look at it from the side. I’m not sure whether this is the real cause of the problem, and I don’t know how to address it.