I ran the ZED body tracking sample, but I don’t know how to save the data from the ZED 2.

Hello and thank you for reaching out,

Can you tell me a bit more about what you are trying to achieve here? What do you mean by saving the data? I assume you want to read the data somewhere else afterwards?

Best regards

Antoine Lassagne

Senior Developer - ZED SDK

Stereolabs Support

Yes, I want to read the data somewhere else afterwards. After running the body tracking sample, I can’t find anywhere to read the data from.

Hi Antoine,

I find it quite surprising that the SDK includes examples of live body tracking with a completed skeleton (as well as object detection), but does not include a way to save or export this data directly.

Linking to UE or Unity is absolutely a great thing to offer, thanks - however, this approach means that we are all at the mercy of your dev team keeping the SDK/plugins up to date (eg when is the UE5 plugin officially being released?)

As a digital artist/3D animator who is working in the field of virtual production, I’m very impressed and satisfied with your hardware, but the fact that you are not offering a way to save/export the tracked skeleton to an FBX file for direct importing into programs like Blender seems like a huge oversight on your part.

Have we missed something in your documentation? Do you offer a working example of this already?

ZEDfu for example can record .SVO files - but where are those files supported outside of your ecosystem?

Can we get an .SVO file that can record the human skeleton or object detection tracking data?

I spent weeks researching my options and just paid a fair bit of money for a Brekel Body license (Brekel Body v3 (Beta)) so that I can stream the live human tracking skeleton data from my Zed2i directly into Touch Designer.

BUT this does not really feel like a win for me, when your SDK apparently supports this already somehow.

It’s just that there’s no easy way to save the information without first doing a computer science degree and learning how to code in Python or C++.

I hope this feedback is taken seriously, I’m not trying to be overly critical as I understand that my goals don’t necessarily align with the original intention of your product - but your response to OP’s question makes it seem like you guys do not recognize that this is a very real situation for a significant number of digital artists who are looking to start virtual production studios at home.

If you can support direct saving & exporting of each of the different features of your product (eg, updating each of the Zed Examples on github with save & export), I guarantee that the visibility of your brand and your sales will increase in value and quantity very quickly.

There is a digital revolution going on right now in cinema and your hardware is clearly standing out amongst a sea of discontinued and inferior products - you just need to lower the barrier for entry a tiny bit more.

I will personally make many youtube tutorials and instagram videos about how to use your products if/when these features become supported via your official software offerings and I’m sure I’m not the only one.

Cheers,

gabriel@nezovic.com

Hello,

Thank you for these clear and detailed explanations. They are valuable to us for knowing which features are needed in the future.

You are not the first one asking for this. We are investigating ways to save skeleton animations, and FBX animation is one of them (and probably the best one). In the meantime, if you use the FBX SDK from Autodesk, you can already do that yourself, but I understand that’s a whole different amount of work.

There are also lighter ways to save your data, like writing a JSON file, but it will really depend on what software you’ll use to read it in the end. If you’re also writing the software that will read the saved data, it will be a lot easier and a JSON might be a good solution for you. You’ll have to write some code, but nothing difficult.
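To give a rough idea of the JSON route, here is a minimal sketch. It assumes each tracked body exposes an `id` and a `keypoint` sequence of [x, y, z] joints, as the objects in the body tracking sample appear to. The function names, the one-frame-per-line file layout, and the `timestamp_ms` field are only illustrative choices, not part of the ZED SDK.

```python
import json
import math


def _clean(value):
    """Turn a coordinate into a JSON-safe number (NaN becomes null)."""
    value = float(value)  # also converts NumPy scalars to plain floats
    return None if math.isnan(value) else value


def bodies_to_record(timestamp_ms, bodies):
    """Convert one frame of tracked bodies into a JSON-serializable dict.

    Assumption: every body has `.id` and `.keypoint` (one [x, y, z] per joint).
    """
    return {
        "timestamp_ms": int(timestamp_ms),
        "bodies": [
            {
                "id": int(body.id),
                "keypoints": [[_clean(v) for v in joint] for joint in body.keypoint],
            }
            for body in bodies
        ],
    }


def append_frame(path, record):
    """Append one frame to the file as a single JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def load_frames(path):
    """Read back every saved frame, in order."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

In a capture loop you would call `append_frame(path, bodies_to_record(timestamp, bodies))` once per frame, and the reading software would call `load_frames(path)` later. Writing one line per frame means a crash mid-recording should only lose the last frame.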

Unity or Unreal+Livelink are often the right solution because they are easy to use and customize and handle a lot of use cases (not only skeleton tracking). As for our UE5 plugin, it will be released very soon, with the 3.8 version of the SDK.

I’ll let my colleague @BenjaminV correct me if I’m wrong

Best regards

Antoine Lassagne

Senior Developer - ZED SDK

Stereolabs Support

Hey,

Thanks so much for the reply!

I really appreciate your time and support with all of this.

To give you some more insight into my experience - I originally purchased the ZED2i about a month ago with the intention of using it with livelink + UE5 for both virtual camera and body tracking and was told prior to my purchase that the 3.8 update would be released in about a week.

In the meantime, I have tested every one of the ZED apps on offer, I’ve followed every part of your documentation, tested every github example (using Python), compiled the UE4 plugin from source as well as testing the ‘virtual production’ fork for UE5 and unfortunately none of these solutions were really able to get me up and running with the software and workflow that I would like to use. Although I did learn a huge amount of new skills on the way, so no complaints really haha.

I’m not afraid of getting my hands dirty with code and building something from the examples, but I am not a software developer and I simply do not understand what steps would need to be taken for me to write python code that records the data from the “ZED SDK - Body Tracking” github example and save it as an FBX file.

I even found some guides that walk through something similar using mediapipe/opencv, but I could not decipher how to use this same approach with your SDK https://www.analyticsvidhya.com/blog/2021/07/building-a-hand-tracking-system-using-opencv/

The only real “issue” with all this, if I’m honest, is not that it might take your team “X” amount of time to develop a polished update, or that there will be times when your product does not work with the latest software out there, but rather that there are no other options available in the meantime.

Consider iOS app development, where many apps become obsolete or lose functionality as Apple continues to update their operating system. If your product is going to lose functionality (and therefore sales), because another company has made a change to their software (which is inevitable), then you are always going to be spending money playing catch up.

Having FBX as a backup, means that your customers will always be supported - without additional and continued investment from your business.

One other thing that would be absolutely amazing, is being able to export the object detection to FBX - eg each detected object is assigned a separate bone (or null/empty object)

If we can easily track People, Objects and Cameras, your products would become indispensable to our industry overnight.

In the long run, Tracking Faces and Hands would be an amazing part of the Virtual Production dream as well.

It’s totally fair that there may be resolution and fidelity issues with this. However, if you could find a way to write an example or blog post showing how to directly sync your SDK with Google’s MediaPipe, then the problem is basically solved.

eg Face and Hand Landmarks Detection using Python - Mediapipe, OpenCV - GeeksforGeeks

Sorry for the wall of text, but I am excited to see what you guys do with all of this tech in the future.

Cheers,

gabriel@nezovic.com

Hey,

Don’t be sorry. Like I said, we find this feedback really helpful! We understand your point of view regarding FBX being some kind of fallback when our compatibility is not enough.

I looked at your link, but I don’t think it is what you are looking for. I did not see anything about saving to FBX. I was thinking of a more direct approach, through the FBX SDK that Autodesk distributes. Unfortunately, there are not a lot of tutorials about this, and it’s C++, but the documentation seems all right. That would probably be our approach, but coding something clean, with Python wrappers, etc., will take time and we have other features to deal with before that.

If you only want to code with python, it seems that what you need is here

There are fewer examples unfortunately, but you can find some on this link or this link. Don’t hesitate to ask for help again and keep us updated

Thanks to feedback like yours, we may give more importance to this feature in the future.

Regards

Antoine Lassagne

Senior Developer - ZED SDK

Stereolabs Support

Hi,

You should also know that you can save animation data directly in Unreal or Unity.

It also works using Live Link for UE, so you don’t necessarily need the upcoming ZED UE5 plugin.

Best regards,

Benjamin Vallon

Hello,

I too purchased your camera because TouchDesigner’s website specifically mentions its compatibility, yet I came across the same problem: I can’t directly connect the camera to TouchDesigner without having to program in Python, or connect via Unity or Unreal and then connect to TouchDesigner. I am very disappointed. I’ve been trying to get it to connect for weeks now and still have no result.

Did you manage to find a way?

Thank you

Hi,

Here (ZED | Derivative) you can find the ZED integration in TouchDesigner.

We do not implement this integration ourselves; it is done by the TouchDesigner team. I would recommend asking them directly for more detailed information.

Best regards,

Benjamin Vallon

What specifically are you trying to achieve? Do you want to use the video feed from the cameras or do you want to use tracking information and so on?

In this project I am getting tracking information from Python and streaming it to TouchDesigner via TCP. No problems so far.

Hey, thanks for getting back. I’m trying to connect the camera to TouchDesigner to be able to use the tracking information / skeleton in a live A/V performance. What do you think? I’ve been able to install the Python libraries etc., but I am not able to bring the information into Touch.

Hi, unfortunately TouchDesigner does not have full SDK integration. As far as I understand, it only gets the RGB video feed, depth, point cloud… From CHOPs you only get info about the positional tracking of the camera itself relative to the 3D space. For my project, I also needed people tracking, so I used the documentation as a reference ( www.stereolabs.com/docs/body-tracking/using-body-tracking/) and created a Python script that runs in parallel to TouchDesigner, where I get the information and send it by TCP to Touch. I also tried sending the info via OSC with no problems, but I chose TCP.
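For anyone wanting a starting point, the sending side of that approach could look roughly like the sketch below. It is not the actual script from this project. It assumes TouchDesigner is listening for TCP connections (for example with a TCP/IP DAT in server mode) and that one JSON document per line is an acceptable format. The port number 7000, the function names, and the message layout are all hypothetical.

```python
import json
import socket


def encode_frame(bodies):
    """Serialize one frame as a single newline-terminated JSON line.

    `bodies` is a list like [{"id": 1, "keypoints": [[x, y, z], ...]}, ...].
    """
    return (json.dumps({"bodies": bodies}) + "\n").encode("utf-8")


def send_frame(sock, bodies):
    """Send one complete frame; sendall() retries until every byte is written."""
    sock.sendall(encode_frame(bodies))


def connect(host="127.0.0.1", port=7000):
    """Open a TCP connection to the listener (host and port are assumptions)."""
    return socket.create_connection((host, port))
```

The tracking loop would call `connect()` once and then `send_frame(sock, bodies)` for every frame. The trailing newline gives the receiver a clear boundary between frames, which matters because TCP itself is a byte stream with no message boundaries.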

Thanks for the info! I’ve been trying over the weekend, but I’m still having problems… I believe it’s the libraries, so I’ll get in touch with support and see if they can help me out!

Here’s the problem (just in case you happen to know):

I can’t run the examples that come with the camera such as: body tracking, object detection and positional tracking.

My sys:

Windows 10(x64)

Cuda 11

Python 3.10.7

NullFunctionError

Attempt to call an undefined function glutInit, check for bool(glutInit) before calling

File "C:\Program Files (x86)\ZED SDK\samples\bodytracking\python\ogl_viewer\viewer.py", line 819, in init glutInit()

File "C:\Program Files (x86)\ZED SDK\samples\bodytracking\python\body_tracking.py", line 86, in viewer.init(camera_info.calibration_parameters.left_cam, obj_param.enable_tracking, obj_param.body_format)

When I try to initialize it by changing parameters, it tells me that it can’t find:

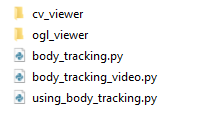

from cv_viewer.utils import *

Thanks for all the info still!

Hi! Make sure you have the “cv_viewer” folder in the same location as your Python script. You can find that folder in the GitHub repository: zed-examples/body tracking/python at master · stereolabs/zed-examples.

Let me know how it goes. Maybe I can put something together (ZED to Touch) once I’m back from a trip to give you a starting point. Some Python knowledge is needed to get stable communication between the ZED SDK and Touch.

You also have other options like C++, and you could use the same approach of using a streaming protocol to send to Touch (UDP, OSC, TCP, etc.). The plugin for Unity is also a good option for using the ZED SDK.
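A quick way to check this before running the sample is a small helper like the one below. It only looks for the two helper folders that appear in this thread (`cv_viewer` and `ogl_viewer`) next to a given script. The function name is made up for illustration.

```python
from pathlib import Path


def missing_helper_folders(script_path, required=("cv_viewer", "ogl_viewer")):
    """Return the helper folders that are NOT next to the given script."""
    script_dir = Path(script_path).resolve().parent
    return [name for name in required if not (script_dir / name).is_dir()]
```

Calling `missing_helper_folders(__file__)` at the top of your own script should return an empty list when both folders were copied alongside it.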

Hello! Me again, hope you’re well. Sadly, i’m still ffing stuck on this one, and was hoping for your guidance once again:

We have managed to get OSC from the ZED 2i (via Conda) into Touch through an OSC In DAT, but we are getting one complete string of numbers without any commas for our skeleton, meaning it is impossible to extract arguments by index.

We have attempted to change the formatting of the keypoint object in the ZED 2i code so that it sends floats instead of a string, but this level of Python is far too advanced for us.

Do you have any idea / advice / knowledge on the topic?

Thanks once again!
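One likely cause of that symptom is that the whole keypoint array is being sent as a single string argument, so the receiver sees one long text value. A possible fix is to send every coordinate as its own float argument. The sketch below builds such a message by hand following the OSC 1.0 layout (padded address, padded type tags, big-endian 32-bit floats), so it needs no extra packages. The address scheme `/zed/body/<id>/<joint>` and the function names are assumptions for illustration; a library such as python-osc should be able to do the same encoding for you.

```python
import struct


def _osc_string(text):
    """OSC strings are ASCII, null-terminated, and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_float_message(address, values):
    """Build an OSC message whose arguments are separate 32-bit floats."""
    type_tags = "," + "f" * len(values)  # e.g. ",fff" for x, y, z
    payload = b"".join(struct.pack(">f", float(v)) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_keypoints(sock, target, body_id, keypoints):
    """Send one message per joint with x, y, z as three float arguments.

    `sock` is a UDP socket and `target` a (host, port) tuple.
    """
    for index, (x, y, z) in enumerate(keypoints):
        address = f"/zed/body/{body_id}/{index}"  # hypothetical address scheme
        sock.sendto(osc_float_message(address, [x, y, z]), target)
```

With a UDP socket created via `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`, calling `send_keypoints(sock, ("127.0.0.1", 7000), body.id, body.keypoint)` would send each joint as its own message (the port is whatever your OSC In operator listens on). Each coordinate then arrives as an individual argument that can be picked out by index.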